该脚本用于列出在子表上没有对应索引的外键,没有索引可能引发额外的表锁:

"You should almost always index foreign keys. The only exception is when the matching unique or primary key is never updated or deleted." When a foreign key is unindexed, DML on the parent primary key results in a share row exclusive table lock (or share-subexclusive table lock, SSX) on the child table, preventing DML from other transactions against the child table. If the DML affects several rows in the parent table, the lock on the child table is obtained and released immediately for each row in turn. Despite the speed of the lock-release process, this can cause significant amounts of contention on the child table during periods of heavy update/delete activity on the parent table. When a foreign key is indexed, DML on the parent primary key results in a row share table lock (or subshare table lock, SS) on the child table. This type of lock prevents other transactions from issuing whole table locks on the child table, but does not block DML on either the parent or the child table. Only the rows relating to the parent primary key are locked in the child table.

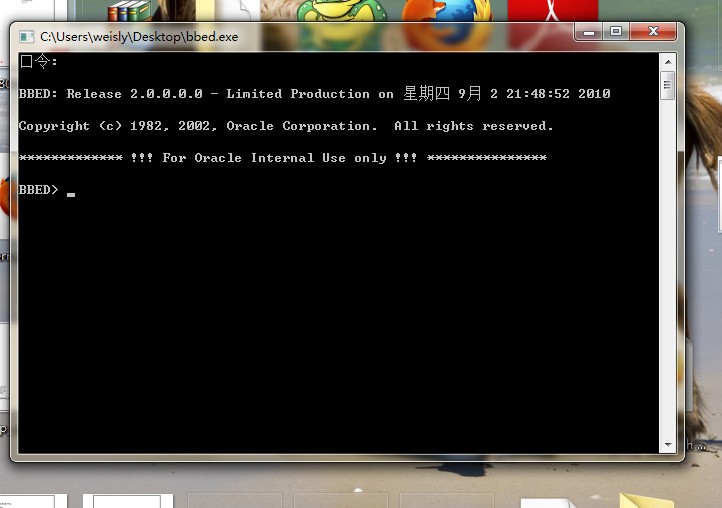

Script:

REM List foreign keys with no matching index on child table - causes locks

set linesize 150;

col owner for a20;

col COLUMN_NAME for a20;

SELECT c.owner,

c.constraint_name,

c.table_name,

cc.column_name,

c.status

FROM dba_constraints c, dba_cons_columns cc

WHERE c.constraint_type = 'R'

AND c.owner NOT IN

('SYS',

'SYSTEM',

'SYSMAN',

'EXFSYS',

'WMSYS',

'OLAPSYS',

'OUTLN',

'DBSNMP',

'ORDSYS',

'ORDPLUGINS',

'MDSYS',

'CTXSYS',

'AURORA$ORB$UNAUTHENTICATED',

'XDB',

'FLOWS_030000',

'FLOWS_FILES')

AND c.owner = cc.owner

AND c.constraint_name = cc.constraint_name

AND NOT EXISTS

(SELECT 'x'

FROM dba_ind_columns ic

WHERE cc.owner = ic.table_owner

AND cc.table_name = ic.table_name

AND cc.column_name = ic.column_name

AND cc.position = ic.column_position

AND NOT EXISTS

(SELECT owner, index_name

FROM dba_indexes i

WHERE i.table_owner = c.owner

AND i.index_Name = ic.index_name

AND i.owner = ic.index_owner

AND (i.status = 'UNUSABLE'

OR i.partitioned = 'YES'

AND EXISTS

(SELECT 'x'

FROM dba_ind_partitions ip

WHERE status =

'UNUSABLE'

AND ip.

index_owner =

i.

owner

AND ip.

index_Name =

i.

index_name

UNION ALL

SELECT 'x'

FROM dba_ind_subpartitions isp

WHERE status =

'UNUSABLE'

AND isp.

index_owner =

i.

owner

AND isp.

index_Name =

i.

index_name))))

ORDER BY 1, 2

/