ASM Basic Concepts

Any reproduction must credit the original source, otherwise legal action may be taken: https://www.askmac.cn/archives/know-oracle-asm.html

Oracle Automatic Storage Management Overview

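Automatic Storage Management (ASM) is a feature of Oracle Database that gives database administrators a simple storage management interface that is consistent across all server and storage platforms. ASM is a vertically integrated file system and volume manager built specifically for Oracle database files. It delivers the performance of direct asynchronous I/O together with the manageability of a file system. ASM saves DBA time, provides the flexibility to manage dynamic database environments, and improves efficiency. The key benefits of ASM are: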

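- Simplifies and automates storage management

- Improves storage utilization and agility

- Delivers predictable performance, availability, and scalability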

Oracle Cloud File System Overview

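Oracle Cloud File System (CloudFS) simplifies storage management, provisioning automation, and storage consolidation for general-purpose files to an unprecedented degree. CloudFS is a storage cloud infrastructure that provides resource pooling, network accessibility, rapid elasticity, and rapid provisioning, all of which are key requirements of cloud computing environments. The product includes: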

- Oracle ASM Dynamic Volume Manager (ADVM)

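ADVM provides a general-purpose volume management service and a standard device driver interface, so system administrators can manage volumes the same way across platforms. ACFS and third-party file systems can use ASM dynamic volumes to create and manage file systems that take full advantage of ASM features. As a result, ADVM volumes can easily be resized without downtime to meet the storage needs of the file system.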

- Oracle ASM Cluster File System (ACFS)

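A general-purpose, POSIX-, X/OPEN-, and Windows-compliant file system designed for single-node and cluster configurations. ACFS is managed with native operating system commands, ASM asmcmd, and Oracle Enterprise Manager. ACFS supports advanced data services such as point-in-time copy snapshots, file system replication, and tagging, as well as file system security and encryption.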

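Automatic Storage Management is Oracle's automated database storage solution. It was introduced in release 10g, ahead of other RDBMS vendors, and further refined in 11g. ASM provides a simple, effective storage management interface for database administration that works across servers and storage platforms. ASM combines a file system and a volume manager in one product designed specifically for Oracle database files. It delivers high-performance asynchronous I/O while keeping management as simple as a file system. ASM increases the scalable capacity of the database and saves DBA time, so a highly dynamic database environment can be managed with more agility and efficiency.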

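ASM exists to manage file storage for the RDBMS:

- Note that ASM does not perform I/O reads and writes on behalf of the RDBMS. A common misconception is that the RDBMS sends I/O requests to ASM and ASM performs the actual I/O. This is wrong.

- The actual I/O is still performed by the RDBMS processes, just as with raw devices without ASM

- ASM is therefore not an I/O middle layer, so there is no such thing as an I/O bottleneck caused by ASM

- For ASM, a LUN disk can be a raw device or, from 10.2.0.2 onward, a block device used directly

- File types suitable for storage in ASM include datafiles, controlfiles, redo logs, archive logs, flashback logs, spfiles, RMAN backups, block change tracking files, and Data Pump files

- Starting with 11gR2, ASM introduced the ACFS feature, which can store general-purpose files of any type. However, ACFS does not support storing datafiles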

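ASM basic concepts:

- The smallest unit of ASM storage is the allocation unit (AU). It is usually 1 MB, and 4 MB is recommended on Exadata

- At its core, ASM stores files

- A file is divided into pieces called extents

- Before 11g an extent was always one AU. From 11g onward an extent can be 1, 8, or 64 AUs

- ASM uses a file extent map to track the location of each file extent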

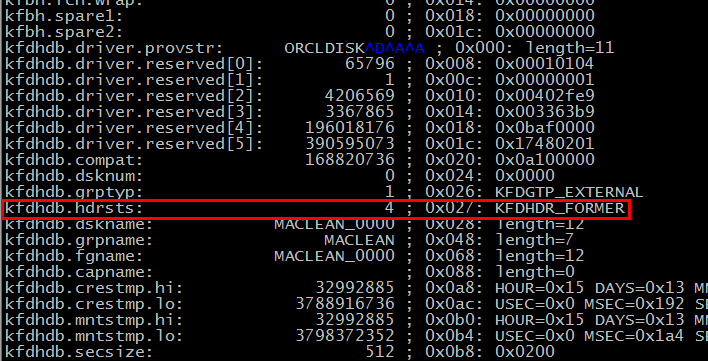

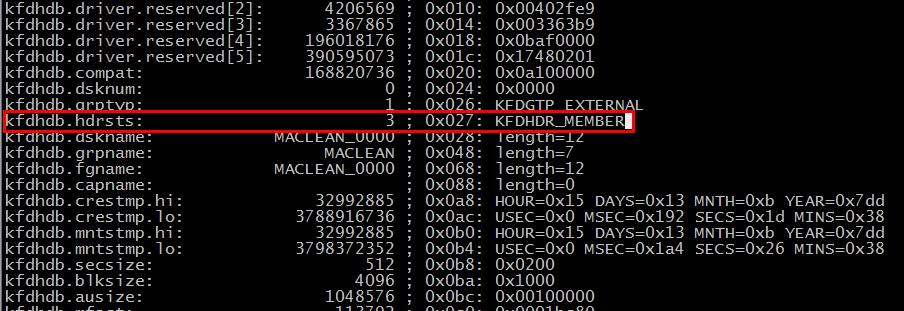

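- ASM keeps its metadata on the LUN disks themselves, starting with the disk header, rather than in a data dictionary

- The RDBMS instance also caches the file extent map in its shared pool, and server processes use this cached map when they perform I/O

- The ASM instance uses instance/crash recovery in much the same way as a regular RDBMS instance, so an ASM instance can always recover after a crash.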
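The extent-map arithmetic above can be sketched in Python. This is a minimal, hypothetical model, not ASM code. It assumes a 1 MB AU and 11g-style variable extents whose size steps up from 1 AU to 8 AUs at extent 20,000, and to 64 AUs at extent 40,000. These thresholds are an assumption, so check the documentation for your release and disk group compatibility settings. Mirror copies are ignored, so the model only tells you which extent, and which AU inside it, holds a given byte of a file.

```python
from __future__ import annotations

# Hypothetical sketch, not ASM source code. Assumptions:
#   * 1 MB allocation units (AU_SIZE)
#   * variable extents of 1, 8 and 64 AUs, switching at extent 20,000 and
#     extent 40,000 (ASSUMED thresholds; verify for your release)
#   * mirror copies are ignored: this locates the extent, not the disk
AU_SIZE = 1024 * 1024
TIERS = [(20_000, 1), (40_000, 8)]  # (first extent number of the NEXT tier, AUs per extent)
LAST_TIER_AUS = 64


def extent_size_aus(xnum: int) -> int:
    """Number of AUs in extent `xnum` under the assumed tiering."""
    for next_tier_start, aus in TIERS:
        if xnum < next_tier_start:
            return aus
    return LAST_TIER_AUS


def locate(offset: int, au_size: int = AU_SIZE) -> tuple[int, int, int]:
    """Map a byte offset in a file to
    (extent number, AU index inside that extent, byte offset inside that AU)."""
    au = offset // au_size              # AU number counted from the start of the file
    byte_in_au = offset % au_size
    tier_start = 0
    for next_tier_start, aus in TIERS:
        aus_in_tier = (next_tier_start - tier_start) * aus
        if au < aus_in_tier:
            return tier_start + au // aus, au % aus, byte_in_au
        au -= aus_in_tier               # skip past this whole tier
        tier_start = next_tier_start
    return tier_start + au // LAST_TIER_AUS, au % LAST_TIER_AUS, byte_in_au
```

Larger extents for big files mean fewer entries in the file extent map that the RDBMS caches in its shared pool. That is the motivation usually given for variable extent sizes.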

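ASM storage is presented as disk groups:

- A disk group (DG) is visible to RDBMS instances. For example, a DATA disk group appears to the RDBMS as a storage location named '+DATA', and you can create a tablespace on it, for example: create tablespace ONASM datafile '+DATA' size 10M

- Each disk group contains one or more failure groups (FG)

- A failure group is defined as a set of disks

- A disk here can be a raw physical volume, a disk partition, a LUN representing a storage array, or an LVM volume or NAS device

- Disks in different failure groups must not share a single point of failure. Otherwise ASM redundancy is ineffective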

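High availability provided by ASM:

- ASM mirrors data so that it can recover from disk failures

- Users can choose among three redundancy levels: EXTERNAL, NORMAL, and HIGH

- EXTERNAL means ASM does no mirroring itself and relies on the underlying storage array to provide it. Under EXTERNAL redundancy, any write error forces the disk group to be dismounted. In this mode every ASM disk must be present and healthy, otherwise the disk group cannot be mounted

- NORMAL means ASM creates one additional copy of each extent for redundancy. By default all files are mirrored, so every file extent has 2 copies. If write errors occur on 2 disks that are partners of each other, the disk group is forcibly dismounted. If the failed disks are not partners, no data is lost and the disk group remains available.

- HIGH means ASM creates two additional copies of each extent for even higher redundancy. The failure of 2 disks that are partners of each other does not cause data loss. However, no further partner disks may fail.

- Data mirroring relies on failure groups and extent partnering. Under NORMAL or HIGH redundancy, ASM can tolerate the loss of all disks in one failure group.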
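The redundancy rules above can be expressed as a small, deliberately simplified Python model. It assumes that any two partner disks share at least one extent, which follows from the definition of partners. For HIGH redundancy it pessimistically assumes that three mutually partnered disks might hold all three copies of some extent. The function name `survives` and the partner dictionaries are hypothetical.

```python
from __future__ import annotations

from itertools import combinations

# Simplified, hypothetical model of when a disk group loses data.
# EXTERNAL: ASM keeps one copy, so any failed disk is fatal for the group.
# NORMAL:   two failed disks that are partners hold both copies of some extent.
# HIGH:     pessimistically, three mutually partnered failed disks *might*
#           hold all three copies of some extent.
COPIES = {"EXTERNAL": 1, "NORMAL": 2, "HIGH": 3}


def survives(redundancy: str, partners: dict[int, set[int]], failed: set[int]) -> bool:
    """True if, under this model, no extent has lost every copy."""
    copies = COPIES[redundancy]
    failed = set(failed)
    if len(failed) < copies:
        return True  # fewer failures than copies can never remove every copy
    for group in combinations(sorted(failed), copies):
        # data is at risk if every pair in this group is a partner pair
        if all(b in partners.get(a, set()) for a, b in combinations(group, 2)):
            return False
    return True
```

For example, put disks 0 and 1 in one failure group and disks 2 and 3 in another. Disks are only partnered across failure groups, so `survives("NORMAL", {0: {2, 3}, 1: {2, 3}, 2: {0, 1}, 3: {0, 1}}, {0, 1})` returns True. Losing a whole failure group is tolerated, as stated above.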

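How failure groups are used for mirroring

- ASM mirroring is not like RAID 1

- ASM mirrors at the granularity of file extents. The copies of an extent are distributed across multiple disks, which are called partners

- Partner disks reside in one or more separate failure groups

- ASM chooses partners automatically and limits each disk to fewer than 10 partners

- If a disk fails, ASM updates its extent map so that subsequent reads are directed to the remaining healthy partners

- In 11g, changes to files are tracked while a disk is offline, so they can be reapplied when the disk comes back online. This only works if the disk has not been offline longer than DISK_REPAIR_TIME (default 3.6 hours). This situation typically arises with storage controller failures or similar short-lived disk outages:

- The change tracking uses a bitmap of changed file extents. The bitmap tells ASM which extents must be copied from the healthy partners to the disk being repaired. This feature is called fast mirror resync

- 10g has no fast mirror resync. A disk that goes offline is dropped automatically, with no repair window

- A disk that cannot be brought back online must be dropped. ASM then chooses other disks and restores redundancy by copying data through a rebalance operation, all automatically in the background.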
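The following is a hypothetical Python sketch of the fast mirror resync bookkeeping described above. While a disk is offline, each write sets a bit for the affected extent. When the disk returns, the bitmap yields the extents to copy back from healthy partners. The 3.6-hour default comes from the text. The class and method names are illustrative and do not correspond to ASM internals.

```python
# Hypothetical sketch of fast mirror resync bookkeeping (11g behaviour as
# described above). Names are illustrative, not ASM internals.
DISK_REPAIR_TIME_HOURS = 3.6  # default stated in the text


class OfflineDiskTracker:
    def __init__(self) -> None:
        self.stale_bitmap = 0      # bit n set => extent n changed while offline
        self.offline_hours = 0.0

    def record_write(self, xnum: int) -> None:
        """A write hit extent `xnum` while this disk was offline."""
        self.stale_bitmap |= 1 << xnum

    def advance(self, hours: float) -> None:
        """Let time pass while the disk stays offline."""
        self.offline_hours += hours

    def bring_online(self, repair_time: float = DISK_REPAIR_TIME_HOURS):
        """Return the extent numbers to copy back from healthy partners, or None
        if the repair window was exceeded and the disk would be dropped instead."""
        if self.offline_hours > repair_time:
            return None
        bits, xnum, stale = self.stale_bitmap, 0, []
        while bits:
            if bits & 1:
                stale.append(xnum)
            bits >>= 1
            xnum += 1
        return stale
```

Without such a bitmap, which is the 10g behaviour, the only option is to drop the disk and let a rebalance rebuild redundancy. That copies far more data than resyncing a few stale extents.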

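Rebalancing

- Rebalancing is the process of moving file extents between disks to balance the I/O load across a disk group

- Rebalancing runs asynchronously in the background and can be monitored, for example through V$ASM_OPERATION

- In a cluster, the rebalance of a given disk group runs on only one ASM instance. It cannot be sped up by spreading it across several cluster nodes

- When a disk is added or removed, ASM automatically starts rebalancing data in the background

- The speed and intensity of rebalancing are controlled by the asm_power_limit parameter

- asm_power_limit defaults to 1. Its range is 0 to 11, or 0 to 1024 starting with 11.2.0.2. It controls the number of background processes that perform the rebalance. Level 0 means no rebalancing is performed

- During a rebalance, I/O performance (mainly throughput and response time) may suffer. The degree of impact depends on the capability of the storage and on the rebalance power. The default asm_power_limit=1 does not cause excessive impact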
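The goal of a rebalance is to bring every disk to the same fullness ratio, and this can be illustrated with simple arithmetic. The sketch below only counts AUs and pairs over-full disks with under-full ones. It is a hypothetical model, not the ASM algorithm, and it ignores mirroring and partnering constraints. The asm_power_limit check uses the ranges given above: 0 to 11, or 0 to 1024 from 11.2.0.2.

```python
from __future__ import annotations


# Hypothetical arithmetic sketch: bring every disk to the same fullness ratio.
# It counts AUs only; real ASM moves extents and respects partnering.
def max_power_limit(version: tuple[int, ...]) -> int:
    """Upper bound for asm_power_limit per the ranges stated above."""
    return 1024 if version >= (11, 2, 0, 2) else 11


def check_power_limit(power: int, version: tuple[int, ...]) -> None:
    if not 0 <= power <= max_power_limit(version):
        raise ValueError(f"asm_power_limit={power} out of range for {version}")


def rebalance_plan(used: dict[str, int], capacity: dict[str, int]) -> list[tuple[str, str, int]]:
    """Return (source disk, target disk, AUs to move) triples."""
    total_used, total_cap = sum(used.values()), sum(capacity.values())
    # target usage proportional to each disk's capacity (same fullness ratio)
    target = {d: total_used * capacity[d] // total_cap for d in used}
    # hand out the rounding remainder one AU at a time, in disk-name order
    for d in sorted(used)[: total_used - sum(target.values())]:
        target[d] += 1
    surplus = [[d, used[d] - target[d]] for d in sorted(used) if used[d] > target[d]]
    deficit = [[d, target[d] - used[d]] for d in sorted(used) if used[d] < target[d]]
    moves, i, j = [], 0, 0
    while i < len(surplus) and j < len(deficit):
        n = min(surplus[i][1], deficit[j][1])
        moves.append((surplus[i][0], deficit[j][0], n))
        surplus[i][1] -= n
        deficit[j][1] -= n
        if surplus[i][1] == 0:
            i += 1
        if deficit[j][1] == 0:
            j += 1
    return moves
```

For example, consider adding an empty disk C next to two disks that each hold 60 AUs. `rebalance_plan({"A": 60, "B": 60, "C": 0}, {"A": 100, "B": 100, "C": 100})` gives `[("A", "C", 20), ("B", "C", 20)]`.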

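Performance

- ASM stripes file extents across the disks of a disk group to maximize the available I/O bandwidth

- Two stripe sizes are available: coarse striping, which is 1 AU, and fine striping, which is 128 KB

- Even with fine striping, file extents are normal-sized. The data is striped in smaller pieces that are distributed round robin across several extents

- By default ASM does not let the RDBMS read the secondary mirror copies of extents. Even so, I/O remains balanced, because the primary extents themselves are spread evenly across all disks

- By default the RDBMS always reads the primary extent. Starting with 11.1, the ASM_PREFERRED_READ_FAILURE_GROUPS parameter can make the local node read extents preferentially from a given failure group. The feature is designed mainly for extended-distance RAC and is not recommended for ordinary ASM deployments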
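The difference between coarse and fine striping can be modelled as address arithmetic. The sketch makes several assumptions: 1-AU extents, a 1 MB AU, the 128 KB fine stripe unit mentioned above, and a fine stripe width of 8 extents with chunks dealt out round robin. The stripe width in particular is an assumption, and the real ASM layout may differ in detail. The function names are hypothetical.

```python
from __future__ import annotations

# Hypothetical model of coarse vs fine striping. Assumptions: 1-AU extents,
# 1 MB AU, 128 KB fine stripe unit, ASSUMED fine stripe width of 8 extents.
AU_SIZE = 1024 * 1024
FINE_STRIPE = 128 * 1024
FINE_WIDTH = 8  # assumption


def coarse_location(offset: int, au_size: int = AU_SIZE) -> tuple[int, int]:
    """(extent number, byte offset inside the extent) for coarse striping."""
    return offset // au_size, offset % au_size


def fine_location(offset: int, au_size: int = AU_SIZE,
                  stripe: int = FINE_STRIPE, width: int = FINE_WIDTH) -> tuple[int, int]:
    """(extent number, byte offset inside the extent) for fine striping."""
    set_bytes = width * au_size                 # one stripe set fills `width` extents
    set_no, rel = divmod(offset, set_bytes)
    chunk, byte_in_chunk = divmod(rel, stripe)
    extent = set_no * width + chunk % width     # round robin across the set
    byte_in_extent = (chunk // width) * stripe + byte_in_chunk
    return extent, byte_in_extent
```

Under these assumptions, a 1 MB sequential read touches one extent with coarse striping. With fine striping it touches eight extents, and therefore potentially eight disks.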

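Other notes

- ASM is not only for RAC. Single-instance databases benefit from ASM as well

- A single ASM instance on a node can serve multiple RDBMS database instances

- In a RAC environment ASM must also be clustered, so that metadata updates can be coordinated

- Starting with 11.2, ASM is no longer installed in the RDBMS home. It is installed together with Clusterware in the Grid Infrastructure home (GRID HOME).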

Disk Group:

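A disk group is a logical object managed by ASM, and it consists of multiple ASM disks. Every disk group is self-describing, like a standard file system: all metadata about space usage in the disk group is contained entirely within the disk group itself. If ASM can find all the disks that belong to a disk group, it needs no other metadata.

File space is allocated from a disk group. An ASM file is always contained entirely within a single disk group. However, a disk group may contain files that belong to multiple databases, and the files of a single database can be spread across several disk groups. Most real deployments do not create many disk groups, typically 3 to 4.

Disk groups offer three redundancy levels, described above.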

ASM Disk

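An ASM disk is the basic persistent storage unit that makes up a disk group. When an ASM disk is added to a disk group, it gets either a disk name specified by the administrator or one assigned automatically by the system. This name is different from the device name the OS uses to access the device. In a cluster, the same disk may appear under different device names on different nodes, for example /dev/sdc on Node1 and /dev/sdd on Node2. An ASM disk must be accessible through direct disk I/O from every instance that uses the disk group.

In fact, to the Oracle RDBMS, accessing an ASM disk is no different from accessing an ordinary file, unless ASMLIB is used. To stress it again, ASMLIB is not required by ASM. Normally an ASM disk is a partition of an OS-visible LUN that covers all disk space not reserved by the operating system. Most operating systems reserve the first block of a LUN for the partition table. ASM always writes to the first block of an ASM disk, so you must make sure ASM does not overwrite the partition table in the first few blocks. On Solaris, for example, do not include the first few cylinders in the partition. The LUN can be a simple physical JBOD or a virtual LUN managed by an advanced storage array, either directly attached or on a SAN. An ASM disk can be anything that can be accessed through the open system call, except a file on a local file system. Even files on NFS can be used as ASM disks, which makes ASM convenient for users who prefer NAS. Rather than NFS, however, I would recommend using iSCSI.

Note that a logical volume managed by an ordinary Logical Volume Manager (LVM) can be used as an ASM disk. However, this is not a recommended combination unless you have no better option. If you must use it, do not mirror or stripe at the LVM level.

ASM distributes every file evenly, in AU-sized pieces, across all disks in the disk group. Every ASM disk is kept at the same usage ratio, which keeps the I/O load roughly equal across all disks of the same disk group. Because ASM balances the load across the disks of a disk group, splitting one physical disk into 2 ASM disks in the same disk group brings no performance benefit. Placing 2 partitions of the same physical disk in 2 different disk groups, by contrast, is a valid layout.

When redundancy is enabled for a disk group, a single ASM disk is just a unit of failure. In 10g, a write failure on that ASM disk automatically drops the disk from the disk group, provided the loss of the disk can be tolerated.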

Allocation Unit

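Each ASM disk is divided into many allocation units (AUs). An AU is 1 MB to 64 MB in size and always a power of 2 in MB. The AU is also the basic unit of allocation for a disk group, so the usable space on an ASM disk is always a whole number of AUs. The header of each ASM disk holds a table in which each entry represents one AU on that disk. A file extent pointer gives the ASM disk number and the AU number, which together describe the extent's physical location. All space operations are done in AU units, so fragmentation is neither a concept nor a problem in ASM.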
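Because an extent pointer is just a (disk number, AU number) pair, the byte offset of an AU on its disk is the AU number multiplied by the AU size. The hypothetical helper below does that arithmetic and builds the matching dd argument list. With bs equal to the AU size, setting skip to the AU number copies exactly one AU, which is the technique used in the dd examples later in this article. The helper only builds the command and does not run it. The /tmp/au.dmp output path is an arbitrary placeholder.

```python
from __future__ import annotations

# Hypothetical helper: (AU number, AU size) -> byte offset and dd arguments.
VALID_AU_MB = (1, 2, 4, 8, 16, 32, 64)  # 1 MB to 64 MB, powers of two


def au_byte_offset(au_number: int, au_size_mb: int = 1) -> int:
    """Byte offset of an AU from the beginning of its ASM disk."""
    if au_size_mb not in VALID_AU_MB:
        raise ValueError(f"unsupported AU size: {au_size_mb} MB")
    return au_number * au_size_mb * 1024 * 1024


def dd_args(device: str, au_number: int, au_size_mb: int = 1,
            out: str = "/tmp/au.dmp") -> list[str]:
    """dd argument list that copies exactly one AU (placeholder output path)."""
    au_byte_offset(au_number, au_size_mb)  # validates the AU size
    # with bs equal to the AU size, skip=<AU number> lands on the AU boundary
    return ["dd", f"if={device}", f"of={out}", f"bs={au_size_mb * 1024}k",
            f"skip={au_number}", "count=1"]
```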

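An AU (1 MB to 64 MB) is small enough that a file always spans many AUs, so the file can be spread over many disks without creating hot spots. An AU is also large enough to be accessed in a single I/O, which gives better throughput and efficient sequential access. The time to access an AU is dominated by the disk transfer rate rather than by seeking to the start of the AU. Disk group rebalancing is also carried out one AU at a time.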
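The size trade-off can be checked with a back-of-the-envelope model in which each I/O costs a fixed positioning time plus size divided by transfer rate. The 5 ms and 100 MB/s defaults are assumed example values, not measurements.

```python
# Back-of-the-envelope model: one I/O = fixed positioning time + size / rate.
# The 5 ms and 100 MB/s defaults are ASSUMED example values.
def au_read_efficiency(au_mb: float, position_ms: float = 5.0, mb_per_s: float = 100.0) -> float:
    """Fraction of the I/O time spent actually transferring data."""
    transfer_ms = au_mb / mb_per_s * 1000.0
    return transfer_ms / (position_ms + transfer_ms)


if __name__ == "__main__":
    for size_mb in (8 / 1024, 1, 4, 64):   # an 8 KB block, then several AU sizes
        print(f"{size_mb:>9.4f} MB: {au_read_efficiency(size_mb):.1%} of time transferring")
```

With those assumptions, an 8 KB read spends under 2% of its time transferring data, a 1 MB AU about two thirds, and a 64 MB AU over 99%. This is the sense in which a 1 MB to 64 MB AU is "large enough".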

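ASM background processes and their roles:

GMON: ASM disk group monitor process

ASMB: ASM background network process

RBAL: ASM rebalance master process

ARBx: rebalance slave processes that actually carry out the rebalance

MARK: AU resync coordinator process

ASM foreground processes and their roles:

ASM clients (mainly RDBMS databases and CRSD) create foreground processes when they connect to the ASM instance. These foreground processes are usually named oracle+ASM_<process>_<product> (for example: oracle+ASM_DBW0_DB1).

OCR-specific foreground process: oracle+ASM1_ocr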

ASM-related V$ and X$ views

| View name | Underlying X$ table | Description |
|---|---|---|
| V$ASM_DISKGROUP | X$KFGRP | Performs disk discovery and lists disk groups |
| V$ASM_DISKGROUP_STAT | X$KFGRP_STAT | Shows disk group status |
| V$ASM_DISK | X$KFDSK, X$KFKID | Performs disk discovery and lists disks with their usage metrics |
| V$ASM_DISK_STAT | X$KFDSK_STAT, X$KFKID | Lists disks and their usage metrics |
| V$ASM_FILE | X$KFFIL | Lists ASM files, including metadata |
| V$ASM_ALIAS | X$KFALS | Lists ASM aliases, files, and directories |
| V$ASM_TEMPLATE | X$KFTMTA | Lists available templates and their attributes |
| V$ASM_CLIENT | X$KFNCL | Lists DB instances connected to ASM |
| V$ASM_OPERATION | X$KFGMG | Lists rebalance operations |
| N/A | X$KFKLIB | Available ASMLIB paths |
| N/A | X$KFDPARTNER | Lists disk-partner relationships |
| N/A | X$KFFXP | Extent map of all ASM files |
| N/A | X$KFDAT | Extent list of all ASM disks |
| N/A | X$KFBH | Describes the ASM cache |
| N/A | X$KFCCE | Linked list of ASM blocks |
| V$ASM_ATTRIBUTE (new in 11g) | X$KFENV (new in 11g) | ASM attributes; the X$ table also shows some hidden attributes |
| V$ASM_DISK_IOSTAT (new in 11g) | X$KFNSDSKIOST (new in 11g) | I/O statistics |
| N/A | X$KFDFS (new in 11g) | |
| N/A | X$KFDDD (new in 11g) | |
| N/A | X$KFGBRB (new in 11g) | |
| N/A | X$KFMDGRP (new in 11g) | |
| N/A | X$KFCLLE (new in 11g) | |
| N/A | X$KFVOL (new in 11g) | |
| N/A | X$KFVOLSTAT (new in 11g) | |
| N/A | X$KFVOFS (new in 11g) | |
| N/A | X$KFVOFSV (new in 11g) | |

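X$KFFXP contains the mapping between files, extents, and AUs. From this X$ view you can trace how the extents of a given file are striped and mirrored. Note that reads are load-balanced across the disks that hold primary and mirror AUs, whereas a write must go to every copy on disk. The columns of X$KFFXP are as follows: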

| X$KFFXP Column Name | Description |
|---|---|
| ADDR | x$ table address/identifier |
| INDX | row unique identifier |
| INST_ID | instance number (RAC) |
| NUMBER_KFFXP | ASM file number. Join with v$asm_file and v$asm_alias |
| COMPOUND_KFFXP | File identifier. Join with compound_index in v$asm_file |
| INCARN_KFFXP | File incarnation id. Join with incarnation in v$asm_file |
| PXN_KFFXP | Progressive file extent number |
| XNUM_KFFXP | ASM file extent number (mirrored extent pairs have the same extent value) |
| GROUP_KFFXP | ASM disk group number. Join with v$asm_disk and v$asm_diskgroup |
| DISK_KFFXP | Disk number where the extent is allocated. Join with v$asm_disk |
| AU_KFFXP | Relative position of the allocation unit from the beginning of the disk. The allocation unit size (e.g. 1 MB) is in v$asm_diskgroup |
| LXN_KFFXP | 0->primary extent, 1->mirror extent, 2->2nd mirror copy (high redundancy and metadata) |
| FLAGS_KFFXP | N.K. (not known) |
| CHK_KFFXP | N.K. (not known) |
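Rows from X$KFFXP can be regrouped into a per-extent view of primary and mirror copies. The sketch assumes the rows were fetched for a single file, for example with `select xnum_kffxp, lxn_kffxp, disk_kffxp, au_kffxp from x$kffxp where group_kffxp = :g and number_kffxp = :f`. The helper names are hypothetical, and the sample rows used to exercise them are made up.

```python
from collections import defaultdict

# LXN_KFFXP values as documented in the table above
ROLE = {0: "primary", 1: "mirror", 2: "2nd mirror"}


def build_extent_map(rows):
    """rows: (XNUM_KFFXP, LXN_KFFXP, DISK_KFFXP, AU_KFFXP) tuples for ONE file.
    Returns {extent number: {role: (disk number, AU number)}}."""
    emap = defaultdict(dict)
    for xnum, lxn, disk, au in rows:
        emap[xnum][ROLE[lxn]] = (disk, au)
    return dict(emap)


def copies_on_distinct_disks(emap) -> bool:
    """Every copy of an extent should sit on a different disk."""
    return all(len({disk for disk, _ in copies.values()}) == len(copies)
               for copies in emap.values())
```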

X$KFDAT contains the details of every allocation unit (AU), whether FREE or USED.

| X$KFDAT Column Name | Description |
|---|---|
| ADDR | x$ table address/identifier |
| INDX | row unique identifier |
| INST_ID | instance number (RAC) |
| GROUP_KFDAT | disk group number, join with v$asm_diskgroup |
| NUMBER_KFDAT | disk number, join with v$asm_disk |
| COMPOUND_KFDAT | disk compound_index, join with v$asm_disk |
| AUNUM_KFDAT | Disk allocation unit (relative position from the beginning of the disk), join with x$kffxp.au_kffxp |
| V_KFDAT | V = this allocation unit is used; F = the AU is free |
| FNUM_KFDAT | file number, join with v$asm_file |
| I_KFDAT | N.K. (not known) |
| XNUM_KFDAT | Progressive file extent number, join with x$kffxp.pxn_kffxp |
| RAW_KFDAT | raw format encoding of the disk and file extent information |
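X$KFDAT rows can be summarised into used and free AU counts per disk, which shows whether the disks of a group are equally full. The rows could come, for example, from `select number_kfdat, v_kfdat from x$kfdat where group_kfdat = 3`. The function names are hypothetical.

```python
def au_usage(rows):
    """rows: (NUMBER_KFDAT, V_KFDAT) pairs. Returns {disk: (used AUs, free AUs)}."""
    usage = {}
    for disk, flag in rows:
        used, free = usage.get(disk, (0, 0))
        if flag == "V":
            used += 1
        elif flag == "F":
            free += 1
        else:
            raise ValueError(f"unexpected V_KFDAT value {flag!r}")
        usage[disk] = (used, free)
    return usage


def fullness(usage):
    """Used fraction per disk; ASM aims to keep these roughly equal."""
    return {disk: used / (used + free) for disk, (used, free) in usage.items()}
```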

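X$KFDPARTNER contains the disk-to-partner (1-N) mapping. Within a given ASM disk group, two disks are considered partners if they hold mirror copies of the same extent. Partners must therefore belong to different failure groups of the same disk group.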

| X$KFDPARTNER Column Name | Description |
|---|---|
| ADDR | x$ table address/identifier |
| INDX | row unique identifier |
| INST_ID | instance number (RAC) |
| GRP | disk group number, join with v$asm_diskgroup |
| DISK | disk number, join with v$asm_disk |
| COMPOUND | disk identifier. Join with compound_index in v$asm_disk |
| NUMBER_KFDPARTNER | partner disk number, i.e. disk-to-partner (1-N) relationship |
| MIRROR_KFDPARTNER | =1 in a healthy normal redundancy configuration |
| PARITY_KFDPARTNER | =1 in a healthy normal redundancy configuration |
| ACTIVE_KFDPARTNER | =1 in a healthy normal redundancy configuration |

Practical techniques for studying ASM

1) Find the mirror extents of an ASM file. The example uses the ASM spfile

```
[grid@localhost ~]$ sqlplus / as sysasm

SQL*Plus: Release 11.2.0.3.0 Production on Wed Feb 13 11:13:39 2013

Copyright (c) 1982, 2011, Oracle. All rights reserved.

Connected to:
Oracle Database 11g Enterprise Edition Release 11.2.0.3.0 - 64bit Production
With the Automatic Storage Management option

INSTANCE_NAME
----------------
+ASM

SQL>
SQL> show parameter spfile

NAME                                 TYPE        VALUE
------------------------------------ ----------- ------------------------------
spfile                               string      +SYSTEMDG/asm/asmparameterfile
                                                 /registry.253.805993079

select GROUP_KFFXP, DISK_KFFXP, AU_KFFXP
  from x$kffxp
 where number_kffxp =
       (select file_number
          from v$asm_alias
         where name = 'REGISTRY.253.805993079');

GROUP_KFFXP DISK_KFFXP   AU_KFFXP
----------- ---------- ----------
          3          2         38
          3          1         39
          3          0         44
```

The same AUs can also be located through X$KFDAT:

```
select GROUP_KFDAT, NUMBER_KFDAT, AUNUM_KFDAT
  from x$kfdat
 where fnum_kfdat = (select file_number
                       from v$asm_alias
                      where name = 'REGISTRY.253.805993079');

GROUP_KFDAT NUMBER_KFDAT AUNUM_KFDAT
----------- ------------ -----------
          3            0          44
          3            1          39
          3            2          38
```

==> Find the paths of these disks:

```
SQL> select path,DISK_NUMBER from v$asm_disk where GROUP_NUMBER=3 and disk_number in (0,1,2);

PATH                 DISK_NUMBER
-------------------- -----------
/dev/asm-diski                 2
/dev/asm-diskh                 1
/dev/asm-diskg                 0
```

```
SQL> create pfile='/home/grid/pfile' from spfile;

File created.

SQL> Disconnected from Oracle Database 11g Enterprise Edition Release 11.2.0.3.0 - 64bit Production
With the Automatic Storage Management option
[grid@localhost ~]$ cat pfile
+ASM.asm_diskgroups='EXTDG','NORDG'#Manual Mount
*.asm_diskstring='/dev/asm*'
*.asm_power_limit=1
*.diagnostic_dest='/g01/app/grid'
*.instance_type='asm'
*.large_pool_size=12M
*.local_listener='LISTENER_+ASM'
*.remote_login_passwordfile='EXCLUSIVE'
```

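Read each AU with dd. The AU size in this disk group is 1 MB, so with bs=1024k a skip equal to the AU number lands exactly on the AU boundary, and count=1 copies one AU: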

```
[grid@localhost ~]$ dd if=/dev/asm-diski of=/tmp/spfile.dmp skip=38 bs=1024k count=1
1+0 records in
1+0 records out
1048576 bytes (1.0 MB) copied, 0.00328614 seconds, 319 MB/s
[grid@localhost ~]$ strings /tmp/spfile.dmp
+ASM.asm_diskgroups='EXTDG','NORDG'#Manual Mount
*.asm_diskstring='/dev/asm*'
*.asm_power_limit=1
*.diagnostic_dest='/g01/app/grid'
*.instance_type='asm'
*.large_pool_size=12M
*.local_listener='LISTENER_+ASM'
*.remote_login_passwordfile='EXCLUSIVE'
[grid@localhost ~]$ dd if=/dev/asm-diskh of=/tmp/spfile1.dmp skip=39 bs=1024k count=1
1+0 records in
1+0 records out
1048576 bytes (1.0 MB) copied, 0.0325114 seconds, 32.3 MB/s
[grid@localhost ~]$ strings /tmp/spfile1.dmp
+ASM.asm_diskgroups='EXTDG','NORDG'#Manual Mount
*.asm_diskstring='/dev/asm*'
*.asm_power_limit=1
*.diagnostic_dest='/g01/app/grid'
*.instance_type='asm'
*.large_pool_size=12M
*.local_listener='LISTENER_+ASM'
*.remote_login_passwordfile='EXCLUSIVE'
[grid@localhost ~]$ dd if=/dev/asm-diskg of=/tmp/spfile2.dmp skip=44 bs=1024k count=1
1+0 records in
1+0 records out
1048576 bytes (1.0 MB) copied, 0.0298287 seconds, 35.2 MB/s
[grid@localhost ~]$ strings /tmp/spfile2.dmp
+ASM.asm_diskgroups='EXTDG','NORDG'#Manual Mount
*.asm_diskstring='/dev/asm*'
*.asm_power_limit=1
*.diagnostic_dest='/g01/app/grid'
*.instance_type='asm'
*.large_pool_size=12M
*.local_listener='LISTENER_+ASM'
*.remote_login_passwordfile='EXCLUSIVE'
```
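The dd and strings steps above can be reproduced in Python, which is handy if you want to script the check. In this minimal sketch, `read_au` seeks to AU number × AU size and reads one AU. `printable_strings` is only a rough stand-in for the `strings` utility. Reading a real ASM device needs the same OS privileges as dd. The demo therefore runs against a scratch file with tiny 64-byte "AUs" instead of /dev/asm-diski.

```python
from __future__ import annotations

import os
import re
import tempfile

AU_SIZE = 1024 * 1024  # the disk group in this example uses 1 MB AUs


def read_au(path: str, au_number: int, au_size: int = AU_SIZE) -> bytes:
    """Equivalent of `dd if=path bs=au_size skip=au_number count=1`."""
    with open(path, "rb") as f:
        f.seek(au_number * au_size)
        return f.read(au_size)


def printable_strings(data: bytes, min_len: int = 4) -> list[str]:
    """Rough equivalent of `strings`: runs of at least min_len printable ASCII bytes."""
    pattern = rb"[\x20-\x7e]{%d,}" % min_len
    return [m.decode("ascii") for m in re.findall(pattern, data)]


if __name__ == "__main__":
    # Demo on a scratch file standing in for an ASM disk (tiny 64-byte "AUs").
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(b"\0" * 128 + b"*.asm_power_limit=1".ljust(64, b"\0"))
    try:
        print(printable_strings(read_au(tmp.name, 2, au_size=64)))
    finally:
        os.remove(tmp.name)
```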

2) Show the mapping between ASM disk failure groups and disk partners:

```
  1* select DISK_NUMBER,FAILGROUP,path from v$asm_disk where group_number=3
SQL> /

DISK_NUMBER FAILGROUP                      PATH
----------- ------------------------------ --------------------
          3 SYSTEMDG_0003                  /dev/asm-diskj
          2 SYSTEMDG_0002                  /dev/asm-diski
          1 SYSTEMDG_0001                  /dev/asm-diskh
          0 SYSTEMDG_0000                  /dev/asm-diskg

SQL> select disk,NUMBER_KFDPARTNER,DISKFGNUM from X$KFDPARTNER where grp=3;

      DISK NUMBER_KFDPARTNER  DISKFGNUM
---------- ----------------- ----------
         0                 1          1
         0                 2          1
         0                 3          1
         1                 0          2
         1                 2          2
         1                 3          2
         2                 0          3
         2                 1          3
         2                 3          3
         3                 0          4
         3                 1          4
         3                 2          4

12 rows selected.
```
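The two query results above can be cross-checked mechanically. The sketch copies the failure group and partner data from the output for disk group 3. It then flags three kinds of problem:

- partnerships whose disks share a failure group
- partnerships without a reverse entry (partnership is assumed to be symmetric, following the definition given earlier)
- disks with 10 or more partners

The function name and the checks are illustrative, not an Oracle tool.

```python
from collections import Counter

# Data copied from the two queries above (disk group 3).
FAILGROUP = {0: "SYSTEMDG_0000", 1: "SYSTEMDG_0001", 2: "SYSTEMDG_0002", 3: "SYSTEMDG_0003"}
PARTNERS = [(0, 1), (0, 2), (0, 3), (1, 0), (1, 2), (1, 3),
            (2, 0), (2, 1), (2, 3), (3, 0), (3, 1), (3, 2)]


def partner_problems(failgroup, rows, max_partners=10):
    """Flag partnerships that break the rules described in this article."""
    problems = []
    pairs = set(rows)
    for disk, partner in rows:
        if failgroup[disk] == failgroup[partner]:
            problems.append(f"disks {disk} and {partner} share failure group {failgroup[disk]}")
        if (partner, disk) not in pairs:
            problems.append(f"{disk}->{partner} has no reverse entry")
    for disk, n in Counter(d for d, _ in rows).items():
        if n >= max_partners:
            problems.append(f"disk {disk} has {n} partners")
    return problems


if __name__ == "__main__":
    print(partner_problems(FAILGROUP, PARTNERS) or "partnerships look consistent")
```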

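ASM FAQ:

Q: What is the basic unit of ASM rebalancing and mirroring?

A: The basic unit of ASM mirroring is the file extent. By default one extent equals one AU, and from 11g onward an extent can be 1, 8, or 64 AUs.

The basic unit of ASM rebalancing is also the extent, although the rebalance itself is carried out one AU at a time.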

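Q: What is the relationship between ASMLIB and ASM?

A: ASMLIB is a kernel support library, implemented as a Linux kernel module, designed specifically for the Oracle Automatic Storage Management feature.

Simply put, ASMLIB is a Linux package and is not part of the Oracle ASM kernel. ASMLIB can bind device names so that ASM can use the devices easily. However, many other Linux services can do the same, such as udev and multipath (mpath).

So ASMLIB is not a required component of ASM. Most Chinese-language articles explain this poorly, which has spread the misconception that ASMLIB equals ASM, or that ASM requires ASMLIB. This is simply a myth that has been repeated until it stuck.

For the drawbacks of ASMLIB, see my article "Why ASMLIB and why not?".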

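Q: Is ASM RAID 10 or RAID 01?

A: ASM mirroring is based on file extents, not on whole disks or blocks as in RAID. So ASM is neither RAID 10 nor RAID 01. If you insist on a similarity, ASM mirrors first and then stripes, so in that respect it is closer to RAID 10. But note, once more: ASM is neither RAID 10 nor RAID 01, no matter how many times this has to be repeated.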

To be continued... 😎