诗檀软件 Biot shares "Best Practices for Installing Oracle Database 11g Release 2 RAC on Oracle Linux 5.7 Using VirtualBox". Download link:


GC FREELIST wait event (freelist empty)

kclevrpg Lock element event number is the name hash bucket the LE belongs to.

kclnfndnew – Find an le for a given name

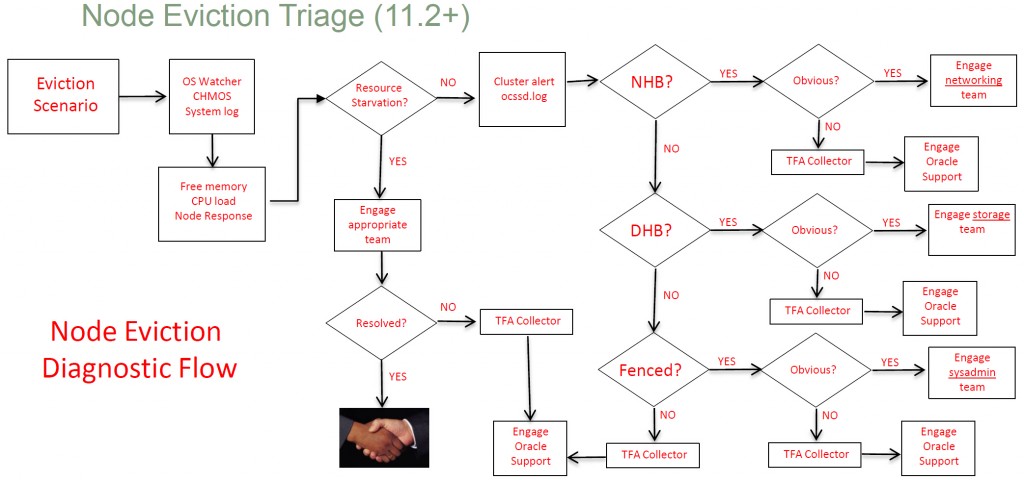

Oracle RAC unexpected node reboot (Node Eviction) diagnostic flowchart

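Top 5 issues that cause instance evictions (Doc ID 1526186.1)

Oracle Database – Enterprise Edition – Version 10.2.0.1 to 11.2.0.3 [Release 10.2 to 11.2]

Information in this document applies to any platform.

This document gives DBAs a quick overview of the main issues that cause instance evictions.

Audience: DBAs

The instance crashes, and the alert log shows the error "ORA-29740: evicted by member …".

Check the LMON trace files of all instances. This is essential for finding the reason code of the instance eviction. Look for lines containing "kjxgrrcfgchk:Initiating reconfig".

Such a line gives the reason code, for example "kjxgrrcfgchk:Initiating reconfig, reason 3". Most ORA-29740 errors raised during an instance eviction are caused by reason 3 ("Communications Failure").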
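The following is a minimal Python sketch of that search through an LMON trace. It assumes the trace lines look like the example above, with or without a space after the colon. The function name `reconfig_reasons` is hypothetical. Only reason 3 is named in this note, so the sketch points every other code to Document 219361.1 rather than guessing its meaning.

```python
import re

# Matches "kjxgrrcfgchk:Initiating reconfig, reason 3", tolerating a space after the colon.
RECONFIG_RE = re.compile(r"kjxgrrcfgchk:\s*Initiating reconfig, reason (\d+)")

# Only reason 3 is described in this note; other codes are deliberately not decoded.
KNOWN_REASONS = {3: "Communications Failure"}


def reconfig_reasons(lines):
    """Return (reason_code, description) for every reconfig line in an LMON trace."""
    results = []
    for line in lines:
        match = RECONFIG_RE.search(line)
        if match:
            code = int(match.group(1))
            results.append((code, KNOWN_REASONS.get(code, "see Document 219361.1")))
    return results


if __name__ == "__main__":
    print(reconfig_reasons(["kjxgrrcfgchk:Initiating reconfig, reason 3"]))
```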

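Document 219361.1 (Troubleshooting ORA-29740 in a RAC Environment) lists the following possible causes of ORA-29740 with reason 3:

a) Network problems.

b) Resource exhaustion (CPU, I/O, etc.).

c) Severe database contention.

d) Oracle bugs.

When the instance is evicted, the alert log shows many "IPC send timeout" errors. This message usually comes with database performance problems.

LMON, LMS and LMD can also report "IPC send timeout" errors because of a network problem or a server resource (CPU and memory) problem. In that case these processes may not get scheduled on a CPU, or the network packets they send may be lost.

Communication problems involving the LMON, LMD and LMS processes cause the instance eviction. The alert log of the evicted instance shows messages similar to the following: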

IPC Send timeout detected.Sender: ospid 1519

Receiver: inst 8 binc 997466802 ospid 23309

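If an instance is evicted, the "IPC Send timeout detected" message in the alert log usually comes with other messages, such as ORA-29740 and "Waiting for clusterware split-brain resolution".

1) Check the network and make sure there are no network errors, such as UDP errors, IP packet loss, or failure errors.

2) Check the network configuration and make sure the network settings are correct on all nodes.

For example, the MTU size must be the same on all nodes. If jumbo frames are used, the switch must also support an MTU of 9000.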
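The following is a small Python sketch of the MTU rule above. It assumes you have already collected the private-interface MTU of each node (for example from `ip link` output) and the switch's maximum MTU. Collecting those values is not shown. The function name and the node names are hypothetical, and the function only compares the numbers.

```python
def check_mtu(node_mtus, switch_max_mtu, jumbo_mtu=9000):
    """Return a list of problems found in the interconnect MTU settings.

    node_mtus: mapping of node name -> MTU configured on its private interface.
    switch_max_mtu: largest MTU the interconnect switch accepts.
    """
    problems = []
    distinct = set(node_mtus.values())
    # Rule 1: every node must use the same MTU.
    if len(distinct) > 1:
        problems.append(f"MTU differs across nodes: {sorted(distinct)}")
    # Rule 2: jumbo frames only work if the switch passes frames of that size.
    if any(mtu >= jumbo_mtu for mtu in distinct) and switch_max_mtu < jumbo_mtu:
        problems.append(f"jumbo frames configured but switch max MTU is {switch_max_mtu}")
    return problems


if __name__ == "__main__":
    print(check_mtu({"rac1": 9000, "rac2": 9000}, switch_max_mtu=1500))
```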

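3) Check whether the servers have CPU load problems or are short of free memory.

4) Check whether the database was hung or had severe performance problems before the instance eviction.

5) Check the CHM (Cluster Health Monitor) output. It shows whether the servers had CPU or memory load problems or network problems, and whether the LMD or LMS processes were spinning. CHM output is available only on certain platforms and releases, so see the CHM FAQ, Document 1328466.1.

6) If OSWatcher is not set up yet, set it up and run it by following the instructions in Document 301137.1.

OSWatcher output is helpful when CHM output is not available.

The instance or database was hung before the instance crash or eviction. The node itself may also have been hung.

In the alert log of the instance that evicts the other instances, you may see messages similar to the following: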

Remote instance kill is issued [112:1]:8

or

Evicting instance 2 from cluster

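Before one or more instances crash, the alert log shows "Waiting for clusterware split-brain resolution". This message is usually followed by "Evicting instance n from cluster", where n is the number of the instance being evicted.

Common causes are:

1) Instance-level split-brain is usually caused by a network problem, so it is important to check the network settings and connectivity. However, CRS (the clusterware) would fail if the network were down. As long as CRS and the database use the same network, the network is therefore unlikely to be completely down.

2) The server is very busy and/or free memory is low (heavy swapping and memory scanning), which prevents the LMON process from being scheduled.

3) The database or instance is hung, and the LMON process is blocked.

4) Oracle bugs.

These causes are similar to those of the ORA-29740 case described above, where the alert log shows ORA-29740 as the reason for the instance crash or eviction.

1) Check the network and make sure there are no network errors, such as UDP errors, IP packet loss, or failure errors.

2) Check the network configuration and make sure the network settings are correct on all nodes.

For example, the MTU size must be the same on all nodes. If jumbo frames are used, the switch must also support an MTU of 9000.

3) Check whether the servers have CPU load problems or are short of free memory.

4) Check whether the database was hung or had severe performance problems before the instance eviction.

5) Check the CHM (Cluster Health Monitor) output. It shows whether the servers had CPU or memory load problems or network problems, and whether the LMD or LMS processes were spinning. CHM output is available only on certain platforms and releases, so see the CHM FAQ, Document 1328466.1.

6) If OSWatcher is not set up yet, set it up and run it by following the instructions in Document 301137.1.

OSWatcher output is helpful when CHM output is not available.

When one instance evicts another, all instances wait until the problem instance shuts itself down. If the problem instance cannot terminate itself for some reason, the instance that initiated the eviction issues a Member Kill request. This request asks CRS to terminate the problem instance. The feature is available in 11.1 and later.

For example, the message "Remote instance kill is issued [112:1]:8" shown above means that a Member Kill request to terminate instance 8 has been sent to CRS.

The problem instance is hung and unresponsive for some reason. This can happen when the node has CPU and memory problems and the processes of the problem instance cannot get scheduled on a CPU.

A second common cause is severe database resource contention. The contention prevents the problem instance from completing the remote instance's request to evict it.

Another possible cause is that one or more processes "survive" when the instance tries to abort itself. CRS does not consider the instance terminated until all of its processes have terminated. Until then, it does not tell the other instances that the problem instance is gone. A common problem in this situation is that one or more processes become zombies and never terminate.

This in turn makes CRS restart, either through a node reboot or through a rebootless restart (CRS restarts but the node does not reboot). In this case, the alert log of the problem instance shows: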

Instance termination failed to kill one or more processes

Instance terminated by LMON, pid = 23305


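1) Find out why the database or instance hung. When troubleshooting a database or instance hang, the key is to obtain a global systemstate dump and a global hanganalyze dump. If you cannot obtain a global systemstate dump, obtain local systemstate dumps of all instances at approximately the same time.

2) Check the CHM (Cluster Health Monitor) output. It shows whether the servers had CPU or memory load problems or network problems, and whether the LMD or LMS processes were spinning. CHM output is available only on certain platforms and releases, so see the CHM FAQ, Document 1328466.1.

3) If OSWatcher is not set up yet, set it up and run it by following the instructions in Document 301137.1.

OSWatcher output is helpful when CHM output is not available.

[Repost] Top 5 issues that cause SCAN VIP and SCAN listener failures (Doc ID 1602038.1)

Oracle Database – Enterprise Edition – Version 11.2.0.1 to 11.2.0.3 [Release 11.2]

Information in this document applies to any platform.

This note briefly summarizes the most common issues that cause SCAN VIP and SCAN listener failures.

Audience: all users experiencing SCAN problems

On one of the nodes, the SCAN VIP shows the state "UNKNOWN" with "CHECK TIMED OUT".

The other two SCAN VIPs are running on another node and show the state "ONLINE".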

crsctl stat res -t

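--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------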

ora.scan1.vip 1 ONLINE UNKNOWN rac2 CHECK TIMED OUT

ora.scan2.vip 1 ONLINE ONLINE rac1

ora.scan3.vip 1 ONLINE ONLINE rac1

SCAN VIP is a new feature of 11.2 Clusterware.

After installation, verify the SCAN configuration and status:

– crsctl status resource -w 'TYPE = ora.scan_vip.type' -t

must show all 3 SCAN VIPs ONLINE:

ora.scan1.vip 1 ONLINE ONLINE rac2

ora.scan2.vip 1 ONLINE ONLINE rac1

ora.scan3.vip 1 ONLINE ONLINE rac1

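– crsctl status resource -w 'TYPE = ora.scan_listener.type' -t

should show LISTENER_SCAN<x> ONLINE

– srvctl config scan / srvctl config scan_listener

shows the SCAN and SCAN listener configuration: SCAN name, network, all SCAN VIPs (name and IP), and port

– cluvfy comp scan

After a SCAN VIP and SCAN listener failover, the instance does not register with the SCAN listener. This happens on only one of the SCAN listeners. Client connections intermittently fail with "ORA-12514 TNS:listener does not currently know of service requested in connect descriptor".

1. Unpublished Bug 12659561: after a SCAN listener failover, database instances may not register with the SCAN listener (see Note 12659561.8). The bug is fixed in 11.2.0.3.2. For 11.2.0.2, Merge patch 13354057 is available on specific platforms.

2. Unpublished Bug 13066936: the instance does not register services during a SCAN failover (see Note 13066936.8).

1) The workaround for both bugs is to unregister and then re-register remote_listener on the database instance that is not registered with the SCAN listener: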

show parameter remote_listener

alter system set remote_listener='';

alter system register;

alter system set remote_listener='<scan>:<port>';

alter system register;

2) Other points to check when services are not registered with the SCAN listener:

a. remote_listener and local_listener are defined correctly.

b. EZCONNECT is defined in sqlnet.ora, for example: NAMES.DIRECTORY_PATH= (TNSNAMES, EZCONNECT)

c. SCAN is defined in /etc/hosts or in DNS. If it is defined in both, check for any mismatch.

d. nslookup <scan> should return the SCAN VIPs in round-robin order.

e. Do not set SECURE_REGISTER_<listener> in listener.ora unless Class of Secure Transports (COST) is configured.
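The following Python sketch covers check d. It resolves a SCAN name with the standard library and checks that it returns three distinct addresses, the count this note expects to see ONLINE. The helper names are hypothetical. A single lookup cannot prove round-robin rotation: repeated lookups against DNS should rotate the order, while an /etc/hosts entry does not. The resolver is passed in as a parameter so the check can be run without a real DNS server.

```python
import socket


def scan_addresses(scan_name, resolver=socket.gethostbyname_ex):
    """Resolve a SCAN name and return its IPv4 addresses in the order received."""
    _hostname, _aliases, addresses = resolver(scan_name)
    return addresses


def check_scan(scan_name, expected=3, resolver=socket.gethostbyname_ex):
    """True if the SCAN name resolves to `expected` distinct addresses."""
    return len(set(scan_addresses(scan_name, resolver))) == expected


if __name__ == "__main__":
    # Hypothetical resolver standing in for DNS; the addresses are documentation-range examples.
    fake = lambda name: (name, [], ["192.0.2.11", "192.0.2.12", "192.0.2.13"])
    print(check_scan("rac-scan", resolver=fake))
```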

When the public network goes down, the SCAN VIP should fail over to the next node. In some 11.2.0.1 environments, the SCAN VIP may stay on the wrong node.


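Starting with 10.2.0.4, the VIP no longer relocates back to its original node automatically. Oracle developers found that a relocation back to the original node requires stopping the VIP and starting it again on that node. If the public network on the original node is still unavailable, the relocation attempt fails again, and the VIP fails over to the second node. The VIP is unavailable in the meantime, so starting with 10.2.0.4 and 11.1 the default instance check no longer relocates the VIP to the original node.

See the following note for details: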

Applies to:

Oracle Server – Enterprise Edition – Version 10.2.0.4 to 11.1.0.7 [Release 10.2 to 11.1]

Information in this document applies to any platform.

Symptoms

Starting from 10.2.0.4 and 11.1, VIP does not fail-over back to the original node even after the public network problem is resolved. This behavior is the default behavior in 10.2.0.4 and 11.1 and is different from that of 10.2.0.3

Cause

This is actually the default behavior in 10.2.0.4 and 11.1.

In 10.2.0.3, on every instance check, the instance attempted to relocate the VIP back to the preferred node (original node), but that required stopping the VIP and then attempting to restart the VIP on the original node. If the public network on the original node is still down, then the attempt to relocate VIP to the original node will fail and the VIP will fail-over back to the secondary node. During this time, the VIP is not available, so starting from 10.2.0.4 and 11.1, the default behavior is that the instance check will not attempt to relocate the VIP back to the original node.

Solution

If the default behavior of 10.2.0.4 and 11.1 is not desired and if there is a need to have the VIP relocate back to the original node automatically when the public network problem is resolved, use the following workaround

Uncomment the line

ORA_RACG_VIP_FAILBACK=1 && export ORA_RACG_VIP_FAILBACK

in the racgwrap script in $ORACLE_HOME/bin

With the above workaround, VIP will relocate back to the original node when CRS performs the instance check, so in order for the VIP to relocate automatically, the node must have at least one instance running.

The instance needs to be restarted or CRS needs to be restarted to have the VIP start relocating back to the original node automatically if the change is being made on the existing cluster.

Relying on automatic relocation of the VIP can take up to 10 minutes because the instance check is performed once every 10 minutes. Manually relocating the VIP is the only way to guarantee quick relocation of the VIP back to the original node.

To manually relocate the VIP, start the nodeapps by issuing

srvctl start nodeapps -n <node name>

Starting the nodeapps does not harm the online resources such as ons and gsd.

[Maclean Liu tech sharing] 12c 12.1.0.1 RAC (Real Application Clusters) installation tutorial video, based on VBox + Oracle Linux 5.7

Installation step script download:

Maclean技术分享 12c RAC 安装OEL 5.7 12.1.0.1 RAC VBox安装脚本.txt

Video link:


Permanent URL of this article: https://www.askmac.cn/archives/oracle-clusterware-11-2.html

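Oracle Clusterware changed a great deal in the transition to Oracle Database 11g Release 2 (11.2). CRSD was completely redesigned, a "local CRS" (OHASD) was introduced, and a tightly integrated agent layer replaced the RACG layer. New features were added, such as Grid Naming Service, Grid Plug and Play, Cluster Time Synchronization Service, and Grid IPC. Cluster Synchronization Services (CSS) is probably the component that changed least. Even so, it provides functionality that supports the new features, and it adds new features of its own, such as IPMI support.

With this technical paper, we want to pass on everything we have learned about 11.2 over the years to those who are just starting with Oracle 11.2 Clusterware. The paper gives a general overview, plus detailed information on the related diagnostics and debugging.

This is the first version of this Oracle Clusterware diagnostics paper, so it does not cover every detail of 11.2 Clusterware. If you can help improve or correct this document, please let us know.

This section introduces the main Oracle Clusterware daemons.

The figure below gives a high-level overview of the daemons, resources and agents used by Oracle Clusterware 11.2.

The first big difference between 11.2 and earlier releases is the OHASD daemon, which replaces all of the init scripts used before 11.2.

Oracle Clusterware consists of two separate stacks: the upper Cluster Ready Services daemon (CRSD) stack and the lower Oracle High Availability Services daemon (OHASD) stack. Each stack contains several processes that support cluster operations. The following sections describe them in detail.

OHASD is the daemon that starts all other daemons on a node. It replaces all of the init scripts that existed before 11.2.

The entry point for OHASD is /etc/inittab, which executes /etc/init.d/ohasd and /etc/init.d/init.ohasd. /etc/init.d/ohasd is an RC script containing the start and stop actions. /etc/init.d/init.ohasd is the OHASD framework control script, which spawns the Grid_home/bin/ohasd.bin executable.

The Clusterware control files are located in /etc/oracle/scls_scr/<hostname>/root (the Linux location) and are maintained by crsctl. In other words, a "crsctl enable / disable crs" command updates the files in this directory.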

| For example:

[root@rac1 root]# ls /etc/oracle/scls_scr/rac1/root

crsstart ohasdrun ohasdstr

# crsctl enable -h

Usage: crsctl enable crs

Enable OHAS autostart on this server

# crsctl disable -h

Usage: crsctl disable crs

Disable OHAS autostart on this server |

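The content of scls_scr/<hostname>/root/ohasdstr controls the autostart of the CRS stack. The file has two possible values: "enable", which enables autostart, and "disable", which disables it.

The scls_scr/<hostname>/root/ohasdrun file controls the init.ohasd script. It has three possible values: "reboot" (sync with OHASD), "restart" (restart a crashed OHASD), and "stop" (planned OHASD shutdown).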

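The biggest benefit of OHASD in Oracle 11.2 Clusterware is the ability to run certain crsctl commands in a clusterized way. These commands are completely operating-system independent because they rely only on OHASD. If OHASD is running, you can perform remote operations such as starting, stopping and checking the status of the stack on remote nodes.

Clusterized commands include: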

| [root@rac2 bin]# ./crsctl stop cluster

CRS-2673: Attempting to stop 'ora.crsd' on 'rac2'

CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on 'rac2'

CRS-2673: Attempting to stop 'ora.OCR_VOTEDISK.dg' on 'rac2'

CRS-2673: Attempting to stop 'ora.registry.acfs' on 'rac2'

...

[root@rac2 bin]# ./crsctl start cluster

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'rac2'

CRS-2676: Start of 'ora.cssdmonitor' on 'rac2' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'rac2'

CRS-2672: Attempting to start 'ora.diskmon' on 'rac2'

CRS-2676: Start of 'ora.diskmon' on 'rac2' succeeded

...

[root@rac2 bin]# ./crsctl check cluster

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online |

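OHASD performs other functions as well, such as handling and managing the Oracle Local Registry (OLR) and acting as the OLR server. In a cluster, OHASD runs as root. In an Oracle Restart environment, OHASD runs as the oracle user and manages application resources.

The Oracle 11.2 Clusterware stack is started by the OHASD daemon. OHASD itself is spawned by the /etc/init.d/init.ohasd script when a node boots. It can also be started on a node by running 'crsctl start crs' after a 'crsctl stop crs'. OHASD then starts the other daemons and agents. Each Clusterware daemon is represented by an OHASD resource stored in the OLR. The table below shows each OHASD resource (Clusterware daemon), its agent process and its owner.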

| Resource Name | Agent Name | Owner |
|---|---|---|
| ora.gipcd | oraagent | crs user |
| ora.gpnpd | oraagent | crs user |
| ora.mdnsd | oraagent | crs user |
| ora.cssd | cssdagent | root |
| ora.cssdmonitor | cssdmonitor | root |
| ora.diskmon | orarootagent | root |
| ora.ctssd | orarootagent | root |
| ora.evmd | oraagent | crs user |
| ora.crsd | orarootagent | root |
| ora.asm | oraagent | crs user |
| ora.drivers.acfs | orarootagent | root |
| ora.crf (new in 11.2.0.2) | orarootagent | root |

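The figure below shows all resource dependencies among the OHASD-managed resources and daemons:

A typical list of daemon resources on a node is shown below. To list the daemon resources, use crsctl with the -init flag.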

| [grid@rac1 admin]$ crsctl stat res -init -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.asm 1 ONLINE ONLINE rac1 Started

ora.cluster_interconnect.haip 1 ONLINE ONLINE rac1

ora.crf 1 ONLINE OFFLINE

ora.crsd 1 ONLINE ONLINE rac1

... |

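The list below shows the resource types in use and their hierarchy. Everything is built on the basic "resource" type. cluster_resource uses "resource" as its base type. ora.daemon.type uses cluster_resource as its base type, and all daemon resources use ora.daemon.type as their base type.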

| [grid@rac1 admin]$ crsctl stat type -init

TYPE_NAME=application BASE_TYPE=cluster_resource

TYPE_NAME=cluster_resource BASE_TYPE=resource

TYPE_NAME=generic_application BASE_TYPE=cluster_resource

TYPE_NAME=local_resource BASE_TYPE=resource

TYPE_NAME=ora.asm.type BASE_TYPE=ora.daemon.type

TYPE_NAME=ora.crf.type BASE_TYPE=ora.daemon.type

TYPE_NAME=ora.crs.type BASE_TYPE=ora.daemon.type

TYPE_NAME=ora.cssd.type BASE_TYPE=ora.daemon.type

TYPE_NAME=ora.cssdmonitor.type BASE_TYPE=ora.daemon.type

TYPE_NAME=ora.ctss.type BASE_TYPE=ora.daemon.type

TYPE_NAME=ora.daemon.type BASE_TYPE=cluster_resource

TYPE_NAME=ora.diskmon.type BASE_TYPE=ora.daemon.type

TYPE_NAME=ora.drivers.acfs.type BASE_TYPE=ora.daemon.type

TYPE_NAME=ora.evm.type BASE_TYPE=ora.daemon.type

TYPE_NAME=ora.gipc.type BASE_TYPE=ora.daemon.type

TYPE_NAME=ora.gpnp.type BASE_TYPE=ora.daemon.type

TYPE_NAME=ora.haip.type BASE_TYPE=cluster_resource

TYPE_NAME=ora.mdns.type BASE_TYPE=ora.daemon.type

TYPE_NAME=resource BASE_TYPE= |
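The following Python sketch turns the `crsctl stat type -init` listing above into a type-to-base-type mapping. It then follows the BASE_TYPE links to show the inheritance chain described in the text. It assumes one TYPE_NAME/BASE_TYPE pair per line, as printed above. The function names are hypothetical.

```python
def parse_types(text):
    """Turn 'TYPE_NAME=x BASE_TYPE=y' lines into a {type: base_type} mapping."""
    bases = {}
    for line in text.splitlines():
        fields = dict(f.split("=", 1) for f in line.split() if "=" in f)
        if "TYPE_NAME" in fields:
            bases[fields["TYPE_NAME"]] = fields.get("BASE_TYPE", "")
    return bases


def type_chain(type_name, bases):
    """Follow BASE_TYPE links from type_name up to the root 'resource' type."""
    chain = [type_name]
    # Stop at an empty BASE_TYPE (the root) and guard against accidental cycles.
    while bases.get(chain[-1]) and bases[chain[-1]] not in chain:
        chain.append(bases[chain[-1]])
    return chain


if __name__ == "__main__":
    listing = """TYPE_NAME=ora.cssd.type BASE_TYPE=ora.daemon.type
TYPE_NAME=ora.daemon.type BASE_TYPE=cluster_resource
TYPE_NAME=cluster_resource BASE_TYPE=resource
TYPE_NAME=resource BASE_TYPE="""
    print(" -> ".join(type_chain("ora.cssd.type", parse_types(listing))))
```

On that excerpt, the printed chain is `ora.cssd.type -> ora.daemon.type -> cluster_resource -> resource`.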

Taking the ora.cssd resource as an example, you can display all of its attributes with crsctl stat res ora.cssd -init -f. Only some of the more important attributes are listed below.

| [grid@rac1 admin]$ crsctl stat res ora.cssd -init -f

NAME=ora.cssd

TYPE=ora.cssd.type

STATE=ONLINE

TARGET=ONLINE

ACL=owner:root:rw-,pgrp:oinstall:rw-,other::r--,user:grid:r-x

AGENT_FILENAME=%CRS_HOME%/bin/cssdagent%CRS_EXE_SUFFIX%

CHECK_INTERVAL=30

CLEAN_ARGS=abort

CLEAN_COMMAND=

CREATION_SEED=6

CSSD_MODE=

CSSD_PATH=%CRS_HOME%/bin/ocssd%CRS_EXE_SUFFIX%

CSS_USER=grid

ID=ora.cssd

LOGGING_LEVEL=1

START_DEPENDENCIES=weak(concurrent:ora.diskmon)hard(ora.cssdmonitor,ora.gpnpd,ora.gipcd)pullup(ora.gpnpd,ora.gipcd)

STOP_DEPENDENCIES=hard(intermediate:ora.gipcd,shutdown:ora.diskmon,intermediate:ora.cssdmonitor) |

Always use the -init flag when debugging daemon resources. For example, to enable additional debugging for ora.cssd:

| [root@rac2 bin]# ./crsctl set log res ora.cssd:3 -init

Set Resource ora.cssd Log Level: 3 |

Check the log level:

| [root@rac2 bin]# ./crsctl get log res ora.cssd -init

Get Resource ora.cssd Log Level: 3 |

To check a resource attribute such as the log level:

| [root@rac2 bin]# ./crsctl stat res ora.cssd -init -f | grep LOGGING_LEVEL

DAEMON_LOGGING_LEVELS=CSSD=2,GIPCNM=2,GIPCGM=2,GIPCCM=2,CLSF=0,SKGFD=0,GPNP=1,OLR=0

LOGGING_LEVEL=3 |
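The following Python sketch parses `crsctl stat res <name> -init -f` output. It assumes one NAME=VALUE attribute per line, as crsctl prints it. It also splits the DAEMON_LOGGING_LEVELS string into per-module levels, so you can compare the resource-level LOGGING_LEVEL set above with the module levels. The function names are hypothetical.

```python
def parse_attributes(text):
    """Parse 'crsctl stat res <name> -init -f' style output (one NAME=VALUE per line)."""
    attrs = {}
    for line in text.splitlines():
        # Split only on the first '=' so values such as ACL strings stay intact.
        name, sep, value = line.strip().partition("=")
        if sep:
            attrs[name] = value
    return attrs


def parse_daemon_levels(value):
    """Split a DAEMON_LOGGING_LEVELS value (e.g. 'CSSD=2,GPNP=1') into {module: level}."""
    levels = {}
    for item in value.split(","):
        module, _, level = item.partition("=")
        if module:
            levels[module] = int(level)
    return levels


if __name__ == "__main__":
    output = ("DAEMON_LOGGING_LEVELS=CSSD=2,GIPCNM=2,GIPCGM=2,GIPCCM=2,CLSF=0,SKGFD=0,GPNP=1,OLR=0\n"
              "LOGGING_LEVEL=3")
    attrs = parse_attributes(output)
    print(attrs["LOGGING_LEVEL"], parse_daemon_levels(attrs["DAEMON_LOGGING_LEVELS"]))
```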

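Oracle 11.2 Clusterware introduces a new concept, agents, which make Oracle Clusterware more robust and better performing. Agents are multithreaded daemons that implement the entry points for multiple resource types and spawn new processes for different users. Agents are highly available. Besides oraagent, orarootagent and cssdagent/cssdmonitor, there can also be an application agent and a script agent.

The two main agents are oraagent and orarootagent. OHASD and CRSD each use one oraagent and one orarootagent. If the CRS user and the Oracle user are different, CRSD uses two oraagents and one orarootagent.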

ohasd’s oraagent:

crsd’s oraagent:

ohasd’s orarootagent:

crsd’s orarootagent:

See the section "cssdagent and cssdmonitor".

See the section "application and scriptagent".

The ohasd/crsd agent logs are located in Grid_home/log/<hostname>/agent/{ohasd|crsd}/<agentname>_<owner>/<agentname>_<owner>.log. For example, ora.crsd is managed by OHASD and owned by root, so the name of its agent log is:

Grid_home/log/<hostname>/agent/ohasd/orarootagent_root/orarootagent_root.log

| [grid@rac2 orarootagent_root]$ ls /u01/app/11.2.0/grid/log/rac2/agent/ohasd/orarootagent_root

orarootagent_root.log orarootagent_rootOUT.log orarootagent_root.pid |

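A single agent log can contain entries for different resources if those resources are managed by the same daemon.

If an agent process crashes:

- the core file is written to

Grid_home/log/<hostname>/agent/{ohasd|crsd}/<agentname>_<owner>

- the call stack is written to

Grid_home/log/<hostname>/agent/{ohasd|crsd}/<agentname>_<owner>/<agentname>_<owner>OUT.log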
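The following Python sketch builds the three agent locations described above from the grid home, host name, daemon, agent and owner. It only builds the path names and does not touch the file system. The function name is hypothetical, and the layout is taken directly from the path templates in the text.

```python
from pathlib import PurePosixPath


def agent_log_paths(grid_home, hostname, daemon, agent, owner):
    """Build the agent log, crash-stack (OUT) log and core-file directory for an agent.

    daemon is 'ohasd' or 'crsd'; agent/owner are e.g. 'orarootagent' / 'root'.
    """
    if daemon not in ("ohasd", "crsd"):
        raise ValueError("daemon must be 'ohasd' or 'crsd'")
    agent_dir = PurePosixPath(grid_home, "log", hostname, "agent", daemon, f"{agent}_{owner}")
    return {
        "log": agent_dir / f"{agent}_{owner}.log",
        "out_log": agent_dir / f"{agent}_{owner}OUT.log",  # call stack after a crash
        "core_dir": agent_dir,  # core files are written here
    }


if __name__ == "__main__":
    paths = agent_log_paths("/u01/app/11.2.0/grid", "rac2", "ohasd", "orarootagent", "root")
    print(paths["log"])
```

For the rac2 example above, this prints `/u01/app/11.2.0/grid/log/rac2/agent/ohasd/orarootagent_root/orarootagent_root.log`. That path matches the directory listing shown earlier.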

The agent log format is as follows:

<timestamp>:[<component>][<thread id>]…

<timestamp>:[<component>][<thread id>][<entry point>]…

For example:

| 2016-04-01 13:39:23.070: [ora.drivers.acfs][3027843984]{0:0:2} [check] execCmd ret = 0

[ clsdmc][3015236496]CLSDMC.C returnbuflen=8, extraDataBuf=A6, returnbuf=8D33FD8

2016-04-01 13:39:24.201: [ora.ctssd][3015236496]{0:0:213} [check] clsdmc_respget return: status=0, ecode=0, returnbuf=[0x8d33fd8], buflen=8

2016-04-01 13:39:24.201: [ora.ctssd][3015236496]{0:0:213} [check] translateReturnCodes, return = 0, state detail = OBSERVERCheckcb data [0x8d33fd8]: mode[0xa6] offset[343 ms]. |
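The following Python sketch splits one agent log line into the fields of the format above. It assumes the timestamp layout seen in the examples. It also accepts the `{a:b:c}` tag that appears in the examples, even though the format description does not mention it. Lines without a timestamp, such as the `[ clsdmc]` continuation line, return None. The names are hypothetical.

```python
import re

AGENT_LINE = re.compile(
    r"^(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3}): "
    r"\[(?P<component>[^\]]+)\]\[(?P<thread>\d+)\]"
    r"(?:\{(?P<ctx>[^}]*)\})?"        # optional {a:b:c} tag seen in the examples
    r"\s*(?:\[(?P<entry>[^\]]+)\])?"  # optional entry point such as [check]
    r"\s*(?P<message>.*)$"
)


def parse_agent_line(line):
    """Split one agent log line into its fields, or return None if it does not match."""
    match = AGENT_LINE.match(line)
    return match.groupdict() if match else None


if __name__ == "__main__":
    line = "2016-04-01 13:39:23.070: [ora.drivers.acfs][3027843984]{0:0:2} [check] execCmd ret = 0"
    fields = parse_agent_line(line)
    print(fields["component"], fields["entry"], fields["message"])
```

You can use the parsed fields to filter one resource's entries out of a shared agent log, which is the situation described next.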

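When an error occurs, start with the following files to find out what happened:

- the Clusterware alert log, Grid_home/log/<hostname>/alert<hostname>.log

for example: /u01/app/11.2.0/grid/log/rac2/alertrac2.log

- the OHASD/CRSD logs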

Grid_home/log/<hostname>/ohasd/ohasd.log

Grid_home/log/<hostname>/crsd/crsd.log

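- the corresponding agent log files

Remember that one agent log file contains the start/stop/check actions of multiple resources. Take the crsd orarootagent resource "ora.rac2.vip" as an example: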

| [root@rac2 orarootagent_root]# grep ora.rac2.vip orarootagent_root.log

...

2016-04-01 12:30:33.606: [ora.rac2.vip][3013606288]{2:57434:199} [check] Failed to check 192.168.1.102 on eth0

2016-04-01 12:30:33.607: [ora.rac2.vip][3013606288]{2:57434:199} [check] (null) category: 0, operation: , loc: , OS error: 0, other:

2016-04-01 12:30:33.607: [ora.rac2.vip][3013606288]{2:57434:199} [check] VipAgent::checkIp returned false

... |

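The CSS daemon (OCSSD) manages the cluster configuration, that is, which nodes are members of the cluster. It notifies the cluster members when a node joins or leaves.

ASM, database instances and the other Clusterware daemons all depend on a functioning CSS. If OCSSD cannot bootstrap for any reason, for example because no voting file information is found, none of the other layers can start.

OCSSD also monitors cluster health through the network heartbeat (NHB) and the disk heartbeat (DHB). The NHB is the primary indicator that a node is alive and can participate in the cluster. The DHB is used mainly to resolve split-brain.

The following part lists and explains the threads used by OCSSD.

– Cluster Listener Thread (CLT): tries to connect to all remote nodes at startup, receives and processes all incoming messages, and responds to connection requests from other nodes. Whenever a packet arrives from a node, the listener resets that node's missed-heartbeat statistics.

– Send Thread (ST): dedicated to sending a network heartbeat (NHB) to all nodes once per second. It also sends a local heartbeat (LHB) to cssdagent and cssdmonitor once per second using Grid IPC (GIPC).

– Polling Thread (PT): monitors the NHBs from remote nodes. Heartbeats are missed when the communication channel between CSS daemons fails. If a node misses too many heartbeats, it is suspected to be down or disconnected. The reconfiguration thread is then woken up, a reconfiguration takes place, and eventually a node is evicted.

– Reconfiguration Manager Thread (RMT): when woken up, the RMT looks at each node to see which nodes have missed NHBs for too long. It takes part in a voting process with the other CSS daemons. Once the new cluster membership has been determined, it writes eviction notices to the voting files. The RMT also sends shutdown messages to the evicted nodes. The voting files are checked for split-brain, and a remote node is not removed until its disk heartbeat has stopped for <misscount> seconds.

– Discovery thread: discovers the voting files.

– Fencing thread: communicates with the diskmon process for I/O fencing when Exadata is used.

Voting file cluster membership threads:

– Disk ping thread (one per voting file):

writes the current view of the cluster membership to the voting file, together with the number of nodes associated with it and an incrementing sequence number;

reads the eviction notices to see whether its host node has been evicted;

also monitors the disk heartbeat information of remote nodes. This information is used during reconfiguration to determine whether a remote OCSSD has terminated.

– Kill block thread (one per voting file): monitors voting file availability to make sure that enough voting files are accessible. If Oracle redundancy is used, a majority of the configured voting disks must be online.

– Worker thread (new in 11.2.0.1, one per voting file): performs various I/O operations on the voting file.

– Disk ping monitor: monitors the I/O status of the voting files.

This monitor thread makes sure that the disk ping threads are correctly reading the kill blocks on a majority of the configured voting files. If I/O to a voting file cannot be performed because of an I/O hang, an I/O failure or another reason, that voting file is taken offline. If CSS cannot read a majority of the voting files, it may no longer share at least one disk with every other node. This node could then miss an eviction notice. In other words, CSS can no longer cooperate with the other nodes and must be terminated.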
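The majority rule above can be checked with a little arithmetic, sketched below in Python. The function names are hypothetical. The point is a pigeonhole argument: two majorities of the same set of voting files always share at least one file. So two nodes that each read a majority are guaranteed to see a common kill block, and a node below majority loses that guarantee.

```python
def majority(voting_files):
    """Smallest number of voting files that is a strict majority of the configured files."""
    return voting_files // 2 + 1


def node_can_stay(accessible, voting_files):
    """A node must be able to access a majority of the voting files to stay in the cluster."""
    return accessible >= majority(voting_files)


def majorities_overlap(voting_files):
    """True if any two majorities must share at least one voting file (pigeonhole)."""
    return 2 * majority(voting_files) > voting_files


if __name__ == "__main__":
    for n in (1, 3, 4, 5):
        print(n, "files -> majority", majority(n), "-> tolerates", n - majority(n), "failures")
```

The loop also shows why odd counts are common. Four voting files need a majority of 3, so they tolerate only one failure, the same as three files.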

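– Node kill thread (transient): used to kill nodes through IPMI

– Member kill thread (transient): used during member kills

Member kill (monitor) thread

Local kill thread – when a CSS client initiates a member kill, the local CSS creates a local kill thread

– SKGXN monitor (skgxnmon, present only with vendor clusterware)

This thread registers as a member of the SKGXN node group to observe changes in node group membership. When a reconfiguration event occurs, the thread requests the current node group membership bitmap from SKGXN. It compares this bitmap with the one it received last time and with the current values of two other bitmaps. The first, eviction pending, identifies nodes that are being shut down. The second, VMON group membership, indicates the nodes whose oclsmon process is still running, that is, nodes that are still up. When a membership transition is confirmed, the node monitor thread initiates the appropriate action.

Oracle Clusterware 11g Release 2 (11.2) reduces configuration requirements. Nodes are added back automatically when they start, and they are deleted if they have been down for a long time. Servers that have been down for more than a week are no longer reported by olsnodes. These servers are managed automatically when they leave the cluster, so you do not need to delete them from the cluster explicitly.

Pinned nodes

The command that changes node pinning behavior (pinning or unpinning a specific node) is crsctl pin/unpin css. Pinning a node means that the association between the node name and the node number is fixed. If a node is not pinned, its node number may change when its lease expires. The lease of a pinned node never expires. Deleting a node with crsctl delete node implicitly unpins it.

– When Oracle Clusterware is upgraded, all servers are pinned. After a fresh installation of Oracle Clusterware 11g Release 2 (11.2), all servers you add to the cluster are unpinned.

– If a server on which 11.2 Clusterware is installed runs an instance of a release earlier than 11.2, you cannot unpin it.

Nodes must be pinned for a rolling upgrade to Oracle Clusterware 11g Release 2 (11.2), and this is done automatically. We have seen customers whose manual upgrades failed because the nodes were not pinned.

Port assignment

Fixed port assignments for CSS and the node monitor have been removed, so there should be no port contention with other applications. The only exception is a rolling upgrade, during which two fixed ports are assigned.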

GIPC

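The CSS layer uses the new communication layer, Grid IPC (GIPC), and still supports the CLSC communication layer used before 11.2. In 11.2.0.2, GIPC supports the use of multiple NICs for a single communication link, for example for CSS/NM communication.

Cluster alert log

Many messages have been added to cluster_alert.log to help locate problems faster. An identifier is printed both in the alert log and in the daemon log entries linked to the problem. The identifier is unique within a component, such as CSS or CRS.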

2009-11-24 03:46:21.110

[crsd(27731)]CRS-2757:Command 'Start' timed out waiting for response from the resource 'ora.stnsp006.vip'. Details at (:CRSPE00111:) in /scratch/grid_home_11.2/log/stnsp005/crsd/crsd.log.

2009-11-24 03:58:07.375

[cssd(27413)]CRS-1605:CSSD voting file is online: /dev/sdj2; details in /scratch/grid_home_11.2/log/stnsp005/cssd/ocssd.log.
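Because the identifier appears in both the alert log and the daemon log, the usual workflow is to grep the daemon log for it. The following Python sketch pulls the three useful pieces out of an alert message: the CRS message code, the optional `(:…:)` identifier, and the daemon log path. It assumes each message sits on one line, as in the excerpt above. The function name is hypothetical.

```python
import re

CODE_RE = re.compile(r"\b(CRS-\d{4}):")
IDENT_RE = re.compile(r"\(:([A-Z]+\d+):\)")
LOG_RE = re.compile(r"\bin\s+(/\S+\.log)")


def parse_alert_message(message):
    """Extract the CRS code, the cross-reference identifier (if any) and the daemon log path."""
    code = CODE_RE.search(message)
    ident = IDENT_RE.search(message)
    log = LOG_RE.search(message)
    return {
        "code": code.group(1) if code else None,
        "identifier": ident.group(1) if ident else None,
        "log": log.group(1) if log else None,
    }


if __name__ == "__main__":
    msg = ("[crsd(27731)]CRS-2757:Command 'Start' timed out waiting for response from the resource "
           "'ora.stnsp006.vip'. Details at (:CRSPE00111:) in /scratch/grid_home_11.2/log/stnsp005/crsd/crsd.log.")
    print(parse_alert_message(msg))
```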

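Exclusive mode

Cluster exclusive mode is a new concept in Oracle Clusterware 11g Release 2 (11.2). It lets you start the stack on one node without starting it anywhere else. Voting files are not required, and no network connectivity is needed. The mode is used for maintenance or troubleshooting. Because it is invoked explicitly by a user, only one node may be up at a time. To start the stack in exclusive mode, the root user runs crsctl start crs -excl on one node.

If another node in the cluster is already up, the exclusive-mode startup fails. OCSSD actively checks for other nodes. If it finds one already started, the startup fails with CRS-4402. This is not an error but the expected behavior when another node is already up. As 约翰·利思 put it, "when you receive CRS-4402, there is no error to report."

Voting file discovery

The way voting files are identified has changed in 11.2. In 11.1 and earlier, voting files were configured in the OCR. In 11.2, they are located through the CSS voting file discovery string in the GPnP profile. For example: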

CSS voting file discovery string referring to ASM

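The CSS voting file discovery string refers to ASM, so the value of the ASM discovery string is used. You will most commonly see this configuration on systems where raw devices can still be configured and are used by both CRS and ASM, for example Linux with older 2.6 kernels.

For example:

<orcl:CSS-Profile id="css" DiscoveryString="+asm" LeaseDuration="400"/>

<orcl:ASM-Profile id="asm" DiscoveryString="" SPFile=""/>

An empty ASM discovery string means that the OS-specific default is used. On Linux, this default is /dev/raw/raw*.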

CSS voting file discovery string referring to list of LUN’s/disks

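In the following example, the CSS voting file discovery string refers to a list of disks/LUNs. This may be the case when block devices are used, or when the devices are in a non-default location. Here the CSS voting file discovery string and the ASM discovery string have the same value.

<orcl:CSS-Profile id="css" DiscoveryString="/dev/shared/sdsk-a[123]-*-part8" LeaseDuration="400"/>

<orcl:ASM-Profile id="asm" DiscoveryString="/dev/shared/sdsk-a[123]-*-part8" SPFile=""/>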
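The following Python sketch shows how the two profile settings combine: "+asm" defers to the ASM string, and an empty ASM string falls back to the Linux raw-device default. Several details here are assumptions. It treats the discovery strings as shell-style glob patterns matched with `fnmatch`, which only approximates how Oracle expands them. Note that `fnmatch`'s `*` also matches `/`. It also assumes a comma-separated list of patterns. The device names in the usage line are hypothetical. A matching device still has to carry the voting file identifiers described next.

```python
from fnmatch import fnmatchcase

LINUX_RAW_DEFAULT = "/dev/raw/raw*"  # default when the ASM discovery string is empty (Linux)


def discovery_patterns(css_string, asm_string):
    """Resolve the effective discovery patterns used to look for voting files."""
    effective = asm_string if css_string.lower() == "+asm" else css_string
    if not effective:
        effective = LINUX_RAW_DEFAULT
    # Assumption: several patterns may be given as a comma-separated list.
    return [p.strip() for p in effective.split(",") if p.strip()]


def voting_file_candidates(devices, css_string, asm_string):
    """Devices matched by the discovery string (glob-style matching is an approximation)."""
    patterns = discovery_patterns(css_string, asm_string)
    return [d for d in devices if any(fnmatchcase(d, p) for p in patterns)]


if __name__ == "__main__":
    devices = ["/dev/shared/sdsk-a1-foo-part8", "/dev/shared/sdsk-a4-foo-part8", "/dev/raw/raw1"]
    print(voting_file_candidates(devices, "+asm", ""))
```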

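A disk is accepted as a voting disk only if several voting file identifiers are found on it: the file's unique identifier, the cluster GUID, and a matching configuration incarnation number (CIN). You can use vdpatch to check whether a device is a voting file.

Lease acquisition is the mechanism by which a node obtains its node number. A lease means that a node owns the associated node number for a period defined by the lease duration. The lease duration is hard-coded in the GPnP profile as one week. The lease is renewed on every DHB, and a node owns it for one lease duration counted from the last renewal. Lease expiry is therefore defined as follows:

lease expiry time = last DHB time + lease duration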
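The following Python sketch applies the expiry formula above with the standard `datetime` module, using the one-week lease duration stated in the text. The function names are hypothetical. The usage connects the formula to the earlier point that an unpinned node's number can change once its lease expires.

```python
from datetime import datetime, timedelta

LEASE_DURATION = timedelta(weeks=1)  # hard-coded lease duration described above


def lease_expiry(last_dhb_time, duration=LEASE_DURATION):
    """lease expiry time = last DHB time + lease duration."""
    return last_dhb_time + duration


def lease_expired(last_dhb_time, now, duration=LEASE_DURATION):
    """True once the lease has run out, i.e. an unpinned node's number may be reassigned."""
    return now > lease_expiry(last_dhb_time, duration)


if __name__ == "__main__":
    last_dhb = datetime(2009, 10, 21, 12, 0)
    print(lease_expiry(last_dhb))
```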

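There are two types of leases:

– Pinned leases

A node uses a hard-coded, static node number. Pinned leases are used in upgrade scenarios involving older Clusterware versions that use static node numbers.

– Unpinned leases

A node acquires its node number dynamically through the lease acquisition algorithm. The algorithm is designed to resolve conflicts between nodes that try to acquire the same slot at the same time.

A successful lease operation is recorded in Grid_home/log/<hostname>/alert<hostname>.log:

[cssd(8433)]CRS-1707:Lease acquisition for node staiv10 number 5 completed

For a lease failure, messages are written to alert<hostname>.log and ocssd.log. The current release has no tunables for leases.

The following sections describe the main components and techniques used to resolve split-brain.

Heartbeats

CSS uses two main heartbeat mechanisms for cluster membership: the network heartbeat (NHB) and the disk heartbeat (DHB). The two mechanisms are intentionally redundant and serve different purposes. The NHB detects loss of cluster connectivity, while the DHB is used mainly for split-brain resolution. Each cluster node must take part in the heartbeat protocols to be considered a healthy cluster member.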

Network Heartbeat (NHB)

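The NHB is sent over the private interconnect, which is the network interface configured as private during cluster installation. CSS sends an NHB every second from each node to all other nodes in the cluster, and receives one NHB per second from each remote node. The NHB is also sent to cssdmonitor and cssdagent.

The NHB contains timestamp information from the local node, which the remote node uses to determine when the NHB was sent. It shows that a node can take part in cluster activities such as group membership changes and message sending. If NHBs are missing for <misscount> seconds (30 seconds on Linux in 11.2), a cluster membership change (cluster reconfiguration) is required. If network connectivity is restored in less than <misscount> seconds, the interruption is not necessarily fatal.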
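The following toy Python model illustrates the rule above. It records the last NHB received from each node and flags nodes whose heartbeats have been missing for at least misscount seconds. It is a simplified illustration, not the real CSS logic. The class and method names are hypothetical, and the clock is injectable so the behavior can be replayed deterministically.

```python
import time


class PollingThreadModel:
    """Toy model of the NHB polling logic: flag nodes silent for >= misscount seconds."""

    def __init__(self, misscount=30.0, clock=time.monotonic):
        self.misscount = misscount
        self.clock = clock
        self.last_nhb = {}

    def receive_nhb(self, node):
        # Like the listener thread, reset the node's miss statistics when a packet arrives.
        self.last_nhb[node] = self.clock()

    def misstime(self, node):
        """Seconds since the last NHB from this node."""
        return self.clock() - self.last_nhb[node]

    def suspects(self):
        """Nodes whose silence would require a cluster reconfiguration."""
        return sorted(n for n in self.last_nhb if self.misstime(n) >= self.misscount)


if __name__ == "__main__":
    now = [0.0]
    model = PollingThreadModel(misscount=30, clock=lambda: now[0])
    model.receive_nhb("rac1")
    model.receive_nhb("rac2")
    now[0] = 20.0
    model.receive_nhb("rac1")  # rac1 keeps heart-beating, rac2 goes silent
    now[0] = 31.0
    print(model.suspects())
```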

To debug NHB problems, it is sometimes helpful to raise the OCSSD log level to 3 so that every heartbeat message is logged. Run the crsctl set log command as root on each node:

# crsctl set log css ocssd:3

Watch the biggest misstime value. If it keeps increasing, the problem is likely in the network.

| # tail -f ocssd.log | grep -i misstime

2009-10-22 06:06:07.275: [ ocssd][2840566672]clssnmPollingThread: node 2, stnsp006, ninfmisstime 270, misstime 270, skgxnbit 4, vcwmisstime 0, syncstage 0

2009-10-22 06:06:08.220: [ ocssd][2830076816]clssnmHBInfo: css timestmp 1256205968 220 slgtime 246596654 DTO 28030 (index=1) biggest misstime 220 NTO 28280

2009-10-22 06:06:08.277: [ ocssd][2840566672]clssnmPollingThread: node 2, stnsp006, ninfmisstime 280, misstime 280, skgxnbit 4, vcwmisstime 0, syncstage 0

2009-10-22 06:06:09.223: [ ocssd][2830076816]clssnmHBInfo: css timestmp 1256205969 223 slgtime 246597654 DTO 28030 (index=1) biggest misstime 1230 NTO 28290

2009-10-22 06:06:09.279: [ ocssd][2840566672]clssnmPollingThread: node 2, stnsp006, ninfmisstime 270, misstime 270, skgxnbit 4, vcwmisstime 0, syncstage 0

2009-10-22 06:06:10.226: [ ocssd][2830076816]clssnmHBInfo: css timestmp 1256205970 226 slgtime 246598654 DTO 28030 (index=1) biggest misstime 2785 NTO 28290 |
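The following Python sketch pulls the misstime values out of ocssd.log so you can check the trend automatically instead of by eye. It assumes the clssnmPollingThread and clssnmHBInfo line layouts shown above and reports the values as logged. The function names are hypothetical.

```python
import re

POLLING_RE = re.compile(r"clssnmPollingThread: node (\d+), (\S+), .*?\bmisstime (\d+)")
BIGGEST_RE = re.compile(r"biggest misstime (\d+)")


def polling_misstimes(log_text):
    """Yield (node number, node name, misstime) from clssnmPollingThread lines."""
    for m in POLLING_RE.finditer(log_text):
        yield int(m.group(1)), m.group(2), int(m.group(3))


def biggest_misstimes(log_text):
    """Return the 'biggest misstime' values from clssnmHBInfo lines, in log order."""
    return [int(v) for v in BIGGEST_RE.findall(log_text)]


def is_growing(values):
    """True if every value is larger than the one before it."""
    return all(b > a for a, b in zip(values, values[1:]))
```

On the excerpt above, `biggest_misstimes` returns `[220, 1230, 2785]`. That steadily growing sequence is the pattern the text tells you to look for.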

To display the current misscount setting, use crsctl get css misscount. Setting misscount to any value other than the default is not supported.

| [grid@rac2 ~]$ crsctl get css misscount

CRS-4678: Successful get misscount 30 for Cluster Synchronization Services. |

Disk Heartbeat (DHB)

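In addition to the NHB, CSS needs the DHB to resolve split-brain. The DHB contains a timestamp of the local UNIX time.

The DHB is the definitive mechanism for deciding whether a node is still alive. When the DHB has been missing for "too long", the node is assumed to be dead. When connectivity to a disk has been lost for "too long", the disk is considered offline.

What "too long" means for the DHB depends on the situation. The first timeout is the long disk I/O timeout (LIOT), with a default of 200 seconds. If I/O to a voting file cannot complete within this time, the voting file is taken offline. The second is the short disk I/O timeout (SIOT), which CSS uses during cluster reconfiguration. SIOT is derived from misscount: misscount (30) - reboottime (3) = 27 seconds, where the default reboottime is 3 seconds. To display the CSS disktimeout value, use crsctl get css disktimeout.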

| [grid@rac2 ~]$ crsctl get css disktimeout

CRS-4678: Successful get disktimeout 200 for Cluster Synchronization Services. |

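Network split detection

To determine whether a node is still alive, CSS compares the timestamp of the last NHB with the timestamp of the most recent DHB:

– When the delta between the timestamps of the most recent DHB and the last NHB is greater than SIOT (misscount - reboottime), the node is considered still active.

– When the delta between the timestamps is less than reboottime, the node is considered still alive.

– If the time since the last DHB read is greater than SIOT, the node is considered dead.

– If the delta between the timestamps is greater than reboottime but less than SIOT, the state of the node is ambiguous. CSS must then wait to make a decision until one of the three cases above applies.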
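The following Python sketch encodes the four rules above, with SIOT computed as misscount - reboottime (27 seconds with the defaults). The text lists the rules without stating a precedence. Checking "dead" first is this sketch's own assumption. Times are in seconds, and the function names are hypothetical.

```python
def siot(misscount=30, reboottime=3):
    """Short disk I/O timeout: misscount - reboottime."""
    return misscount - reboottime


def node_state(last_nhb, last_dhb, now, misscount=30, reboottime=3):
    """Classify a node as 'dead', 'alive' or 'ambiguous' from its last NHB/DHB times."""
    short_timeout = siot(misscount, reboottime)
    if now - last_dhb > short_timeout:
        return "dead"  # no DHB read for longer than SIOT (checked first: an assumption)
    delta = last_dhb - last_nhb  # how long the disk heartbeat outlived the network heartbeat
    if delta > short_timeout or delta < reboottime:
        return "alive"
    return "ambiguous"  # reboottime <= delta <= SIOT: wait until another rule applies


if __name__ == "__main__":
    print(siot(), node_state(last_nhb=0, last_dhb=10, now=11))
```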

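When the network fails and nodes that are still active cannot communicate with the other nodes, the network is considered split. To preserve data integrity when a split occurs, one side must fail, and the surviving nodes should form the optimal sub-cluster. Nodes are evicted in one of three possible ways:

– By sending an eviction message over the network. In most cases this fails because of the existing network failure.

– Through the voting files.

– Through IPMI, if it is supported and configured.

The following example, a cluster with nodes A, B, C and D, explains this in more detail: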

Nodes A and B receive each other’s heartbeats

Nodes C and D receive each other’s heartbeats

Nodes A and B cannot see heartbeats of C or D

Nodes C and D cannot see heartbeats of A or B

Nodes A and B are one cohort, C and D are another cohort

Split begins when 2 cohorts stop receiving NHB’s from each other

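CSS treats this as a symmetric failure. The A+B cohort stops receiving NHBs from the C+D cohort, and the C+D cohort stops receiving NHBs from the A+B cohort.

In this case, CSS uses the voting files and the DHB to resolve the split-brain. The kill block, part of the voting file structure, is updated and used to notify the nodes that have been evicted. Each node reads its kill block once per second and commits suicide when another node has updated it.

When the sub-clusters are of similar size, as above, the nodes in the sub-cluster containing the lowest node number survive, and the nodes in the other sub-cluster reboot.

When a larger cluster splits, the larger sub-cluster survives. In a two-node cluster, the node with the lower node number survives a network split, regardless of where the network error occurred.

A node must stay connected to a majority of the voting files to remain active.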
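The following Python sketch encodes the survival rule above: the larger cohort wins, and on a tie the cohort containing the lowest node number wins. It ignores the voting-file majority requirement and any other refinements CSS may apply. The node numbers in the usage line are hypothetical stand-ins for A, B, C and D, and the function name is hypothetical too.

```python
def surviving_cohort(cohorts):
    """Pick the sub-cluster that survives a network split.

    cohorts: list of sets of node numbers. Larger cohort wins; on a tie, the
    cohort containing the lowest node number wins.
    """
    return max(cohorts, key=lambda c: (len(c), -min(c)))


if __name__ == "__main__":
    # Hypothetical numbering: A=1, B=2, C=3, D=4.
    print(surviving_cohort([{3, 4}, {1, 2}]))
```

For the A+B versus C+D example, the sketch keeps {1, 2}. In a two-node split it keeps the lower-numbered node, as described above.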

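In 11.2.0.1, the kill daemon is an unprivileged process that kills members of CSS groups. It is spawned by OCSSD library code when an I/O client joins a group, and it is respawned when needed. There is one kill daemon (oclskd) per user, for example crsowner and oracle.

Member kill description

The following OCSSD threads take part in member kills: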

client_listener – receives group join and kill requests

peer_listener – receives kill requests from remote nodes

death_check – provides confirmation of termination

member_kill – spawned to manage a member kill request

local_kill – spawned to carry out member kills on local node

node termination – spawned to carry out escalation

Member kills are issued by clients who want to eliminate group members doing IO, for example:

LMON of the ASM instance

LMON of a database instance

crsd on Policy Engine (PE) master node (new in 11.2)

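A member kill always targets a remote member, either a remote ASM instance or a remote database instance. The member kill request is handed to the local OCSSD, which forwards it to the OCSSD on the target node.

In some cases, the remote node's kill daemon or remote OCSSD cannot serve the local OCSSD's member kill request within misscount seconds, so the request times out. This is possible in 11.2.0.1 and earlier under conditions such as extreme CPU and memory starvation. If LMON (ASM and/or RDBMS) requested the member kill, the local OCSSD escalates the request to a remote node kill. Member kill requests issued by CRSD are never escalated to node kills. Instead, we rely on the check action of orarootagent to detect a dysfunctional CRSD and restart it. The OCSSD on the target node receives the escalated member kill request and commits suicide, which forces a node reboot.

In 11.2.0.2, the kill daemon runs as a real-time thread in cssdagent/cssdmonitor, so kill requests have a better chance of succeeding despite high system load.

If IPMI is configured and functional, the OCSSD node monitor spawns a node termination thread that uses IPMI to shut down the remote node. The node termination thread communicates with the remote BMC over the management LAN. It establishes an authenticated session, since only privileged users can power off a node, and checks the power status. The next step is to request a power-off and check the status repeatedly until the node state is OFF. After receiving the OFF state, we power the remote node on again, and the node termination thread exits.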
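The following Python sketch follows the node termination sequence just described: open an authenticated session, check the power status, request power-off, poll until OFF, then power on again. The `bmc` object is hypothetical. It stands in for a real IPMI-over-LAN client such as one built on ipmitool, and its methods `open_session`, `power_status`, `power_off`, `power_on` and `close` are assumptions made for this illustration.

```python
import time


def ipmi_terminate(bmc, poll_interval=1.0, max_polls=30, sleep=time.sleep):
    """Sketch of the IPMI node termination sequence (bmc is a hypothetical client object)."""
    bmc.open_session()  # authenticated session: only privileged users may power off a node
    try:
        bmc.power_status()  # initial check of the power state
        bmc.power_off()
        for _ in range(max_polls):
            if bmc.power_status() == "OFF":
                break
            sleep(poll_interval)
        else:
            raise TimeoutError("node did not reach power state OFF")
        bmc.power_on()  # power the evicted node back on
    finally:
        bmc.close()
```

Passing `sleep` as a parameter lets you drive the loop with a fake BMC in a test without waiting in real time.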

Member kill example:

Because of CPU starvation, LMON of database instance 3 issues a member kill for the instance on node 2:

2009-10-21 12:22:03.613810 : kjxgrKillEM: schedule kill of inst 2 inc 20 in 20 sec

2009-10-21 12:22:03.613854 : kjxgrKillEM: total 1 kill(s) scheduled

kgxgnmkill: Memberkill called - group: DBPOMMI, bitmap:1

2009-10-21 12:22:22.151: [ CSSCLNT]clssgsmbrkill: Member kill request: Members map 0x00000002

2009-10-21 12:22:22.152: [ CSSCLNT]clssgsmbrkill: Success from kill call rc 0

The local OCSSD (third node, internal node number 2) receives the member kill request:

2009-10-21 12:22:22.151: [ ocssd][2996095904]clssgmExecuteClientRequest: Member kill request from client (0x8b054a8)

2009-10-21 12:22:22.151: [ ocssd][2996095904]clssgmReqMemberKill: Kill requested map 0x00000002 flags 0x2 escalate 0xffffffff

2009-10-21 12:22:22.152: [ ocssd][2712714144]clssgmMbrKillThread: Kill requested map 0x00000002 id 1 Group name DBPOMMI flags 0x00000001 start time 0x91794756 end time 0x91797442 time out 11500 req node 2

DBPOMMI is the database group in which LMON registers as the primary member.

time out = misscount (in milliseconds) + 500 ms

map = 0x2 = 0010 = second member = member 1 (other example: map = 0x7 = 0111 = members 0,1,2)
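The following Python sketch reproduces the two pieces of arithmetic above. It decodes a member map into member numbers, where bit i set means member i is targeted, and it computes the member kill timeout as misscount in milliseconds plus 500 ms. The function names are hypothetical.

```python
def members_in_map(bitmap):
    """Decode a CSS member map: bit i set means member i is a kill target."""
    members = []
    bit = 0
    while bitmap >> bit:
        if (bitmap >> bit) & 1:
            members.append(bit)
        bit += 1
    return members


def member_kill_timeout_ms(misscount_seconds):
    """time out = misscount (in milliseconds) + 500 ms."""
    return misscount_seconds * 1000 + 500


if __name__ == "__main__":
    print(members_in_map(0x2), members_in_map(0x7), member_kill_timeout_ms(30))
```

This prints `[1] [0, 1, 2] 30500`. By the same formula, the 11500 in the excerpt would correspond to an 11-second misscount on that test system. That is an inference from the formula, not something the log states.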

The remote OCSSD (second node, internal node number 1) receives the request and submits the PIDs to the kill daemon:

2009-10-21 12:22:22.201: [ ocssd][3799477152]clssgmmkLocalKillThread: Local kill requested: id 1 mbr map 0x00000002 Group name DBPOMMI flags 0x00000000 st time 1088320132 end time 1088331632 time out 11500 req node 2

2009-10-21 12:22:22.201: [ ocssd][3799477152]clssgmmkLocalKillThread: Kill requested for member 1 group (0xe88ceda0/DBPOMMI)

2009-10-21 12:22:22.201: [ ocssd][3799477152]clssgmUnreferenceMember: global grock DBPOMMI member 1 refcount is 7

2009-10-21 12:22:22.201: [ ocssd][3799477152]GM Diagnostics started for mbrnum/grockname: 1/DBPOMMI

2009-10-21 12:22:22.201: [ ocssd][3799477152]group DBPOMMI, member 1 (client 0xe330d5b0, pid 23929)

2009-10-21 12:22:22.201: [ ocssd][3799477152]group DBPOMMI, member 1 (client 0xe331fd68, pid 23973) sharing group DBPOMMI, member 1, share type normal

2009-10-21 12:22:22.201: [ ocssd][3799477152]group DG_LOCAL_POMMIDG, member 0 (client 0x89f7858, pid 23957) sharing group DBPOMMI, member 1, share type xmbr

2009-10-21 12:22:22.201: [ ocssd][3799477152]group DBPOMMI, member 1 (client 0x8a1e648, pid 23949) sharing group DBPOMMI, member 1, share type normal

2009-10-21 12:22:22.201: [ ocssd][3799477152]group DBPOMMI, member 1 (client 0x89e7ef0, pid 23951) sharing group DBPOMMI, member 1, share type normal

2009-10-21 12:22:22.202: [ ocssd][3799477152]group DBPOMMI, member 1 (client 0xe8aabbb8, pid 23947) sharing group DBPOMMI, member 1, share type normal

2009-10-21 12:22:22.202: [ ocssd][3799477152]group DG_LOCAL_POMMIDG, member 0 (client 0x8a23df0, pid 23949) sharing group DG_LOCAL_POMMIDG, member 0, share type normal

2009-10-21 12:22:22.202: [ ocssd][3799477152]group DG_LOCAL_POMMIDG, member 0 (client 0x8a25268, pid 23929) sharing group DG_LOCAL_POMMIDG, member 0, share type normal

2009-10-21 12:22:22.202: [ ocssd][3799477152]group DG_LOCAL_POMMIDG, member 0 (client 0x89e9f78, pid 23951) sharing group DG_LOCAL_POMMIDG, member 0, share type normal

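At this point, oclskd.log shows that these processes were killed successfully and that the kill request is complete. In 11.2.0.2 and later, the kill daemon thread performs the kill: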

2009-10-21 12:22:22.295: [ USRTHRD][3980221344] clsnkillagent_main:killreq received:

2009-10-21 12:22:22.295: [ USRTHRD][3980221344] clsskdKillMembers: kill status 0 pid 23929

2009-10-21 12:22:22.295: [ USRTHRD][3980221344] clsskdKillMembers: kill status 0 pid 23973

2009-10-21 12:22:22.295: [ USRTHRD][3980221344] clsskdKillMembers: kill status 0 pid 23957

2009-10-21 12:22:22.295: [ USRTHRD][3980221344] clsskdKillMembers: kill status 0 pid 23949

2009-10-21 12:22:22.295: [ USRTHRD][3980221344] clsskdKillMembers: kill status 0 pid 23951

2009-10-21 12:22:22.295: [ USRTHRD][3980221344] clsskdKillMembers: kill status 0 pid 23947

2009-10-21 12:22:22.295: [ USRTHRD][3980221344] clsskdKillMembers: kill status 0 pid 23949

2009-10-21 12:22:22.295: [ USRTHRD][3980221344] clsskdKillMembers: kill status 0 pid 23929

2009-10-21 12:22:22.295: [ USRTHRD][3980221344] clsskdKillMembers: kill status 0 pid 23951

2009-10-21 12:22:22.295: [ USRTHRD][3980221344] clsskdKillMembers: kill status 0 pid 23947

However, if the request does not complete within misscount + 1/2 second, the OCSSD on the local node escalates it to a node kill request:

2009-10-21 12:22:33.655: [ ocssd][2712714144]clssgmMbrKillThread: Time up: Start time -1854322858 End time -1854311358 Current time -1854311358 timeout 11500

2009-10-21 12:22:33.655: [ ocssd][2712714144]clssgmMbrKillThread: Member kill request complete.

2009-10-21 12:22:33.655: [ ocssd][2712714144]clssgmMbrKillSendEvent: Missing answers or immediate escalation: Req member 2 Req node 2 Number of answers expected 0 Number of answers outstanding 1

2009-10-21 12:22:33.656: [ ocssd][2712714144]clssgmQueueGrockEvent: groupName(DBPOMMI) count(4) master(0) event(11), incarn 0, mbrc 0, to member 2, events 0x68, state 0x0

2009-10-21 12:22:33.656: [ ocssd][2712714144]clssgmMbrKillEsc: Escalating node 1 Member request 0x00000002 Member success 0x00000000 Member failure 0x00000000 Number left to kill 1

2009-10-21 12:22:33.656: [ ocssd][2712714144]clssnmKillNode: node 1 (staiu02) kill initiated

2009-10-21 12:22:33.656: [ ocssd][2712714144]clssgmMbrKillThread: Exiting

The OCSSD on the target node aborts, which forces a node reboot:

2009-10-21 12:22:33.705: [ ocssd][3799477152]clssgmmkLocalKillThread: Time up.

Timeout 11500 Start time 1088320132 End time 1088331632 Current time 1088331632

2009-10-21 12:22:33.705: [ ocssd][3799477152]clssgmmkLocalKillResults: Replying to kill request from remote node 2 kill id 1 Success map 0x00000000 Fail map 0x00000000

2009-10-21 12:22:33.705: [ ocssd][3799477152]clssgmmkLocalKillThread: Exiting

…

2009-10-21 12:22:34.679: [

ocssd][3948735392](:CSSNM00005:)clssnmvDiskKillCheck: Aborting, evicted by node 2, sync 151438398, stamp 2440656688

2009-10-21 12:22:34.679: [ ocssd][3948735392]###################################

2009-10-21 12:22:34.679: [ ocssd][3948735392]clssscExit: ocssd aborting from thread clssnmvKillBlockThread

2009-10-21 12:22:34.679: [ ocssd][3948735392]###################################
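Both "Time up" lines above print raw counters, and in each one End time minus Start time equals the timeout value (11500), i.e. the kill request ran out its full window before escalation. A minimal Python sketch that extracts the counters and checks that arithmetic; treating the unit as milliseconds is an assumption, and kill_window is a hypothetical helper, not an Oracle tool:

```python
import re

# Each counter is matched on its own, because the remote ("Time up:") and
# local ("Time up.") kill-thread lines print the fields in a different order.
FIELDS = {
    "start": re.compile(r"Start time (-?\d+)"),
    "end": re.compile(r"End time (-?\d+)"),
    "timeout": re.compile(r"[Tt]imeout (\d+)"),
}

def kill_window(line: str) -> dict:
    """Extract Start/End/timeout counters from a 'Time up' line and add End - Start."""
    values = {}
    for key, pattern in FIELDS.items():
        m = pattern.search(line)
        if m is None:
            raise ValueError(f"field {key!r} not found in line")
        values[key] = int(m.group(1))
    values["elapsed"] = values["end"] - values["start"]
    return values

remote = kill_window("Start time -1854322858 End time -1854311358 Current time -1854311358 timeout 11500")
local = kill_window("Timeout 11500 Start time 1088320132 End time 1088331632 Current time 1088331632")
print(remote["elapsed"], local["elapsed"])  # 11500 11500
```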

How can you identify the client that originally issued the member kill request?

The requester can be found in ocssd.log:

2009-10-21 12:22:22.151: [ocssd][2996095904]clssgmExecuteClientRequest: Member kill request from client (0x8b054a8)

<search backwards to when client registered>

2009-10-21 12:13:24.913: [ocssd][2996095904]clssgmRegisterClient: proc(22/0x8a5d5e0), client(1/0x8b054a8)

<search backwards to when process connected to ocssd>

2009-10-21 12:13:24.897: [ocssd][2996095904]clssgmClientConnectMsg: Connect from con(0x677b23) proc(0x8a5d5e0) pid(20485/20485) version 11:2:1:4, properties: 1,2,3,4,5

The process can then be identified by its process ID with 'ps', or from other history (such as trace files, IPD/OS, or OSWatcher):

$ ps -ef|grep ora_lmon

spommere 20485 1 0 01:46 ? 00:01:15 ora_lmon_pommi_3
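The backwards search can be scripted. A minimal Python sketch, assuming the three ocssd.log message formats shown above: it walks the log upwards from the kill request, follows the client handle to the registering proc, and the proc to the OS pid. find_kill_requester_pid is a hypothetical helper; the pid it returns can then be looked up with ps (for example ps -p <pid> -o args=).

```python
import re

KILL_REQ = re.compile(r"clssgmExecuteClientRequest: Member kill request from client \((0x[0-9a-f]+)\)")
REGISTER = re.compile(r"clssgmRegisterClient:\s*proc\(\d+/(0x[0-9a-f]+)\), client\(\d+/(0x[0-9a-f]+)\)")
CONNECT = re.compile(r"clssgmClientConnectMsg: Connect from con\(0x[0-9a-f]+\) proc\((0x[0-9a-f]+)\) pid\((\d+)/\d+\)")

def find_kill_requester_pid(lines):
    """Return the OS pid behind the most recent member kill request, or None."""
    client = proc = None
    # Walk backwards, like searching upwards in ocssd.log from the kill request.
    for line in reversed(lines):
        if client is None:
            m = KILL_REQ.search(line)
            if m:
                client = m.group(1)           # e.g. 0x8b054a8
        elif proc is None:
            m = REGISTER.search(line)
            if m and m.group(2) == client:
                proc = m.group(1)             # proc that registered this client
        else:
            m = CONNECT.search(line)
            if m and m.group(1) == proc:
                return int(m.group(2))        # pid of the connecting process
    return None
```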

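The Intelligent Platform Management Interface (IPMI) is an industry-standard management protocol included with many servers today. IPMI operates independently of the operating system and can work even if the system is not powered on. Servers with IPMI contain a Baseboard Management Controller (BMC), which is used to communicate with the server.

About using IPMI for node fencing

To support escalation of a member kill to node termination, you must configure and use an external mechanism capable of restarting a problem node without cooperation from either Oracle Clusterware or the operating system running on that node. IPMI is such a mechanism, supported starting with 11.2. IPMI is normally configured during installation. If it was not configured during installation, it can be configured with crsctl after the CRS installation has completed.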

About Node-termination Escalation with IPMI

To use IPMI for node termination, each cluster member node must be equipped with a Baseboard Management Controller (BMC) running firmware compatible with IPMI version 1.5, which supports IPMI over a local area network (LAN). During database operation, member-kill escalation is accomplished by communication from the evicting ocssd daemon to the victim node’s BMC over LAN. The IPMI over LAN protocol is carried over an authenticated session protected by a user name and password, which are obtained from the administrator during installation. If the BMC IP addresses are DHCP assigned, ocssd requires direct communication with the local BMC during CSS startup. This is accomplished using a BMC probe command (OSD), which communicates with the BMC through an IPMI driver, which must be installed and loaded on each cluster system.

OLR Configuration for IPMI

There are two ways to configure IPMI, either during the Oracle Clusterware installation via the Oracle Universal Installer or afterwards via crsctl.

OUI – asks about node-fencing via IPMI

tests for driver to enable full support (DHCP addresses)

obtains IPMI username and password and configures OLR on all cluster nodes

Manual configuration – after install or when using static IP addresses for BMCs

crsctl query css ipmidevice

crsctl set css ipmiadmin <ipmi-admin>

crsctl set css ipmiaddr

See: Oracle Clusterware Administration and Deployment Guide, "Configuration and Installation for Node Fencing", and Oracle Grid Infrastructure Installation Guide, "Enabling Intelligent Platform Management Interface (IPMI)", for more information.

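Sometimes it is necessary to change the default logging level of ocssd.

In 11.2 the default logging level is 2. To change the logging level, run the following command as root on one node:

# crsctl set log css CSSD:N (where N is the logging level)

Logging level 2 = default

Logging level 3 = verbose; displays each heartbeat message, including misstime, which helps when debugging NHB-related problems.

Logging level 4 = super verbose

Most problems can be solved at level 2; some require level 3, and few require level 4. At level 3 or 4, trace information may be retained for only a few hours (or even minutes), because the trace files can fill up and information can be overwritten. Note that a high logging level affects ocssd performance because of the amount of tracing. If you need to keep the data for a longer period, create a cron job to back up and compress the CSS logs.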
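A minimal sketch of such a backup job in Python, meant to be scheduled from cron. It assumes the CSS logs live in one directory (for example Grid_home/log/<hostname>/cssd) and that the current and rotated files match ocssd.l* (ocssd.log, ocssd.l01, ...); both the location and the naming are assumptions to verify on your system, and backup_css_logs is a hypothetical name.

```python
import gzip
import shutil
import sys
import time
from pathlib import Path

def backup_css_logs(log_dir: str, backup_dir: str) -> list:
    """Copy each ocssd log file into backup_dir as a timestamped .gz file."""
    dst = Path(backup_dir)
    dst.mkdir(parents=True, exist_ok=True)
    stamp = time.strftime("%Y%m%d%H%M%S")
    written = []
    # Assumed naming: current ocssd.log plus rotated ocssd.l01, ocssd.l02, ...
    for src in sorted(Path(log_dir).glob("ocssd.l*")):
        target = dst / f"{src.name}.{stamp}.gz"
        with src.open("rb") as fin, gzip.open(target, "wb") as fout:
            shutil.copyfileobj(fin, fout)
        written.append(target)
    return written

if __name__ == "__main__" and len(sys.argv) == 3:
    # usage: backup_css_logs.py <css log dir> <backup dir>
    backup_css_logs(sys.argv[1], sys.argv[2])
```

Compressing copies rather than moving the files leaves the live ocssd.log untouched, so the job can run while ocssd keeps writing.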

To increase tracing for cssdagent or cssdmonitor, use crsctl:

# crsctl set log res ora.cssd=2 -init

# crsctl set log res ora.cssdmonitor=2 -init

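In Oracle 11.2 Clusterware, CSS writes stack dumps to cssdOUT.log, and enhancements help flush diagnostic data to disk before a reboot occurs. Therefore, in 11.2 we do not consider it necessary to change diagwait (default 0) unless advised by Support or Development.

In very rare cases, and only during debugging, it may be necessary to disable ocssd reboots. This can be done with the following crsctl commands. Reboots should be disabled only under the guidance of Support or Development; the change can be made online without restarting the stack.

# crsctl modify resource ora.cssd -attr "ENV_OPTS=DEV_ENV" -init

# crsctl modify resource ora.cssdmonitor -attr "ENV_OPTS=DEV_ENV" -init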

Starting with 11.2.0.2, higher logging levels can be enabled for individual modules.

List the names of all modules of the CSS daemon with the following command:

[root@rac2 bin]# ./crsctl lsmodules css
List CSSD Debug Module: CLSF
List CSSD Debug Module: CSSD
List CSSD Debug Module: GIPCCM
List CSSD Debug Module: GIPCGM
List CSSD Debug Module: GIPCNM
List CSSD Debug Module: GPNP
List CSSD Debug Module: OLR
List CSSD Debug Module: SKGFD

CLSF and SKGFD – I/O to the voting (quorum) disks

CSSD – the same CSSD module as in earlier releases

GIPCCM – gipc communication between applications and CSS

GIPCGM – communication between peers in the GM layer

GIPCNM – communication between nodes in the NM layer

GPNP – trace for gpnp calls within CSS

OLR – trace for olr calls within CSS

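The following example sets different logging levels for different modules:

# crsctl set log css GIPCCM=1,GIPCGM=2,GIPCNM=3

# crsctl set log css CSSD=4

To check the current trace logging levels, use the following commands:

# crsctl get log ALL

# crsctl get log css GIPCCM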

CSSDAGENT and CSSDMONITOR

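CSSDAGENT and CSSDMONITOR provide almost the same functionality. cssdagent starts, stops, and checks the status of the ocssd daemon; cssdmonitor monitors cssdagent. There is no ora.cssdagent resource, and there is no resource for the ocssd daemon.

Before 11.2, this functionality was provided by the oprocd and oclsomon daemons. Like ocssd, cssdagent and cssdmonitor run at real-time priority with locked memory.

In addition, cssdagent and cssdmonitor provide the following services to ensure data integrity:

Monitoring ocssd: if ocssd fails, cssd* reboots the node

Monitoring node scheduling: if the node hangs or processes are not being scheduled, reboot the node.

To make a more comprehensive decision about whether a reboot is required, cssdagent and cssdmonitor receive state information from ocssd via NHB, ensuring that the state of the local node as perceived by remote nodes is accurate. Furthermore, the integration uses the time that other nodes wait before perceiving the local node as down for purposes such as filesystem sync, so that complete diagnostic data can be captured.

To enable debugging of the ocssd agent, use the command crsctl set log res ora.cssd:3 -init. The change is logged in Grid_home/log/<hostname>/agent/ohasd/oracssdagent_root/oracssdagent_root.log, and the additional trace output is written to the same oracssdagent_root.log: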

2009-11-25 10:00:52.386: [ AGFW][2945420176] Agent received the message: RESOURCE_MODIFY_ATTR[ora.cssd 1 1] ID 4355:106099

2009-11-25 10:00:52.387: [ AGFW][2966399888] Executing command: res_attr_modified for resource: ora.cssd 1 1

2009-11-25 10:00:52.387: [ USRTHRD][2966399888] clsncssd_upd_attr: setting trace to level 3

2009-11-25 10:00:52.388: [ CSSCLNT][2966399888]clssstrace: trace level set to 2

2009-11-25 10:00:52.388: [ AGFW][2966399888] Command: res_attr_modified for resource: ora.cssd 1 1 completed with status: SUCCESS

2009-11-25 10:00:52.388: [ AGFW][2945420176] Attribute: LOGGING_LEVEL for resource ora.cssd modified to: 3

2009-11-25 10:00:52.388: [ AGFW][2945420176] config version updated to : 7 for ora.cssd 1 1

2009-11-25 10:00:52.388: [ AGFW][2945420176] Agent sending last reply for: RESOURCE_MODIFY_ATTR[ora.cssd 1 1] ID 4355:106099

2009-11-25 10:00:52.484: [ CSSCLNT][3031063440]clssgsgrpstat: rc 0, gev 0, incarn 2, mc 2, mast 1, map 0x00000003, not posted

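The same applies to the cssdmonitor (ora.cssdmonitor) resource.

Heartbeats

Disk HeartBeat (DHB): written periodically to the voting files, once per second

Network HeartBeat (NHB): sent to the other nodes once per second

Local HeartBeat (LHB): sent to the agent and monitor once per second

ocssd threads

Sending Thread (ST): sends the network heartbeat and the local heartbeat at the same time

Disk Ping thread: writes the disk heartbeat to the voting files once per second

Cluster Listener (CLT): receives messages sent from the other nodes, mainly network heartbeats

agent/monitor threads

HeartBeat thread (HBT): receives the local heartbeat from ocssd and detects connection failures

OMON thread (OMT): monitors for connection failures

OPROCD thread (OPT): monitors scheduling of the agent/monitor process

VMON thread (VMT): replaces the clssvmon executable and registers in the skgxn group for vendor clusterware

Timeouts

Misscount (MC): the amount of time a node can go without a network heartbeat before it is removed

Network Time Out (NTO): the maximum time remaining with no network heartbeat from a node before it is removed

Disk Time Out (DTO): the maximum time remaining before a majority of the voting files is considered inaccessible

ReBoot Time (RBT): the time allowed for a reboot to complete; the default is three seconds.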

Misscount, SIOT, RBT

Disk I/O Timeout: the amount of time a voting file can be offline before it is considered unusable

SIOT – Short I/O Timeout, in effect during reconfig

LIOT – Long I/O Timeout, in effect otherwise

Long I/O Timeout (LIOT): configured with crsctl set css disktimeout; the default is 200 seconds.

Short I/O Timeout (SIOT) is (misscount – reboot time)

In effect when NHB’s missed for misscount/2

ocssd terminates if no DHB for SIOT

Allows RBT seconds after termination for reboot to complete
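The relationship between these timeouts is simple arithmetic. A minimal sketch, assuming a misscount of 30 seconds (a common Linux default; check yours with crsctl get css misscount) together with the defaults stated above (RBT 3 s, LIOT 200 s). disk_io_timeout is a hypothetical helper that only restates the rules listed here:

```python
def disk_io_timeout(misscount: int, seconds_since_last_nhb: float,
                    reboot_time: int = 3, long_io_timeout: int = 200) -> int:
    """Return the voting-file I/O timeout (seconds) in effect.

    SIOT = misscount - reboot time, and it applies once network heartbeats
    have been missed for misscount/2 (a reconfig is approaching);
    otherwise the long I/O timeout (LIOT) applies.
    """
    siot = misscount - reboot_time
    if seconds_since_last_nhb >= misscount / 2:
        return siot
    return long_io_timeout

print(disk_io_timeout(30, 2))   # 200: network healthy, LIOT in effect
print(disk_io_timeout(30, 16))  # 27: NHBs missed for over misscount/2, SIOT in effect
```

With these numbers, ocssd terminates if it cannot write a DHB for 27 seconds during a reconfig, leaving the 3 seconds of RBT for the reboot to complete before the other nodes' 30-second misscount expires.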

Disk Heartbeat Perceptions

Other node perception of local state in reconfig

No NHB for misscount, node not visible on network

No DHB for SIOT, node not alive

If node alive, wait full misscount for DHB activity to be missing, i.e. node not alive

As long as DHB’s are written, other nodes must wait

Perception of local state by other nodes must be valid to avoid data corruption

Disk Heartbeat Relevance

DHB only read starting shortly before a reconfig to remove the node is started

When no reconfig is impending, the I/O timeout not important, so need not be monitored

If the disk timeout expires, but the NHB’s have been sent to and received from other nodes, it will still be misscount seconds before other nodes will start a reconfig

The proximity to a reconfig is important state information for OPT

Clocks

Time Of Day Clock (TODC) the clock that indicates the hour/minute/second of the day (may change as a result of commands)

aTODC is the agent TODC

cTODC is the ocssd TODC

Invariant Time Clock (ITC) a monotonically increasing clock that is invariant, i.e. it does not change as a result of commands. Setting the time of day backwards or forwards does not affect the invariant clock.

aITC is the agent ITC

cITC is the ocssd ITC
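Python exposes the same distinction: time.time() behaves like a TODC, and time.monotonic() behaves like an ITC. A minimal sketch with the clock injected, so that the effect of setting the wall clock back can be shown deterministically; SteppedClock is a hypothetical test helper:

```python
import time
from typing import Callable

def elapsed(clock: Callable[[], float], start: float) -> float:
    """Seconds elapsed since `start`, as measured by `clock`."""
    return clock() - start

class SteppedClock:
    """Hypothetical clock that returns scripted readings (simulates time changes)."""
    def __init__(self, readings):
        self._readings = iter(readings)

    def __call__(self) -> float:
        return next(self._readings)

# TODC-like wall clock: during a 5 s wait an administrator sets the time back one hour.
todc = SteppedClock([1000.0, 1000.0 + 5 - 3600])
# ITC-like clock: unaffected by the change.
itc = SteppedClock([50.0, 55.0])

t0, i0 = todc(), itc()
print(elapsed(todc, t0))  # -3595.0: a TODC-based timeout would never fire
print(elapsed(itc, i0))   # 5.0

# In real code, time.monotonic() plays the ITC role:
start = time.monotonic()
```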

How it works

ocssd state information contains the current clock information, the network time out (NTO) based on the node with the longest time since the last NHB and a disk I/O timeout based on the amount of time since the majority of voting files was last online. The sending thread gathers this current state information and sends both a NHB and local heartbeat to ensure that the agent perception of the aliveness of ocssd is the same as that of other nodes.

The cluster listener thread monitors the sending thread. It ensures the sending thread has been scheduled recently and wakes up if necessary. There are enhancements here to ensure that even after clock shifts backwards and forwards, the sending thread is scheduled accurately.

There are several agent threads, one is the oprocd thread which just sleeps and wakes up periodically. Upon wakeup, it checks if it should initiate a reboot, based on the last known ocssd state information and the local invariant time clock (ITC). The wakeup is timer driven. The heartbeat thread is just waiting for a local heartbeat from the ocssd. The heartbeat thread will calculate the value that the oprocd thread looks at, to determine whether to reboot. It checks if the oprocd thread has been awake recently and if not, pings it awake. The heartbeat thread is event driven and not timer driven.
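The division of labour described above, with an event-driven heartbeat thread and a timer-driven oprocd thread, can be modelled in a few lines. This is a simplified, hypothetical model (the class and field names are ours), not Oracle's implementation: each local heartbeat is turned into an absolute reboot deadline on the invariant clock, and each oprocd wakeup compares the ITC with that deadline.

```python
from dataclasses import dataclass

@dataclass
class AgentState:
    # ITC value after which a reboot is due; no heartbeat yet means no deadline.
    reboot_deadline_itc: float = float("inf")

    def on_local_heartbeat(self, now_itc: float, remaining_before_eviction: float) -> None:
        """Heartbeat thread (event driven): convert ocssd's reported state into
        an absolute deadline on the invariant clock."""
        self.reboot_deadline_itc = now_itc + remaining_before_eviction

    def oprocd_should_reboot(self, now_itc: float) -> bool:
        """oprocd thread (timer driven): on each wakeup, compare ITC with the deadline."""
        return now_itc >= self.reboot_deadline_itc

state = AgentState()
state.on_local_heartbeat(now_itc=100.0, remaining_before_eviction=27.0)
print(state.oprocd_should_reboot(110.0))  # False
print(state.oprocd_should_reboot(127.0))  # True: no newer LHB arrived in time
```

Using the ITC for the deadline is what keeps the decision correct when the time of day is changed.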

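Filesystem sync

When ocssd fails, a filesystem sync is started. There is usually enough time for this, so we can wait several seconds for the sync. The last local heartbeat indicates how long we can wait, and that wait time is based on misscount. When the wait time expires, oprocd reboots the node. In most cases the diagnostic data is written to disk. Only in rare cases, for example when the sync was never issued because CSS was hung, does the data not reach the disk.

Cluster Ready Services (CRS) is the primary program for managing high-availability operations. The CRS daemon (crsd) manages cluster resources based on the configuration information stored in the OCR for each resource. This includes start, stop, monitor, and failover operations. The crsd daemon monitors database instances, listeners, and so on, and automatically restarts these components when a failure occurs.

The crsd daemon runs as root and is restarted automatically after a failure. When Oracle Clusterware is installed in a single-instance database environment for ASM and Oracle Restart, ohasd instead of crsd manages the application resources.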

Policy Engine

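Overview

In 11.2, high availability of resources is handled by OHASD (typically for infrastructure resources) and CRSD (for applications deployed on the cluster). The two daemons share the same architecture and most of the code base; for most intents and purposes, OHASD can be seen as CRSD on a single-node cluster. The discussion in the following sections applies to both daemons.

Starting with 11.2, the CRSD architecture implements a master-slave model: a single CRSD in the cluster is elected master and all the others are slaves. At daemon startup and every time the master is re-elected, CRSD writes the current master to crsd.log:

grep "PE MASTER" Grid_home/log/<hostname>/crsd/crsd.*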

crsd.log:2010-01-07 07:59:36.529: [ CRSPE][2614045584] PE MASTER NAME: staiv13
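When several crsd logs (current and rotated) are grepped, the latest entry names the current master. A minimal Python sketch, assuming the grep output format shown above; current_pe_master is a hypothetical helper:

```python
import re

# Timestamp and node name of a "PE MASTER NAME" entry; a "crsd.log:" prefix
# added by grep is simply skipped because re.search is not anchored.
PE_MASTER = re.compile(
    r"(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3}): .*PE MASTER NAME: (\S+)"
)

def current_pe_master(lines):
    """Return (timestamp, node) for the most recent PE master entry, or None."""
    hits = []
    for line in lines:
        m = PE_MASTER.search(line)
        if m:
            hits.append((m.group(1), m.group(2)))
    # This timestamp format sorts correctly as a plain string.
    return max(hits) if hits else None
```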

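CRSD is a distributed application composed of several "modules". Modules are mostly stateless and operate by exchanging messages. The state (context) is always carried with each message, and most interactions are asynchronous in nature. Some modules have dedicated threads, some share a single thread, and some operate on a shared thread pool. The important CRSD modules are as follows:

For example, a client request to modify a resource produces the following interactions:

CRSCTL -> UI Server -> PE -> OCR Module -> PE -> Reporter (event publishing)

PE -> Proxy (to notify the agent)

CRSCTL <- UI Server <- PE

Note that the UiServer, PE, and Proxy can each be on a different node, as shown in the figure below: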

Resource Instances & IDs

In 11.2, CRS modeling supports two concepts of resource multiplicity: cardinality and degree. The former controls the number of nodes where the resource can run concurrently, while the latter controls the number of instances of the resource that can run on each node. To support these concepts, the PE now distinguishes between resources and resource instances. The former can be seen as a configuration profile for the entire resource, while the latter represents the state data for each instance of the resource. For example, a resource with CARDINALITY=2, DEGREE=3 will have 6 resource instances. Operations that affect resource state (start, stop, etc.) are performed using resource instances. Internally, resource instances are referred to by IDs of the format "<A> <B> <C>" (note the space separation), where <A> is the resource name, <C> is the degree of the instance (mostly 1), and <B> is the cardinality of the instance for cluster_resource resources, or the name of the node to which the instance is assigned for local_resource resources. That is why resource names have "funny" decorations in the logs:

[ CRSPE][2660580256] {1:25747:256} RI [r1 1 1] new target state: [ONLINE] old value: [OFFLINE]
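The ID scheme can be illustrated by enumerating the instance IDs of a cluster_resource. A minimal sketch following the "<A> <B> <C>" format described above, with <B> as the cardinality index and <C> as the degree index; resource_instance_ids is a hypothetical helper, and local_resource IDs (which carry a node name in place of <B>) are not covered:

```python
def resource_instance_ids(name: str, cardinality: int, degree: int) -> list:
    """Build '<name> <cardinality#> <degree#>' IDs for a cluster_resource."""
    return [f"{name} {c} {d}"
            for c in range(1, cardinality + 1)
            for d in range(1, degree + 1)]

ids = resource_instance_ids("r1", cardinality=2, degree=3)
print(len(ids))  # 6
print(ids[0])    # r1 1 1
```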

Log Correlation

CRSD is event-driven in nature. Everything of interest is an event or command to process. Two kinds of commands are distinguished: planned and unplanned. The former are usually administrator-initiated (add/start/stop/update a resource, etc.) or system-initiated (resource auto start at node reboot, for instance) actions, while the latter are normally unsolicited state changes (a resource failure, for example). In either case, processing such events/commands is what CRSD does, and that is when module interaction takes place. One can easily follow the interaction/processing of each event in the logs, from the point of origination (say, the UI module) through the PE, all the way to the agent and back, using the concept referred to as a "tint". A tint is basically a cluster-unique event ID of the format {X:Y:Z}, where X is the node number, Y is a node-unique number of the process where the event first entered the system, and Z is a monotonically increasing sequence number, per process. For instance, {1:25747:254} is the tint for the 254th event that originated in a process internally referred to as 25747 on node number 1. Tints are new in 11.2.0.2 and can be seen in CRSD/OHASD/agent logs. Each event in the system is assigned a unique tint when it enters the system, and modules prefix each log message with that tint while working on the event.
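Because the tint format is fixed, it is easy to pick out of a log line. A minimal Python sketch that parses the {X:Y:Z} format described above into its three parts; Tint and parse_tint are hypothetical names:

```python
import re
from typing import NamedTuple, Optional

class Tint(NamedTuple):
    node: int      # X: node number where the event entered the system
    process: int   # Y: node-unique number of the originating process
    sequence: int  # Z: per-process, monotonically increasing sequence number

TINT = re.compile(r"\{(\d+):(\d+):(\d+)\}")

def parse_tint(line: str) -> Optional[Tint]:
    """Return the first {X:Y:Z} tint in a CRSD/OHASD/agent log line, or None."""
    m = TINT.search(line)
    return Tint(*map(int, m.groups())) if m else None

print(parse_tint("[ CRSPE][2660580256] {1:25747:256} RI [r1 1 1] new target state: [ONLINE]"))
# Tint(node=1, process=25747, sequence=256)
```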

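For example, in a 3-node cluster where node0 hosts the PE master, running "crsctl start resource r1 -n node2" on node1, exactly as in the figure above, produces the following log messages:

CRSD log on node 1 (crsctl always connects to the local CRSD; the UI server forwards the command to the PE)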

2009-12-29 17:07:24.742: [UiServer][2689649568] {1:25747:256} Container [ Name: UI_START

…

RESOURCE:

TextMessage[r1]

2009-12-29 17:07:24.742: [UiServer][2689649568] {1:25747:256} Sending message to PE. ctx= 0xa3819430

CRSD log on node 0 (with the PE master)

2009-12-29 17:07:24.745: [ CRSPE][2660580256] {1:25747:256} Cmd : 0xa7258ba8 : flags: HOST_TAG | QUEUE_TAG

2009-12-29 17:07:24.745: [ CRSPE][2660580256] {1:25747:256} Processing PE command id=347. Description: [Start Resource : 0xa7258ba8]

2009-12-29 17:07:24.748: [ CRSPE][2660580256] {1:25747:256} RI [r1 1 1] new target state: [ONLINE] old value: [OFFLINE]

2009-12-29 17:07:24.748: [ CRSOCR][2664782752] {1:25747:256} Multi Write Batch processing…

2009-12-29 17:07:24.753: [ CRSPE][2660580256] {1:25747:256} Sending message to agfw: id = 2198

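Here, the PE performs the policy evaluation and interacts with the Proxy on the target node (to perform the start action) and with the OCR (to record the new value of the target).

CRSD log on node 2 (starts the agent and forwards the message to it)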

2009-12-29 17:07:24.763: [ AGFW][2703780768] {1:25747:256} Agfw Proxy Server received the message: RESOURCE_START[r1 1 1] ID 4098:2198

2009-12-29 17:07:24.767: [ AGFW][2703780768] {1:25747:256} Starting the agent: /ade/agusev_bug/oracle/bin/scriptagent with user id: agusev and incarnation:1

Agent log on node 2 (the agent executes the start command)

2009-12-29 17:07:25.120: [ AGFW][2966404000] {1:25747:256} Agent received the message: RESOURCE_START[r1 1 1] ID 4098:1459

2009-12-29 17:07:25.122: [ AGFW][2987383712] {1:25747:256} Executing command: start for resource: r1 1 1

2009-12-29 17:07:26.990: [ AGFW][2987383712] {1:25747:256} Command: start for resource: r1 1 1 completed with status: SUCCESS

2009-12-29 17:07:26.991: [ AGFW][2966404000] {1:25747:256} Agent sending reply for: RESOURCE_START[r1 1 1] ID 4098:1459

CRSD log on node 2 (the agent replies; the message is passed back to the PE)

2009-12-29 17:07:27.514: [ AGFW][2703780768] {1:25747:256} Agfw Proxy Server received the message: CMD_COMPLETED[Proxy] ID 20482:2212

2009-12-29 17:07:27.514: [ AGFW][2703780768] {1:25747:256} Agfw Proxy Server replying to the message: CMD_COMPLETED[Proxy] ID 20482:2212

CRSD log on node 0 (receives the reply, notifies the Reporter and replies to the UI server; the Reporter publishes the event to EVM)

2009-12-29 17:07:27.012: [ CRSPE][2660580256] {1:25747:256} Received reply to action [Start] message ID: 2198

2009-12-29 17:07:27.504: [ CRSPE][2660580256] {1:25747:256} RI [r1 1 1] new external state [ONLINE] old value: [OFFLINE] on agusev_bug_2 label = []

2009-12-29 17:07:27.504: [ CRSRPT][2658479008] {1:25747:256} Sending UseEvm mesg

2009-12-29 17:07:27.513: [ CRSPE][2660580256] {1:25747:256} UI Command [Start Resource : 0xa7258ba8] is replying to sender.

CRSD log on node 1 (the crsctl command completes: the UI server writes out the response, completing the API request)

2009-12-29 17:07:27.525: [UiServer][2689649568] {1:25747:256} Container [ Name: UI_DATA

r1: TextMessage[0]

]

2009-12-29 17:07:27.526: [UiServer][2689649568] {1:25747:256} Done for ctx=0xa3819430

The above demonstrates the ease of following distributed processing of a single request across 4 processes on 3 nodes by using tints as a way to filter, extract, group and correlate information pertaining to a single event across a plurality of diagnostic logs.
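The filtering and grouping can be automated. A minimal Python sketch, assuming log lines that start with the timestamp format shown above and contain a {X:Y:Z} tint; correlate is a hypothetical helper. The merged order across nodes is only as good as the cluster's clock synchronization: in the excerpts above, the node 2 Proxy timestamps (17:07:27.514) are later than node 0's "Received reply" (17:07:27.012), so treat cross-node ordering as approximate.

```python
import re
from collections import defaultdict

TS = re.compile(r"^(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3})")
TINT = re.compile(r"\{\d+:\d+:\d+\}")

def correlate(logs: dict) -> dict:
    """Group lines from several logs by tint.

    `logs` maps a label (e.g. 'node1 crsd.log') to its lines; the result maps
    each tint to a timestamp-ordered list of (timestamp, label, line)."""
    events = defaultdict(list)
    for label, lines in logs.items():
        for line in lines:
            tint, ts = TINT.search(line), TS.search(line)
            if tint and ts:
                events[tint.group()].append((ts.group(1), label, line))
    return {t: sorted(entries) for t, entries in events.items()}
```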

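A new feature of 11.2 Clusterware is Grid Plug and Play (GPnP), managed by the GPnP daemon (GPnPD). GPnPD provides access to the GPnP profile and coordinates updates to the profile among the nodes of the cluster, to ensure that all nodes have the most recent profile.

The GPnP configuration consists of a profile and a wallet, which are identical for every node. The profile and wallet are created and copied during installation. The GPnP profile is an XML text file containing the bootstrap information necessary to form a cluster, such as the cluster name, GUID, discovery strings, and expected network connectivity. It does not contain node-specific details. The profile is managed by GPnPD and exists in the GPnP cache on each node; if no changes have been made, it is identical on all nodes. Profiles are identified by a sequence number.

The GPnP wallet is just a binary blob containing public/private RSA keys used to sign and verify the GPnP profile. The wallet is identical for all GPnP peers; it is created when the software is installed, never changes, and lives forever.

A typical profile contains the information shown below. Never change the XML file directly; instead, use the supported tools, such as ASMCA, asmcmd, oifcfg, etc., to modify GPnP configuration information.

Using gpnptool to modify the GPnP profile is not recommended, because modifying the profile requires many steps. If invalid information is added, the profile can be corrupted, causing problems later.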

# gpnptool get

Warning: some command line parameters were defaulted. Resulting command line:

/scratch/grid_home_11.2/bin/gpnptool.bin get -o-

<?xml version="1.0" encoding="UTF-8"?><gpnp:GPnP-Profile Version="1.0" xmlns="http://www.grid-pnp.org/2005/11/gpnp-profile" xmlns:gpnp="http://www.grid-pnp.org/2005/11/gpnp-profile" xmlns:orcl="http://www.oracle.com/gpnp/2005/11/gpnp-profile" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://www.grid-pnp.org/2005/11/gpnp-profile gpnp-profile.xsd" ProfileSequence="4" ClusterUId="0cd26848cf4fdfdebfac2138791d6cf1" ClusterName="stnsp0506" PALocation="">
<gpnp:Network-Profile><gpnp:HostNetwork id="gen" HostName="*">
<gpnp:Network id="net1" IP="10.137.8.0" Adapter="eth0" Use="public"/>
<gpnp:Network id="net2" IP="10.137.20.0" Adapter="eth2" Use="cluster_interconnect"/>
</gpnp:HostNetwork></gpnp:Network-Profile>
<orcl:CSS-Profile id="css" DiscoveryString="+asm" LeaseDuration="400"/>
<orcl:ASM-Profile id="asm" DiscoveryString="/dev/sdf*,/dev/sdg*,/voting_disk/vote_node1" SPFile="+DATA/stnsp0506/asmparameterfile/registry.253.699162981"/>
<ds:Signature xmlns:ds="http://www.w3.org/2000/09/xmldsig#">
<ds:SignedInfo><ds:CanonicalizationMethod Algorithm="http://www.w3.org/2001/10/xml-exc-c14n#"/><ds:SignatureMethod Algorithm="http://www.w3.org/2000/09/xmldsig#rsa-sha1"/><ds:Reference URI="">
<ds:Transforms><ds:Transform Algorithm="http://www.w3.org/2000/09/xmldsig#enveloped-signature"/>
<ds:Transform Algorithm="http://www.w3.org/2001/10/xml-exc-c14n#">
<InclusiveNamespaces xmlns="http://www.w3.org/2001/10/xml-exc-c14n#" PrefixList="gpnp orcl xsi"/></ds:Transform></ds:Transforms>
<ds:DigestMethod Algorithm="http://www.w3.org/2000/09/xmldsig#sha1"/><ds:DigestValue>ORAmrPMJ/plFtGTg/mZP0fU8ypM=</ds:DigestValue>
</ds:Reference></ds:SignedInfo><ds:SignatureValue>
Ku7QBc1/fZ/RPT6BcHRaQ+sOwQswRfECwtA5SlQ2psCopVrO6XJV+BMJ1UG6sS3vuP7CrS8LXrOTyoIxSkU7xWAIB2Okzo/Zh/sej5O03GAgOvt+2OsFWX0iZ1+2e6QkAABHEsqCZwRdI4za3KJeTkIOPliGPPEmLuImuDiBgMk=</ds:SignatureValue></ds:Signature></gpnp:GPnP-Profile>

Success.
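Since the profile should only be changed through the supported tools, reading it is the safe operation. A minimal read-only sketch using Python's standard xml.etree.ElementTree, assuming the namespace URIs shown in the profile above and output of gpnptool get saved to a string or file; profile_summary is a hypothetical helper, not part of gpnptool:

```python
import xml.etree.ElementTree as ET

NS = {
    "gpnp": "http://www.grid-pnp.org/2005/11/gpnp-profile",
    "orcl": "http://www.oracle.com/gpnp/2005/11/gpnp-profile",
}

def profile_summary(xml_text) -> dict:
    """Read-only summary of a GPnP profile: cluster name, sequence, networks, CSS discovery."""
    data = xml_text.encode("utf-8") if isinstance(xml_text, str) else xml_text
    root = ET.fromstring(data)
    networks = [
        {key: net.get(key) for key in ("id", "IP", "Adapter", "Use")}
        for net in root.iterfind(".//gpnp:Network", NS)
    ]
    css = root.find("orcl:CSS-Profile", NS)
    return {
        "cluster": root.get("ClusterName"),
        "sequence": int(root.get("ProfileSequence")),
        "networks": networks,
        "css_discovery": css.get("DiscoveryString") if css is not None else None,
    }
```

Comparing the "sequence" value across nodes is a quick way to spot a node that is not serving the most recent profile.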

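The initial GPnP configuration is created and propagated by the root script during Grid Infrastructure installation. On a fresh install, the profile content comes from the installer's configuration in Grid_home/crs/install/crsconfig_params.

Like other daemons, the GPnP daemon is managed by OHASD and spawned by the OHASD oraagent. The main purpose of GPnPD is to serve the profile, so it is required to start the stack. The main GPnPD startup sequence is:

Several client tools can modify the GPnP profile directly; they require ocssd to be running:

Note that profile changes are serialized cluster-wide with a CSS lock (bug 7327595).

Grid_home/bin/gpnptool is the actual tool for maintaining the GPnP profile. For detailed usage information, run: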

Oracle GPnP Tool Usage:

“gpnptool <verb> <switches>”, where verbs are:

create Create a new GPnP Profile

edit Edit existing GPnP Profile

getpval Get value(s) from GPnP Profile

get Get profile in effect on local node

rget Get profile in effect on remote GPnP node

put Put profile as a current best

find Find all RD-discoverable resources of given type

lfind Find local gpnpd server

check Perform basic profile sanity checks

c14n Canonicalize, format profile text (XML C14N)

sign Sign/re-sign profile with wallet’s private key

unsign Remove profile signature, if any

verify Verify profile signature against wallet certificate

help Print detailed tool help

ver Show tool version

To obtain more logging and tracing, set the environment variable GPNP_TRACELEVEL to a value in the range 0-6. GPnP trace files are in:

Grid_home/log/<hostname>/alert*

Grid_home/log/<hostname>/client/gpnptool*, other client logs

Grid_home/log/<hostname>/gpnpd|mdnsd/*

Grid_home/log/<hostname>/agent/ohasd/oraagent_<username>/*

The product setup files contain basic information and are located in:

Grid_home/crs/install/crsconfig_params

Grid_home/cfgtoollogs/crsconfig/root*

Grid_home/gpnp/*,

Grid_home/gpnp/<hostname>/* [profile+wallet]

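If the GPnP setup fails, check the following failure scenarios:

If errors occur while GPnP is running, check the following:

For all of the above, the first troubleshooting step should be to check the log files of the active daemons and to check the resource states with crsctl stat res -init -t.

Additional troubleshooting steps when GPnPD is not running:

# gpnptool check -p=/scratch/grid_home_11.2/gpnp/stnsp006/profiles/peer/profile.xml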

Profile cluster=”stnsp0506″, version=4

GPnP profile signed by peer, signature valid.

Got GPnP Service current profile to check against.

Current GPnP Service Profile cluster=”stnsp0506″, version=4

Error: profile version 4 is older than- or duplicate of- GPnP Service current profile version 4.

Profile appears valid, but push will not succeed.

# gpnptool verify

Oracle GPnP Tool

verify Verify profile signature against wallet certificate

Usage:

“gpnptool verify <switches>”, where switches are:

-p[=profile.xml] GPnP profile name

-w[=file:./] WRL-locator of OracleWallet with crypto keys

-wp=<val> OracleWallet password, optional

-wu[=owner] Wallet certificate user (enum: owner,peer,pa)

-t[=3] Trace level (min..max=0..7), optional

-f=<val> Command file name, optional

-? Print verb help and exit

– To check whether the GPnPD service is available locally, use gpnptool lfind

# gpnptool lfind

Success. Local gpnpd found.

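'gpnptool get' returns the local profile. If gpnptool lfind or get hangs, the hang information from the client and the GPnPD logs in Grid_home/log/<hostname>/gpnpd will be very helpful for further troubleshooting.

– To check that a remote GPnPD is responsive, the 'find' option is very helpful: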

# gpnptool find -h=stnsp006

Found 1 instances of service 'gpnp'. mdns:service:gpnp._tcp.local.://stnsp006:17452/agent=gpnpd,cname=stnsp0506,host=stnsp006,pid=13133/gpnpd h:stnsp006 c:stnsp0506

If the above operation hangs or returns an error, check the Grid_home/log/<hostname>/mdnsd/*.log files and the gpnpd logs.

– To check that all nodes are responsive, run gpnptool find -c=<clustername>

# gpnptool find -c=stnsp0506

Found 2 instances of service 'gpnp'.

mdns:service:gpnp._tcp.local.://stnsp005:23810/agent=gpnpd,cname=stnsp0506,host=stnsp005,pid=12408/gpnpd h:stnsp005 c:stnsp0506

mdns:service:gpnp._tcp.local.://stnsp006:17452/agent=gpnpd,cname=stnsp0506,host=stnsp006,pid=13133/gpnpd h:stnsp006 c:stnsp0506
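On larger clusters it helps to turn this output into a list that can be compared with the expected node list. A minimal Python sketch, assuming the mdns:service:gpnp endpoint format shown above; gpnpd_endpoints is a hypothetical helper:

```python
import re

ENDPOINT = re.compile(
    r"mdns:service:gpnp\._tcp\.local\.://(?P<host>[^:/\s]+):(?P<port>\d+)/"
    r"\S*?cname=(?P<cluster>[^,\s]+),host=[^,\s]+,pid=(?P<pid>\d+)"
)

def gpnpd_endpoints(find_output: str) -> list:
    """List (host, port, cluster, pid) for every gpnpd in 'gpnptool find' output."""
    return [(m["host"], int(m["port"]), m["cluster"], int(m["pid"]))
            for m in ENDPOINT.finditer(find_output)]
```

For example, {"stnsp005", "stnsp006"} - {e[0] for e in gpnpd_endpoints(output)} yields the nodes whose GPnPD did not answer.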

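A copy of the GPnP profile is stored in the local OLR and in the OCR. If the profile is lost or corrupted, GPnPD recreates it from the backup.

GNS performs name resolution within the cluster. For performance reasons, GNS does not always use mDNS.

In 11.2 we support using DHCP for the private interconnect and for almost all virtual IP addresses on the public network. To let clients outside the cluster find the virtual hosts in the cluster, we provide GNS. This works with any higher-level DNS to provide name resolution for external clients.

This section describes how to set up a simple DHCP and GNS configuration. A complex network environment may require a more sophisticated solution. GNS and DHCP must be configured before the Grid Infrastructure installation.

What GNS provides

DHCP provides dynamic configuration of a host's IP address, but it does not provide good names for external clients to use, which is why it has been rare in server environments. In Oracle 11.2 Clusterware, we provide our own name resolution service and hook it into DNS so that the names are visible to clients.

Setting up the network configuration

For GNS to work for clients, the higher-level DNS must be configured to delegate a subdomain to the cluster, and the cluster must run GNS on an address known to the DNS. The GNS address is maintained as a static VIP configured in the cluster. The GNS daemon follows that VIP around the cluster and serves names in the subdomain.

Four things need to be configured:

Obtain an IP address for the GNS-VIP

Request an IP address from the network administrator to be used as the GNS-VIP. This IP address must be registered in the corporate DNS as the GNS-VIP for the given cluster, for example strdv0108-gns.mycorp.com. After the clusterware is installed, this address will be managed by the clusterware.

Create an entry in the appropriate DNS zone file in the following format: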

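# Delegate to gns on strdv0108

strdv0108.mycorp.com NS strdv0108-gns.mycorp.com

# Let the world know to go to the GNS vip

strdv0108-gns.mycorp.com A 10.9.8.7

Here, the subdomain is strdv0108.mycorp.com, the name assigned to the GNS VIP is strdv0108-gns.mycorp.com (corresponding to a static IP address), and the GNS daemon listens on the default port 53.

Note: this does not establish an address for the name strdv0108.mycorp.com; it creates a way to resolve names within the subdomain, such as clusterNode1-VIP.strdv0108.mycorp.com.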
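Which names end up at GNS is decided purely by DNS suffix. A minimal Python sketch of that rule, assuming the example names above; is_gns_host_name is a hypothetical helper. It returns True only for names strictly below the delegated subdomain; the GNS VIP's own name lives in the parent zone and is answered by the corporate DNS, and the subdomain apex itself gets no address.

```python
def is_gns_host_name(fqdn: str, gns_subdomain: str) -> bool:
    """True if fqdn is a host name strictly below the subdomain delegated to GNS."""
    name = fqdn.rstrip(".").lower()
    zone = gns_subdomain.rstrip(".").lower()
    return name.endswith("." + zone)

print(is_gns_host_name("clusterNode1-VIP.strdv0108.mycorp.com", "strdv0108.mycorp.com"))  # True
print(is_gns_host_name("strdv0108-gns.mycorp.com", "strdv0108.mycorp.com"))               # False
```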

DHCP

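A host requests an IP address by sending a broadcast message on the hardware network. A DHCP server can respond to the request and return an address, along with other information such as which gateway to use, which DNS servers to use, which domain name to use, which NTP servers to use, and so on.

When we obtain addresses from DHCP on the public network, we get several IP addresses:

The GNS VIP cannot be obtained from DHCP, because it must be known in advance; therefore it must be assigned statically.

The DHCP configuration file is /etc/dhcp.conf.

Take the following configuration as an example:

/etc/dhcp.conf would contain something similar to: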

subnet 10.228.212.0 netmask 255.255.252.0
{
  default-lease-time 43200;
  max-lease-time 86400;
  option subnet-mask 255.255.252.0;
  option broadcast-address 10.228.215.255;
  option routers 10.228.212.1;
  option domain-name-servers M.N.P.Q, W.X.Y.Z;
  option domain-name "strdv0108.mycorp.com";
  pool
  {
    range 10.228.212.10 10.228.215.254;
  }
}
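The numbers in such a declaration must agree with each other: 255.255.252.0 is a /22, so 10.228.212.0 through 10.228.215.255 is one subnet, and the pool must sit inside it without covering the router. A minimal sanity check with Python's standard ipaddress module; check_dhcp_pool is a hypothetical helper that only reads the values you pass in:

```python
import ipaddress

def check_dhcp_pool(subnet: str, netmask: str, broadcast: str, router: str,
                    first: str, last: str) -> dict:
    """Cross-check the values of a dhcpd subnet declaration like the one above."""
    net = ipaddress.IPv4Network(f"{subnet}/{netmask}")
    lo, hi = ipaddress.IPv4Address(first), ipaddress.IPv4Address(last)
    gw = ipaddress.IPv4Address(router)
    return {
        "prefix": net.prefixlen,
        "broadcast_ok": net.broadcast_address == ipaddress.IPv4Address(broadcast),
        "range_in_subnet": lo in net and hi in net and lo <= hi,
        "router_outside_pool": not (lo <= gw <= hi),
        "pool_size": int(hi) - int(lo) + 1,
    }

print(check_dhcp_pool("10.228.212.0", "255.255.252.0", "10.228.215.255",
                      "10.228.212.1", "10.228.212.10", "10.228.215.254"))
# {'prefix': 22, 'broadcast_ok': True, 'range_in_subnet': True, 'router_outside_pool': True, 'pool_size': 1013}
```

The same "outside the pool" test applies to any statically assigned address in that subnet.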

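Name resolution

/etc/resolv.conf must contain nameserver entries that can resolve the corporate DNS servers, and the total timeout period configured must be less than 30 seconds. For example:

/etc/resolv.conf:

options attempts:2

options timeout:1

search us.mycorp.com mycorp.com

nameserver 130.32.234.42

nameserver 133.2.2.15

/etc/nsswitch.conf controls the order of name service lookups. In some system configurations, the Network Information System (NIS) can cause problems when resolving the Oracle SCAN. It is recommended to place the NIS entry at the end of the search list.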

/etc/nsswitch.conf

hosts: files dns nis

See: Oracle Grid Infrastructure Installation Guide, "DNS Configuration for Domain Delegation to Grid Naming Service" for more information.

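In 11.2, GNS is managed by the clusterware agent orarootagent, which starts, stops, and checks GNS. GNS is added to the OCR and to the cluster with the command srvctl add gns -d <mycluster.company.com>.

When the GNS server starts, it retrieves from the OCR the name of the subdomain to be served and starts the threads needed to serve it. Once all threads are running, the first thing the GNS server does is a self-check to see whether name resolution works: a client API call registers a dummy name and address, and the server then tries to resolve that name. If resolution succeeds and the address matches the dummy address, the self-check succeeds and a message is written to alert<hostname>.log. The self-check is performed only once, and the GNS server keeps running even if the test fails.

The default trace location for the GNS server is Grid_home/log/<hostname>/gnsd/. Trace entries have the following format: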

<Time stamp>: [GNS][Thread ID]<Thread name>::<function>:<message>

2009-09-21 10:33:14.344: [GNS][3045873888] Resolve::clsgnmxInitialize: initializing mutex 0x86a7770 (SLTS 0x86a777c).
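Splitting entries into these fields makes it easy to filter gnsd traces by thread or function. A minimal Python sketch, assuming exactly the format shown above; parse_gns_trace is a hypothetical helper:

```python
import re

GNS_TRACE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3}): \[GNS\]\[(?P<tid>\d+)\]\s*"
    r"(?P<thread>[^:\s]+)::(?P<function>[^:\s]+):\s*(?P<message>.*)$"
)

def parse_gns_trace(line: str):
    """Split a gnsd trace entry into timestamp, thread id/name, function and message."""
    m = GNS_TRACE.match(line)
    return m.groupdict() if m else None
```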

The GNS agent orarootagent periodically checks the GNS server by querying its status.

To see whether the agent has successfully advertised with GNS, run:

# grep -i 'updat.*gns' Grid_home/log/<hostname>/agent/crsd/orarootagent_root/orarootagent_*

orarootagent_root.log:2009-10-07 10:17:23.513: [ora.gns.vip] [check] Updating GNS with stnsp0506-gns-vip 10.137.13.245

orarootagent_root.log:2009-10-07 10:17:23.540: [ora.scan1.vip] [check] Updating GNS with stnsp0506-scan1-vip 10.137.12.200

orarootagent_root.log:2009-10-07 10:17:23.562: [ora.scan2.vip] [check] Updating GNS with stnsp0506-scan2-vip 10.137.8.17

orarootagent_root.log:2009-10-07 10:17:23.580: [ora.scan3.vip] [check] Updating GNS with stnsp0506-scan3-vip 10.137.12.214

orarootagent_root.log:2009-10-07 10:17:23.597: [ora.stnsp005.vip] [check] Updating GNS with stnsp005-vip 10.137.12.228

orarootagent_root.log:2009-10-07 10:17:23.615: [ora.stnsp006.vip] [check] Updating GNS with stnsp006-vip 10.137.12.226
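To compare what was advertised with what clients actually resolve, the grep output can be turned into a name-to-address map. A minimal Python sketch, assuming the "Updating GNS with <name> <ip>" format above; advertised is a hypothetical helper that keeps the last update seen for each name:

```python
import re

UPDATE = re.compile(
    r"\[(?P<res>ora\.[^\]]+)\] \[check\] Updating GNS with (?P<name>\S+) (?P<ip>\d+\.\d+\.\d+\.\d+)"
)

def advertised(lines) -> dict:
    """Map each advertised name to (resource, IP), keeping the last update seen."""
    result = {}
    for line in lines:
        m = UPDATE.search(line)
        if m:
            result[m["name"]] = (m["res"], m["ip"])
    return result
```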

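The command-line interface for interacting with GNS is srvctl (the only supported way). crsctl can stop and start ora.gns, but this is not supported unless explicitly requested by Development.

Use the following to perform GNS operations:

# srvctl {start|stop|modify|etc.} gns …

Start GNS

# srvctl start gns [-l <log_level>]   (where -l is the level of logging that GNS should run with)

Stop GNS

# srvctl stop gns

Advertise a name and address

# srvctl modify gns -N <name> -A <address>

The default GNS server logging level is 0, which can be seen easily with ps -ef | grep gnsd.bin:

/scratch/grid_home_11.2/bin/gnsd.bin -trace-level 0 -ip-address 10.137.13.245 -startup-endpoint ipc://GNS_stnsp005_31802_429f8c0476f4e1

When debugging the GNS server, you may need to raise the logging level. First stop GNS with srvctl stop gns, then restart it with srvctl start gns -v -l 5. Only root can stop and start GNS.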

Usage: srvctl start gns [-v] [-l <log_level>] [-n <node_name>]

-v Verbose output

-l <log_level> Specify the level of logging that GNS should run with.

-n <node_name> Node name

-h Print usage

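The trace level ranges from 0 to 6. Level 5 should be sufficient in all cases; setting level 6 is not recommended, because gnsd would consume a lot of CPU.

In 11.2.0.1, because of bug 8705125, the default GNS server logging level after the initial installation is 6. Stop and restart GNS with 'srvctl stop / start' to set the logging level back to 0. This only stops and starts gnsd.bin and has no other impact on the running cluster.

Use srvctl to view the current GNS configuration: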

srvctl config gns -a

GNS is enabled.

GNS is listening for DNS server requests on port 53

GNS is using port 5353 to connect to mDNS

GNS status: OK

Domain served by GNS: stnsp0506.oraclecorp.com

GNS version: 11.2.0.1.0

GNS VIP network: ora.net1.network

Starting with 11.2.0.2, the -l option is very helpful for debugging GNS.

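Grid Interprocess Communication (GIPC) is a general-purpose communication facility that replaces CLSC/NS. It provides full control of the communication stack from the operating system up to any client. The dependency on NS that existed before 11.2 has been removed, but CLSC clients (mainly from 11.1) remain for backward compatibility.

GIPC can support multiple communication types: CLSC, TCP, UDP, IPC, and GIPC.

The listening endpoint configuration for GIPC is somewhat different. The private/cluster interconnects are now defined in the GPnP profile.

The requirement for the same interfaces to exist with the same name on all nodes is more relaxed, as long as communication can be established. The private and public network configuration in the GPnP profile looks like this:

<gpnp:Network id="net1" IP="10.137.8.0" Adapter="eth0" Use="public"/>

<gpnp:Network id="net2" IP="10.137.20.0" Adapter="eth2" Use="cluster_interconnect"/>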

Permanent link to this article: https://www.askmac.cn/archives/oracle-clusterware-11-2.html

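Logs and diagnostics

By default, GIPC tracing only outputs errors; the default trace level ranges from 0 to 2 depending on the component. To debug GIPC-related problems, raise the trace level as described below.

Setting the trace level with crsctl

Use crsctl to set the GIPC trace level for the different components.

For example:

# crsctl set log css COMMCRS:abcd

Where

To change only the GIPC trace level, leaving the others at the default of 2, run:

# crsctl set log css COMMCRS:2242

To turn on GIPC tracing for all components (NM, GM, etc.), set:

# crsctl set log css COMMCRS:3

or

# crsctl set log css COMMCRS:4

At level 4 a large amount of trace output is generated, so ocssd.log wraps around quickly.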

Setting the trace level with GIPC_TRACE_LEVEL and GIPC_FIELD_LEVEL

Another option is to set a pair of environment variables for the component using GIPC as communication e.g. ocssd. In order to achieve this, a wrapper script is required. Taking ocssd as an example, the wrapper script is Grid_home/bin/ocssd that invokes ‘ocssd.bin’. Adding the variables below to the wrapper script (under the LD_LIBRARY_PATH) and restarting ocssd will enable GIPC tracing. To restart ocssd.bin, perform a crsctl stop/start cluster.

case `/bin/uname` in
Linux)
  LD_LIBRARY_PATH=/scratch/grid_home_11.2/lib
  export LD_LIBRARY_PATH
  export GIPC_TRACE_LEVEL=4
  export GIPC_FIELD_LEVEL=0x80
  # forcibly eliminate LD_ASSUME_KERNEL to ensure NPTL where available
  LD_ASSUME_KERNEL=
  export LD_ASSUME_KERNEL
  LOGGER="/usr/bin/logger"
  if [ ! -f "$LOGGER" ];then
    LOGGER="/bin/logger"
  fi
  LOGMSG="$LOGGER -puser.err"
  ;;

This sets the trace level to 4. The environment variables take the following values:

GIPC_TRACE_LEVEL=3 (valid range [0-6])

GIPC_FIELD_LEVEL=0x80 (only 0x80 is supported)

Setting the trace level with GIPC_COMPONENT_TRACE

For finer-grained tracing, use the GIPC_COMPONENT_TRACE environment variable. The defined components are GIPCGEN, GIPCTRAC, GIPCWAIT, GIPCXCPT, GIPCOSD, GIPCBASE, GIPCCLSA, GIPCCLSC, GIPCEXMP, GIPCGMOD, GIPCHEAD, GIPCMUX, GIPCNET, GIPCNULL, GIPCPKT, GIPCSMEM, GIPCHAUP, GIPCHALO, GIPCHTHR, GIPCHGEN, GIPCHLCK, GIPCHDEM, GIPCHWRK

For example:

# export GIPC_COMPONENT_TRACE=GIPCWAIT:4,GIPCNET:3

The trace output looks like this:

2009-10-23 05:47:40.952: [GIPCMUX][2993683344]gipcmodMuxCompleteSend: [mux] Completed send req 0xa481c0e0 [00000000000093a6] { gipcSendRequest : addr ”, data 0xa481c830, len 104, olen 104, parentEndp 0x8f99118, ret gipcretSuccess (0), objFlags 0x0, reqFlags 0x2 }

2009-10-23 05:47:40.952: [GIPCWAIT][2993683344]gipcRequestSaveInfo: [req] Completed req 0xa481c0e0 [00000000000093a6] { gipcSendRequest : addr ", data 0xa481c830, len 104, olen 104, parentEndp 0x8f99118, ret gipcretSuccess (0), objFlags 0x0, reqFlags 0x4 }
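A typo in GIPC_COMPONENT_TRACE is easy to miss until a restart produces no extra tracing, so it can be worth validating the value before exporting it in the wrapper script. A minimal Python sketch, using the component list above; the 0-6 range check is an assumption borrowed from GIPC_TRACE_LEVEL, and parse_component_trace is a hypothetical helper:

```python
KNOWN_COMPONENTS = frozenset(
    "GIPCGEN GIPCTRAC GIPCWAIT GIPCXCPT GIPCOSD GIPCBASE GIPCCLSA GIPCCLSC "
    "GIPCEXMP GIPCGMOD GIPCHEAD GIPCMUX GIPCNET GIPCNULL GIPCPKT GIPCSMEM "
    "GIPCHAUP GIPCHALO GIPCHTHR GIPCHGEN GIPCHLCK GIPCHDEM GIPCHWRK".split()
)

def parse_component_trace(value: str) -> dict:
    """Parse e.g. 'GIPCWAIT:4,GIPCNET:3' into {'GIPCWAIT': 4, 'GIPCNET': 3}."""
    levels = {}
    for item in value.split(","):
        component, sep, level = item.strip().partition(":")
        if not sep or component not in KNOWN_COMPONENTS:
            raise ValueError(f"unknown or malformed entry: {item!r}")
        n = int(level)  # raises ValueError for a non-numeric level
        if not 0 <= n <= 6:  # assumption: same valid range as GIPC_TRACE_LEVEL
            raise ValueError(f"level out of range in {item!r}")
        levels[component] = n
    return levels
```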

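Currently only some layers use GIPC: CSS, GPnPD, GNSD, and a small part of MDNSD.

Others, such as CRS/EVM/OCR/CTSS, use GIPC starting with 11.2.0.2. Setting the GIPC trace level is important for debugging connectivity problems.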

The CTSS is a new feature in Oracle Clusterware 11g release 2 (11.2), which takes care of time synchronization in a cluster, in case the network time protocol daemon is not running or is not configured properly.

The CTSS synchronizes the time on all of the nodes in a cluster to match the time setting on the CTSS master node. When Oracle Clusterware is installed, the Cluster Time Synchronization Service (CTSS) is installed as part of the software package. During installation, the Cluster Verification Utility (CVU) determines if the network time protocol (NTP) is in use on any nodes in the cluster. On Windows systems, CVU checks for NTP and Windows Time Service.

If Oracle Clusterware finds that NTP is running or that NTP has been configured, then NTP is not affected by the CTSS installation. Instead, CTSS starts in observer mode (this condition is logged in the alert log for Oracle Clusterware). CTSS then monitors the cluster time and logs alert messages, if necessary, but CTSS does not modify the system time. If Oracle Clusterware detects that NTP is not running and is not configured, then CTSS designates one node as a clock reference, and synchronizes all of the other cluster member time and date settings to those of the clock reference.

Oracle Clusterware considers an NTP installation to be misconfigured if one of the following is true:

To check whether CTSS is running in active or observer mode, run crsctl check ctss:

CRS-4700: The Cluster Time Synchronization Service is in Observer mode.

or

CRS-4701: The Cluster Time Synchronization Service is in Active mode.

CRS-4702: Offset from the reference node (in msec): 100
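For monitoring scripts, the mode and offset can be extracted from these messages. A minimal Python sketch, assuming the CRS-4700/4701/4702 message texts shown above; ctss_mode is a hypothetical helper:

```python
import re

def ctss_mode(output: str) -> dict:
    """Interpret 'crsctl check ctss' output (CRS-4700 / CRS-4701 / CRS-4702)."""
    if "CRS-4700" in output:
        mode = "observer"
    elif "CRS-4701" in output:
        mode = "active"
    else:
        raise ValueError("no CTSS mode message found")
    m = re.search(r"CRS-4702: Offset from the reference node \(in msec\): (-?\d+)", output)
    return {"mode": mode, "offset_ms": int(m.group(1)) if m else None}
```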

The tracing for the ctssd daemon is written to the octssd.log. The alert log (alert<hostname>.log) also contains information about the mode in which CTSS is running.

[ctssd(13936)]CRS-2403:The Cluster Time Synchronization Service on host node1 is in observer mode.

[ctssd(13936)]CRS-2407:The new Cluster Time Synchronization Service reference node is host node1.

[ctssd(13936)]CRS-2401:The Cluster Time Synchronization Service started on host node1.

CVU runs pre-install checks automatically during installation, for example: cluvfy stage -pre crsinst <>

This step will check and make sure that the operating system time synchronization software (e.g. NTP) is either properly configured and running on all cluster nodes, or on none of the nodes.

During the post-install check, CVU runs cluvfy comp clocksync -n all. If CTSS is in observer mode, CVU performs the configuration check described above. If CTSS is in active mode, it verifies that the time difference is within the limit.

When CTSS comes up as part of the Clusterware startup, it performs a step time synchronization and, if everything goes well, publishes its state as ONLINE. ora.ctssd has a start dependency on ora.cssd but no stop dependency. If CTSSD fails for some reason (for example, it dumps core or exits), nothing else should therefore be affected.

The chart below shows the start dependencies built on ora.ctssd for other resources.

crsctl stat res ora.ctssd -init -t

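--------------------------------------------------------------------------------
NAME           TARGET  STATE        SERVER                   STATE_DETAILS
--------------------------------------------------------------------------------
ora.ctssd
      1        ONLINE  ONLINE       node1                    OBSERVER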

Debugging mdnsd

To capture mdnsd network traffic, use the mDNS Network Monitor located in Grid_home/bin:

# mkdir Grid_home/log/$HOSTNAME/netmon

# Grid_home/bin/oranetmonitor &

The output from oranetmonitor will be captured in netmonOUT.log in the above directory.

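Storing the OCR and voting files in ASM removes the need for a third-party volume manager. It also avoids the complex disk partitioning for the OCR and voting files when installing Oracle Clusterware.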

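ASM manages voting files differently from the other files it stores. When voting files are placed on disks in an ASM disk group, Oracle Clusterware records exactly which disks in which disk group hold them. If ASM fails, CSS can still access the voting files. If you choose to store voting files in ASM, all voting files must be stored in ASM. We do not support placing some voting files in ASM and others on NAS.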

The number of voting files you can store in a disk group depends on the redundancy of the ASM disk group.

By default, Oracle ASM puts each voting file in its own failure group within the disk group. A failure group is a subset of the disks in a disk group that could fail at the same time because they share hardware, e.g. a disk controller. The failure of common hardware must be tolerated. For example, four drives that are in a single removable tray of a large JBOD (Just a Bunch of Disks) array are in the same failure group, because the tray could be removed and all four drives would fail at the same time. Conversely, drives in the same cabinet can be in multiple failure groups if the cabinet has redundant power and cooling, so that it is not necessary to protect against failure of the entire cabinet. However, Oracle ASM mirroring is not intended to protect against a fire in the computer room that destroys the entire cabinet. If voting files are stored on Oracle ASM with Normal or High redundancy and the storage hardware in one failure group fails, Oracle ASM recovers the voting file in an unaffected failure group, provided another disk is available in the disk group in that failure group.
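These limits can be expressed as a small lookup. Oracle documents one voting file for an external-redundancy disk group, three for normal redundancy and five for high redundancy. Because each voting file sits in its own failure group, the disk group needs at least that many failure groups. The helper names below are hypothetical:

```python
# Voting files per ASM disk group, by redundancy (documented 11.2 values).
VOTING_FILES_BY_REDUNDANCY = {"EXTERNAL": 1, "NORMAL": 3, "HIGH": 5}


def voting_files_for(redundancy: str) -> int:
    """Number of voting files CSS places in a disk group of this redundancy."""
    return VOTING_FILES_BY_REDUNDANCY[redundancy.upper()]


def enough_failure_groups(redundancy: str, failure_groups: int) -> bool:
    """Each voting file lives in its own failure group, so the disk group
    needs at least as many failure groups as voting files."""
    return failure_groups >= voting_files_for(redundancy)


for level in ("external", "normal", "high"):
    print(level, voting_files_for(level))
```

For example, a normal-redundancy disk group with only two failure groups cannot hold its three voting files.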

$ crsctl query css votedisk

##  STATE    File Universal Id                File Name Disk group
--  -----    -----------------                --------- ---------

With voting files stored in ASM, an existing voting file that becomes corrupted may be deleted and added back automatically.

$ crsctl replace css votedisk /nas/vdfile1 /nas/vdfile2 /nas/vdfile3

或

$ crsctl replace css votedisk +OTHERDG

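In an extended Oracle Clusterware / extended RAC configuration, the third voting file must be stored at a third site to protect against a data center outage. We support placing the third voting file on standard NFS. For more information, see the appendix "Oracle Clusterware 11g release 2 (11.2) – Using standard NFS to support a third voting file on a stretch cluster configuration".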

See also: Oracle Clusterware Administration and Deployment Guide, "Voting file, Oracle Cluster Registry, and Oracle Local Registry" for more information. For information about extended clusters and how to configure the quorum voting file, see the Appendix.

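In 11.2, the OCR can be stored in ASM. The ASM partnership and status table (PST) is replicated on multiple disks and is extended to store the OCR. As a result, the OCR can tolerate the loss of the same number of disks as the underlying disk group. It can also be relocated or rebalanced in response to disk failures.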

To store the OCR in a disk group, the disk group has a special file type called 'ocr'.

The default location of the OCR configuration file is /etc/oracle/ocr.loc:

# cat /etc/oracle/ocr.loc

ocrconfig_loc=+DATA

local_only=FALSE
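ocr.loc is a plain key=value file, so a script can inspect it easily. The following is a minimal sketch. It assumes the file content is passed in as text; in practice you would read /etc/oracle/ocr.loc. Only the two keys shown above are interpreted. Treating a leading + as an ASM disk group is an assumption based on the +DATA example. The helper names are hypothetical.

```python
def parse_loc_file(text: str) -> dict:
    """Parse key=value lines such as those in /etc/oracle/ocr.loc."""
    entries = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blanks, comments and anything that is not key=value
        key, _, value = line.partition("=")
        entries[key.strip()] = value.strip()
    return entries


def ocr_in_asm(entries: dict) -> bool:
    # Assumption: an ASM location is written as +DISKGROUP, as in "+DATA".
    return entries.get("ocrconfig_loc", "").startswith("+")


def is_local_only(entries: dict) -> bool:
    return entries.get("local_only", "").upper() == "TRUE"


loc = parse_loc_file("ocrconfig_loc=+DATA\nlocal_only=FALSE\n")
print(loc, ocr_in_asm(loc), is_local_only(loc))
```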

From a user and maintenance perspective, the rest remains the same. The OCR can only be configured in ASM once the cluster has been completely migrated to 11.2 (crsctl query crs activeversion >= 11.2.0.1.0). We still support mixed configurations, so one OCR can be stored in ASM and another on a supported NAS device; 11.2.0.1 supports up to 5 OCR locations. We no longer support raw or block devices for either the OCR or the voting files.

The OCR disk group is mounted automatically when the ASM instance starts. OHASD maintains the dependency between CRSD and ASM.

OCRCHECK

ocrcheck has some small enhancements, such as the -config option, which checks only the configuration. Run ocrcheck as root; otherwise the logical corruption check does not run. To check OLR data, use the -local option.

Usage: ocrcheck [-config] [-local]

Shows OCR version, total, used and available space
Performs OCR block integrity (header and checksum) checks
Performs OCR logical corruption checks (11.1.0.7)

'-config' checks just the configuration (11.2)
'-local' checks the OLR (default: OCR)

Can be run when stack is up or down

The output looks like this:

# ocrcheck
Status of Oracle Cluster Registry is as follows :
         Version                  :          3
         Total space (kbytes)     :     262120
         Used space (kbytes)      :       3072
         Available space (kbytes) :     259048
         ID                       :  701301903
         Device/File Name         :      +DATA
                                    Device/File integrity check succeeded
         Device/File Name         : /nas/cluster3/ocr3
                                    Device/File integrity check succeeded
         Device/File Name         : /nas/cluster5/ocr1
                                    Device/File integrity check succeeded
         Device/File Name         : /nas/cluster2/ocr2
                                    Device/File integrity check succeeded
         Device/File Name         : /nas/cluster4/ocr4
                                    Device/File integrity check succeeded
         Cluster registry integrity check succeeded
         Logical corruption check succeeded
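When you check many clusters, you can reduce this output to a few flags. The sketch below is a hypothetical parser keyed on the labels shown above. It confirms that used plus available space equals the total; in this example, 3072 + 259048 = 262120. It also confirms that every listed device passed its integrity check. The logical corruption line only appears when ocrcheck runs as root.

```python
import re


def summarize_ocrcheck(output: str) -> dict:
    """Reduce `ocrcheck` output to a few health flags (hypothetical helper)."""

    def kbytes(label: str):
        match = re.search(re.escape(label) + r"\s*\(kbytes\)\s*:\s*(\d+)", output)
        return int(match.group(1)) if match else None

    total, used, avail = kbytes("Total space"), kbytes("Used space"), kbytes("Available space")
    devices = re.findall(r"Device/File Name\s*:\s*(\S+)", output)
    passed = output.count("Device/File integrity check succeeded")
    return {
        "total_kb": total,
        "used_kb": used,
        "available_kb": avail,
        # Space figures should add up when the output is complete.
        "space_consistent": None not in (total, used, avail) and used + avail == total,
        "devices": devices,
        "all_devices_ok": bool(devices) and passed == len(devices),
        "registry_ok": "Cluster registry integrity check succeeded" in output,
        # Only printed when ocrcheck runs as root.
        "logical_ok": "Logical corruption check succeeded" in output,
    }
```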

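The OLR is similar in structure to the OCR. It is a node-local repository managed by OHASD. Its configuration data belongs only to the local node and is not shared with other nodes.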

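The OLR location is recorded in /etc/oracle/olr.loc (on Linux) or in a similar location on other operating systems. The default location after installing Oracle Clusterware is Grid_home/cdata/<hostname>.olr.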

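The OLR stores the information OHASD needs to start or join a cluster. This includes data about GPnP wallets, the cluster configuration and version information.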

OLR keys have the same properties as OCR keys, and you use the same tools to check or dump them.

To see the location of the OLR, run:

# ocrcheck -local -config

Oracle Local Registry configuration is : Device/File Name : Grid_home/cdata/node1.olr

To dump the content of the OLR, run:

# ocrdump -local -stdout (or filename)

Run ocrdump -h to get the usage.

See also: Oracle Clusterware Administration and Deployment Guide, "Managing the Oracle Cluster Registry and Oracle Local Registries" for more information about using ocrconfig and ocrcheck.

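OCR operations can only be performed once ASM has mounted the disk group. A forced dismount of the OCR disk group or a forced shutdown of the ASM instance results in errors.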

While the stack is running, CRSD keeps reading and writing the OCR.

OHASD maintains the resource dependency and will bring up ASM with the required diskgroup mounted before it starts CRSD.

Once ASM is up with the diskgroup mounted, the usual ocr* commands (ocrcheck, ocrconfig, etc.) can be used.

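An attempt to shut down an ASM instance that has an active OCR fails with ORA-15097. An active OCR means CRSD is running on this node. To see which clients are accessing ASM, run: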

asmcmd lsct (v$asm_client)

DB_Name Status Software_Version Compatible_version Instance_Name Disk_Group

+ASM CONNECTED 11.2.0.1.0 11.2.0.1.0 +ASM2 DATA

asmcmd lsof

DB_Name Instance_Name Path

+ASM +ASM2 +data.255.4294967295

+data.255 identifies the OCR stored in ASM.
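A script can find the OCR in asmcmd lsof output by looking for file number 255 in the Path column. The following is a minimal sketch. It assumes whitespace-separated columns, as in the listing above. `ocr_files_from_lsof` is a hypothetical helper.

```python
def ocr_files_from_lsof(output: str) -> list:
    """Return Path entries whose ASM file number is 255 (the OCR)."""
    hits = []
    for line in output.splitlines():
        cols = line.split()
        if len(cols) < 3 or cols[0] == "DB_Name":
            continue  # blank line or header row
        path = cols[-1]
        parts = path.split(".")  # +<diskgroup>.<file number>.<incarnation>
        if path.startswith("+") and len(parts) >= 2 and parts[1] == "255":
            hits.append(path)
    return hits


listing = """DB_Name Instance_Name Path
+ASM +ASM2 +data.255.4294967295
"""
print(ocr_files_from_lsof(listing))  # ['+data.255.4294967295']
```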

Some errors were generated.

The ASM Diskgroup Resource

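When a disk group is created, a disk group resource named ora.<DGNAME>.dg is created automatically with its state set to ONLINE. Because this is a CRS-managed resource, its state is set to OFFLINE if the disk group is dismounted. When a disk group is dropped, its disk group resource is deleted as well.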
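The following toy model summarizes the life cycle described above. It is purely illustrative Python and does not reflect how CRS stores resources. Upper-casing the disk group name is an assumption based on the ora.DATA.dg example. The class and function names are hypothetical.

```python
def dg_resource_name(diskgroup: str) -> str:
    # Assumption: the name is upper-cased, matching the ora.DATA.dg example.
    return f"ora.{diskgroup.upper()}.dg"


class DiskGroupResources:
    """Toy model of the disk group resource life cycle (not Oracle code)."""

    def __init__(self) -> None:
        self.state = {}

    def create(self, dg: str) -> None:
        self.state[dg_resource_name(dg)] = "ONLINE"   # created with the disk group

    def dismount(self, dg: str) -> None:
        self.state[dg_resource_name(dg)] = "OFFLINE"  # resource follows the mount state

    def mount(self, dg: str) -> None:
        self.state[dg_resource_name(dg)] = "ONLINE"

    def drop(self, dg: str) -> None:
        self.state.pop(dg_resource_name(dg), None)    # removed with the disk group


crs = DiskGroupResources()
crs.create("data")
crs.dismount("data")
print(crs.state)  # {'ora.DATA.dg': 'OFFLINE'}
```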

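A dependency between a database and a disk group is created automatically when the database accesses ASM files. However, the dependency is not removed automatically when the database no longer uses the ASM files or the ASM files are removed. In that case, use the srvctl command-line utility to remove it.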

Typical success, failure and warning messages in the ASM alert.log:

Success:

NOTE: diskgroup resource ora.DATA.dg is offline

NOTE: diskgroup resource ora.DATA.dg is online

Failure:

ERROR: failed to online diskgroup resource ora.DATA.dg

ERROR: failed to offline diskgroup resource ora.DATA.dg

Warning:

WARNING: failed to online diskgroup resource ora.DATA.dg (unable to communicate with CRSD/OHASD)

This warning may appear when the stack is started.

WARNING: unknown state for diskgroup resource ora.DATA.dg
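To triage a large ASM alert.log, you can match the three message families above with regular expressions. The following is a minimal sketch. The patterns cover only the messages quoted here, and `classify` is a hypothetical name.

```python
import re
from typing import Optional, Tuple

# One pattern per message family quoted above; group 1 is the resource name.
_RULES = [
    ("success", re.compile(r"NOTE: diskgroup resource (\S+) is (?:online|offline)")),
    ("failure", re.compile(r"ERROR: failed to (?:online|offline) diskgroup resource (\S+)")),
    ("warning", re.compile(r"WARNING: (?:failed to online|unknown state for) diskgroup resource (\S+)")),
]


def classify(line: str) -> Optional[Tuple[str, str]]:
    """Return (category, resource name) for a disk group resource message."""
    for category, pattern in _RULES:
        match = pattern.search(line)
        if match:
            return category, match.group(1)
    return None  # not a disk group resource message
```

A "failure" result points to the CRSD and agent logs listed below. A "warning" seen while the stack is bootstrapping can usually be ignored.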

If an error occurs, look in the alert.log for status messages about the resource operation, such as:

"ERROR": the resource operation failed; check the CRSD log and the agent log for more details:

Grid_home/log/<hostname>/crsd/

Grid_home/log/<hostname>/agent/crsd/oraagent_user/

"WARNING": cannot communicate with CRSD.

You can ignore this warning during bootstrap, when the ASM instance is started and the disk group is mounted before CRSD is up.

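The state of the disk group resource should match the state of the disk group itself. In rare cases the two can be briefly out of sync. Run srvctl to bring them back in sync, or wait a while for the agent to refresh the state. If the mismatch lasts longer, check the CRSD log and the ASM log for more details.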

To enable more comprehensive tracing, use event="39505 trace name context forever, level 1".

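A quorum failure group is a special type of failure group. It does not contain user data and is not considered when determining redundancy requirements.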

To store the OCR or voting files in a disk group, the disk group's COMPATIBLE.ASM compatibility attribute must be set to 11.2 or higher.
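Compatibility attributes are dotted version strings, so comparing them as text can go wrong: '11.10' sorts before '11.2' as a string. The following is a minimal sketch of a numeric comparison. It assumes you have already read the attribute value, for example from V$ASM_ATTRIBUTE. The function names are hypothetical.

```python
def version_tuple(version: str) -> tuple:
    """'11.2' -> (11, 2, 0, 0, 0): numeric fields, zero-padded to five."""
    parts = tuple(int(p) for p in version.strip().split("."))
    return parts + (0,) * (5 - len(parts))


def can_hold_ocr_or_voting_files(compatible_asm: str) -> bool:
    # The disk group's COMPATIBLE.ASM must be 11.2 or higher.
    return version_tuple(compatible_asm) >= version_tuple("11.2")


print(can_hold_ocr_or_voting_files("11.2.0.0"), can_hold_ocr_or_voting_files("11.1"))  # True False
```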

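An extended/stretch cluster, or a configuration with two storage arrays, needs a quorum failure group to hold the third voting file. We do not support creating a quorum failure group during installation.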

To create a disk group with a quorum failure group when a third array is available:

SQL> CREATE DISKGROUP PROD NORMAL REDUNDANCY
  FAILGROUP fg1 DISK '<a disk in SAN1>'
  FAILGROUP fg2 DISK '<a disk in SAN2>'
  QUORUM FAILGROUP fg3 DISK '<another disk or file on a third location>'
  ATTRIBUTE 'compatible.asm' = '11.2.0.0';

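If the disk group was created with ASMCA, Oracle Clusterware automatically changes the CSS voting disk location after a quorum disk is added to the disk group, for example: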

$ crsctl query css votedisk

##  STATE    File Universal Id                File Name Disk group
--  -----    -----------------                --------- ---------

Located 3 voting file(s).

If the disk group was created through SQL*Plus, you must run crsctl replace css votedisk yourself.

Permanent link to this article: https://www.askmac.cn/archives/oracle-clusterware-11-2.html

See also: Oracle Database Storage Administrator's Guide, "Oracle ASM Failure Groups" for more information, and Oracle Clusterware Administration and Deployment Guide, "Voting file, Oracle Cluster Registry, and Oracle Local Registry" for more information about backup and restore and failure recovery.

Oracle recommends storing the ASM SPFILE in a disk group. You cannot create an alias for an existing ASM SPFILE.

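If you do not use a shared Oracle Grid home, the Oracle ASM instance can use a PFILE. The rules for file names, default locations and search order that apply to database initialization parameter files also apply to ASM initialization parameter files.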

ASM searches for its parameter file in the following order:

For example, on Linux the default SPFILE location is in the Oracle Grid home:

$ORACLE_HOME/dbs/spfile+ASM.ora
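The Oracle Database Storage Administrator's Guide describes the search order as follows. ASM first uses the location recorded in the GPnP profile. If none is recorded, it looks for an SPFILE in the ASM instance home, then a PFILE there. Below is a simplified sketch of that order. The function name is hypothetical. init+ASM.ora as the PFILE name is an assumption that mirrors the SPFILE name above. The `exists` parameter lets you test the lookup without real files.

```python
import os
from typing import Callable, Optional


def find_asm_parameter_file(gpnp_location: Optional[str], grid_home: str,
                            exists: Callable[[str], bool] = os.path.exists) -> Optional[str]:
    """Simplified ASM parameter file lookup (hypothetical helper)."""
    # 1. A location recorded in the GPnP profile wins.
    if gpnp_location:
        return gpnp_location
    # 2. Otherwise an SPFILE, then a PFILE, in <Grid home>/dbs.
    dbs = os.path.join(grid_home, "dbs")
    for name in ("spfile+ASM.ora", "init+ASM.ora"):  # init+ASM.ora is an assumed PFILE name
        candidate = os.path.join(dbs, name)
        if exists(candidate):
            return candidate
    return None
```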

Backing Up and Moving an ASM SPFILE

You can back up, copy or move an ASM SPFILE with the ASMCMD spbackup, spcopy or spmove commands. For details on these ASMCMD commands, see the Oracle Database Storage Administrator's Guide.

See also: Oracle Database Storage Administrator's Guide, "Configuring Initialization Parameters for an Oracle ASM Instance" for more information.

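Oracle Clusterware manages applications and processes through the resources you register with the cluster. The number of resources you register depends on your application. An application that consists of a single process is usually represented by a single resource. More complex applications, built from multiple processes or components, may require multiple resources.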

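Generally, all resources are unique, but some resources may share common attributes. Oracle Clusterware uses resource types to organize these similar resources. Using resource types provides these benefits: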

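Every resource registered with Oracle Clusterware must have a specified resource type. In addition to the resource types included with Oracle Clusterware, you can define custom resource types with the crsctl utility. The included resource types are: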

All user-defined resource types must be based, directly or indirectly, on either the local_resource type or the cluster_resource type.
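You can check this rule mechanically by following the BASE_TYPE links in the crsctl stat type output that follows. The following is a minimal sketch. `parse_stat_type`, `base_chain` and `is_valid_user_type` are hypothetical helpers. A type whose base is not listed simply ends the chain.

```python
def parse_stat_type(output: str) -> dict:
    """Map TYPE_NAME -> BASE_TYPE from `crsctl stat type` output."""
    types, current = {}, None
    for raw in output.splitlines():
        line = raw.strip()
        if line.startswith("TYPE_NAME="):
            current = line.split("=", 1)[1]
            types[current] = None
        elif line.startswith("BASE_TYPE=") and current is not None:
            types[current] = line.split("=", 1)[1]
    return types


def base_chain(types: dict, name: str) -> list:
    """Follow BASE_TYPE links from name until a type with no known base."""
    chain, seen = [name], {name}
    while types.get(chain[-1]):
        parent = types[chain[-1]]
        if parent in seen:
            raise ValueError(f"cycle at {parent}")
        chain.append(parent)
        seen.add(parent)
    return chain


def is_valid_user_type(types: dict, name: str) -> bool:
    # Must derive, directly or indirectly, from local_resource or cluster_resource.
    return any(t in ("local_resource", "cluster_resource") for t in base_chain(types, name)[1:])
```

For example, ora.cluster_vip_net1.type resolves through ora.cluster_vip.type and ora.cluster_resource.type to cluster_resource and then resource.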

Run crsctl stat type to list all defined types:

TYPE_NAME=application

BASE_TYPE=cluster_resource

TYPE_NAME=cluster_resource

BASE_TYPE=resource

TYPE_NAME=local_resource

BASE_TYPE=resource

TYPE_NAME=ora.asm.type

BASE_TYPE=ora.local_resource.type

TYPE_NAME=ora.cluster_resource.type

BASE_TYPE=cluster_resource

TYPE_NAME=ora.cluster_vip.type

BASE_TYPE=ora.cluster_resource.type

TYPE_NAME=ora.cluster_vip_net1.type

BASE_TYPE=ora.cluster_vip.type

TYPE_NAME=ora.database.type