之前有同学想要给11gR2的RAC添加LISTENER监听器,查看了listener.ora并发现问题:

[oracle@vrh2 ~]$ lsnrctl status LSNRCTL for Linux: Version 11.2.0.3.0 - Production on 04-DEC-2011 02:51:40 Copyright (c) 1991, 2011, Oracle. All rights reserved. Connecting to (ADDRESS=(PROTOCOL=tcp)(HOST=)(PORT=1521)) STATUS of the LISTENER ------------------------ Alias LISTENER Version TNSLSNR for Linux: Version 11.2.0.3.0 - Production Start Date 02-DEC-2011 05:40:09 Uptime 1 days 21 hr. 11 min. 31 sec Trace Level off Security ON: Local OS Authentication SNMP OFF Listener Parameter File /g01/11.2.0/grid/network/admin/listener.ora Listener Log File /g01/orabase/diag/tnslsnr/vrh2/listener/alert/log.xml Listening Endpoints Summary... (DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=LISTENER))) (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.1.163)(PORT=1521))) (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.1.164)(PORT=1521))) Services Summary... Service "+ASM" has 1 instance(s). Instance "+ASM2", status READY, has 1 handler(s) for this service... Service "VPROD" has 1 instance(s). Instance "VPROD2", status READY, has 1 handler(s) for this service... Service "VPRODXDB" has 1 instance(s). Instance "VPROD2", status READY, has 1 handler(s) for this service... The command completed successfully [oracle@vrh2 ~]$ cat /g01/11.2.0/grid/network/admin/listener.ora LISTENER_SCAN1=(DESCRIPTION=(ADDRESS_LIST=(ADDRESS=(PROTOCOL=IPC)(KEY=LISTENER_SCAN1)))) # line added by Agent LISTENER=(DESCRIPTION=(ADDRESS_LIST=(ADDRESS=(PROTOCOL=IPC)(KEY=LISTENER)))) # line added by Agent ENABLE_GLOBAL_DYNAMIC_ENDPOINT_LISTENER=ON # line added by Agent ENABLE_GLOBAL_DYNAMIC_ENDPOINT_LISTENER_SCAN1=ON # line added by Agent ENABLE_GLOBAL_DYNAMIC_ENDPOINT_LSN_MACLEAN=ON # line added by Agent

以上listener.ora配置文件中的信息是Grid Infrastructure安装过程中Agent自行添加的(During the Grid Infrastructure installation, the (default) node VIP listener is always created referencing the public network),比较难以理解的可能是LISTENER仅指定了PROTOCOL=IPC的信息, 而没有指定监听的地址、端口等信息。

实际上11.2 GI的LISTENER 监听器配置默认受到11.2新引入的endpoints_listener.ora配置文件的管理:

Listener.ora [grid@netrac1 admin]$ more listener.ora LISTENER_SCAN2=(DESCRIPTION=(ADDRESS_LIST=(ADDRESS=(PROTOCOL=IPC)(KEY=LISTENER_SCAN2)))) # line added by Agent LISTENER=(DESCRIPTION=(ADDRESS_LIST=(ADDRESS=(PROTOCOL=IPC)(KEY=LISTENER))))# line added by Agent LISTENER_SCAN1=(DESCRIPTION=(ADDRESS_LIST=(ADDRESS=(PROTOCOL=IPC)(KEY=LISTENER_SCAN1)))) # line added by Agent ENABLE_GLOBAL_DYNAMIC_ENDPOINT_LISTENER_SCAN1=ON # line added by Agent ENABLE_GLOBAL_DYNAMIC_ENDPOINT_LISTENER=ON # line added by Agent ENABLE_GLOBAL_DYNAMIC_ENDPOINT_LISTENER_SCAN2=ON # line added by Agent The ENABLE_GLOBAL_DYNAMIC_ENDPOINT_ parameter is set to allow the listener to accept connections for pre-11.2 databases which did not register the dynamic endpoint. Listener status "listener" showing 1 instance registered, ie instance running on the node [grid@netrac1 admin]$ lsnrctl status listener Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=LISTENER))) STATUS of the LISTENER ------------------------ Alias LISTENER Version TNSLSNR for Linux: Version 11.2.0.1.0 - Production Start Date 15-FEB-2011 10:57:09 Uptime 0 days 0 hr. 0 min. 46 sec Trace Level off Security ON: Local OS Authentication SNMP OFF Listener Parameter File /u01/app/11.2.0/grid/network/admin/listener.ora Listener Log File /u01/app/grid/diag/tnslsnr/netrac1/listener/alert/log.xml Listening Endpoints Summary... (DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=LISTENER))) (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=12.345.678.111)(PORT=1521))) ** Node IP Address ** (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=12.345.678.888)(PORT=1521))) ** Node VIP Address ** Services Summary... Service "v11gr2" has 1 instance(s). Instance "v11gr21", status READY, has 2 handler(s) for this service... The command completed successfully New file for 11.2 called endpoints_listener.ora, showing the Node IP address and Node VIP address. [grid@netrac1 admin]$ more endpoints_listener.ora LISTENER_NETRAC1=(DESCRIPTION=(ADDRESS_LIST=(ADDRESS=(PROTOCOL=TCP)(HOST=netrac1-vip)(PORT=1521)) (ADDRESS=(PROTOCOL=TCP)(HOST=12.345.678.888)(PORT=1521)(IP=FIRST)))) # line added by Agent Endpoints_listener.ora file is there for backward compatibility with pre-11.2 databases. DBCA needs to know the endpoints location to configure database parameters and tnsnames.ora file. It used to use the listener.ora file, 11.2 RAC listener.ora by default only has IPC entries. "Line added by Agent" is the Oraagent is the process updating the listener.ora and endpoints_listener.ora files. Endpoints_listener.ora showing the Node IP address and Node VIP address [grid@netrac2 admin]$ more endpoints_listener.ora LISTENER_NETRAC2=(DESCRIPTION=(ADDRESS_LIST=(ADDRESS=(PROTOCOL=TCP)(HOST=netrac2-vip)(PORT=1521)) (ADDRESS=(PROTOCOL=TCP)(HOST=12.345.678.999) (PORT=1521)(IP=FIRST)))) # line added by Agent

我一开始以为LISTENER默认监听的地址和端口被写到了OCR中,后来用ocrdump转储注册信息发现没有相关记录。 后来才发现原来11.2 GI中监听器的地址和端口信息被移到了 endpoints_listener.ora中, “Line added by Agent”说明是由Oraagent 进程更新的记录。

注意:使用 endpoints_listener.ora的情况 下不应使用lsnrctl管理LISTENER,而需使用srvctl或crsctl工具管理,否则lsnrctl将不会识别endpoints_listener.ora中的信息,造成监听没有在必要地址、端口上工作。如:

[grid@vrh1 admin]$ lsnrctl status LSN_MACLEAN LSNRCTL for Linux: Version 11.2.0.3.0 - Production on 27-NOV-2011 10:45:26 Copyright (c) 1991, 2011, Oracle. All rights reserved. Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=LSN_MACLEAN))) STATUS of the LISTENER ------------------------ Alias LSN_MACLEAN Version TNSLSNR for Linux: Version 11.2.0.3.0 - Production Start Date 26-NOV-2011 08:33:14 Uptime 1 days 2 hr. 12 min. 11 sec Trace Level off Security ON: Local OS Authentication SNMP OFF Listener Parameter File /g01/11.2.0/grid/network/admin/listener.ora Listener Log File /g01/orabase/diag/tnslsnr/vrh1/lsn_maclean/alert/log.xml Listening Endpoints Summary... (DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=LSN_MACLEAN))) (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.1.161)(PORT=1588))) (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.1.162)(PORT=1588))) Services Summary... Service "VPROD" has 1 instance(s). Instance "VPROD1", status READY, has 1 handler(s) for this service... Service "VPRODXDB" has 1 instance(s). Instance "VPROD1", status READY, has 1 handler(s) for this service... The command completed successfully [grid@vrh1 admin]$ lsnrctl reload LSN_MACLEAN LSNRCTL for Linux: Version 11.2.0.3.0 - Production on 27-NOV-2011 10:45:39 Copyright (c) 1991, 2011, Oracle. All rights reserved. Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=LSN_MACLEAN))) The command completed successfully [grid@vrh1 admin]$ lsnrctl status LSN_MACLEAN LSNRCTL for Linux: Version 11.2.0.3.0 - Production on 27-NOV-2011 10:45:44 Copyright (c) 1991, 2011, Oracle. All rights reserved. Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=LSN_MACLEAN))) STATUS of the LISTENER ------------------------ Alias LSN_MACLEAN Version TNSLSNR for Linux: Version 11.2.0.3.0 - Production Start Date 26-NOV-2011 08:33:14 Uptime 1 days 2 hr. 12 min. 30 sec Trace Level off Security ON: Local OS Authentication SNMP OFF Listener Parameter File /g01/11.2.0/grid/network/admin/listener.ora Listener Log File /g01/orabase/diag/tnslsnr/vrh1/lsn_maclean/alert/log.xml Listening Endpoints Summary... (DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=LSN_MACLEAN))) The listener supports no services The command completed successfully [grid@vrh1 admin]$ srvctl stop listener -l LSN_MACLEAN [grid@vrh1 admin]$ srvctl start listener -l LSN_MACLEAN [grid@vrh1 admin]$ lsnrctl status LSN_MACLEAN LSNRCTL for Linux: Version 11.2.0.3.0 - Production on 27-NOV-2011 10:46:26 Copyright (c) 1991, 2011, Oracle. All rights reserved. Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=LSN_MACLEAN))) STATUS of the LISTENER ------------------------ Alias LSN_MACLEAN Version TNSLSNR for Linux: Version 11.2.0.3.0 - Production Start Date 27-NOV-2011 10:46:22 Uptime 0 days 0 hr. 0 min. 4 sec Trace Level off Security ON: Local OS Authentication SNMP OFF Listener Parameter File /g01/11.2.0/grid/network/admin/listener.ora Listener Log File /g01/orabase/diag/tnslsnr/vrh1/lsn_maclean/alert/log.xml Listening Endpoints Summary... (DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=LSN_MACLEAN))) (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.1.161)(PORT=1588))) (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.1.162)(PORT=1588))) The listener supports no services The command completed successfully

而在11.2 RAC中listener.ora仅记录LISTENER的IPC条目。这样做的目的是方便dbca配置数据库参数及tnsnames.ora配置文件。

了解到以上信息后可能你对当前11.2 RAC中的listener.ora文件中的监听配置信息不再感到奇怪。

我们可以使用netca图形化工具或者srvctl 命令行工具添加监听配置; 如果仅仅是手动在listener.ora中添加记录的话是无法被注册为Cluster Ready Service的服务的,将不会被CRS管理。

方法1:

使用netca和netmgr图形化工具,完成添加监听和静态注册的工作。

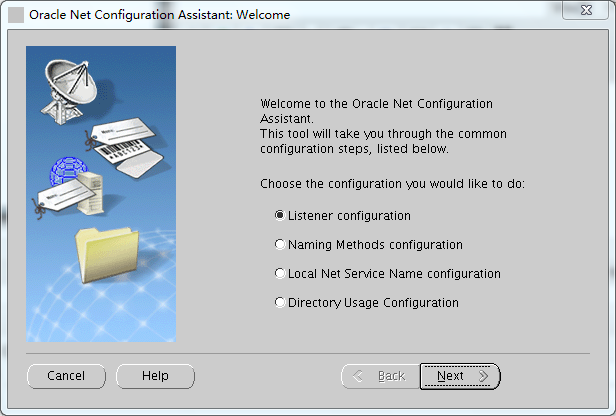

1) 以Grid Infrastructure GI用户登录任意节点,并运行netca启动图形界面:

su - grid (grid)$ export DISPLAY=:0 (grid)$ netca

选择LISTENER Configuration

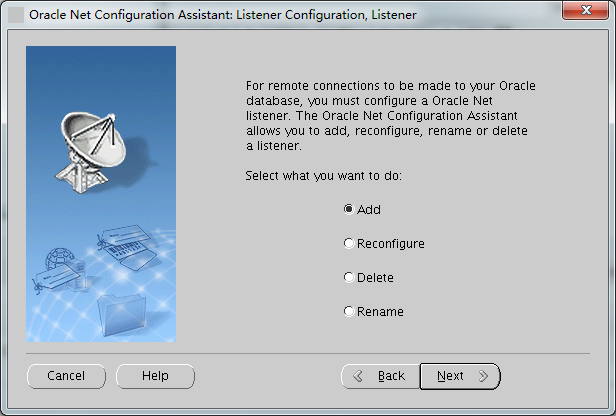

选择ADD

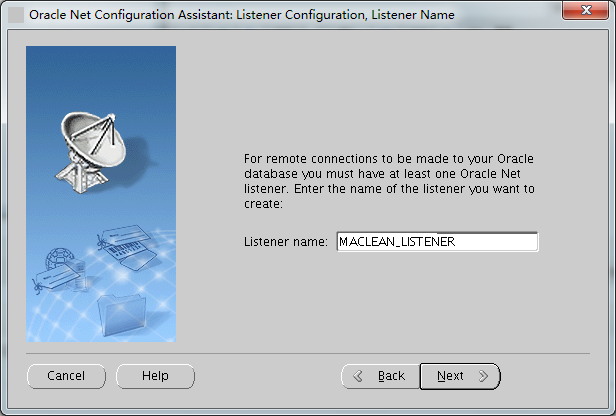

填入监听名字

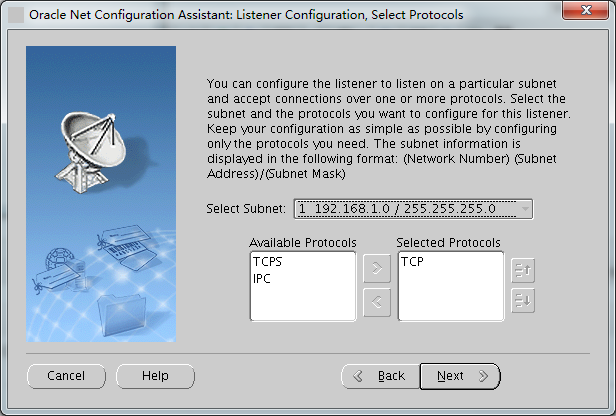

选择subnet和availabe protocol ,一般默认即可,除非你有多个public network网段

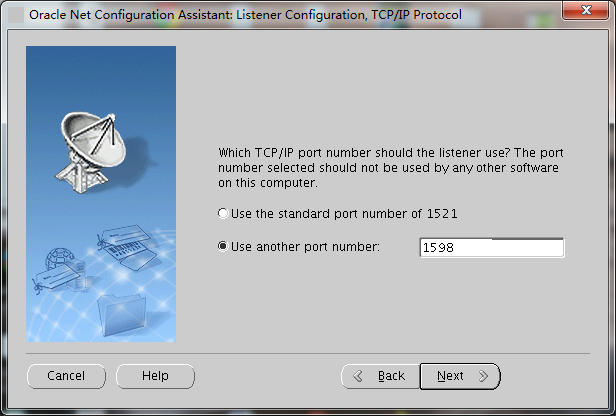

填入端口号

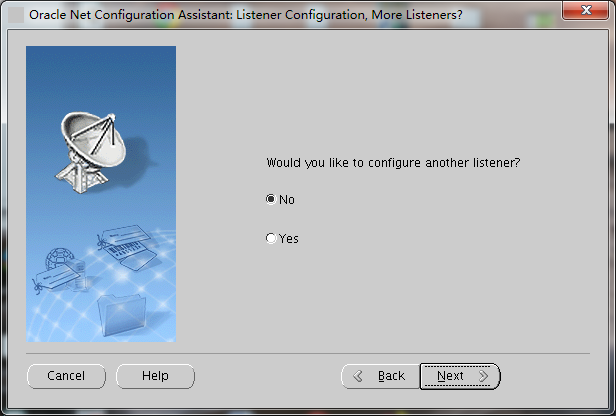

选择NO

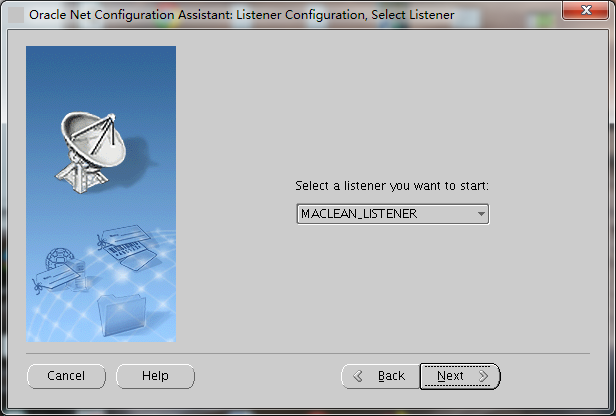

选择要启动的监听名,即方才你创建的监听名

之后选择FINISH退出netca 界面,启动netmgr界面,为监听加入静态注册的信息:

su - grid (grid)$ export DISPLAY=:0 (grid)$ netmgr

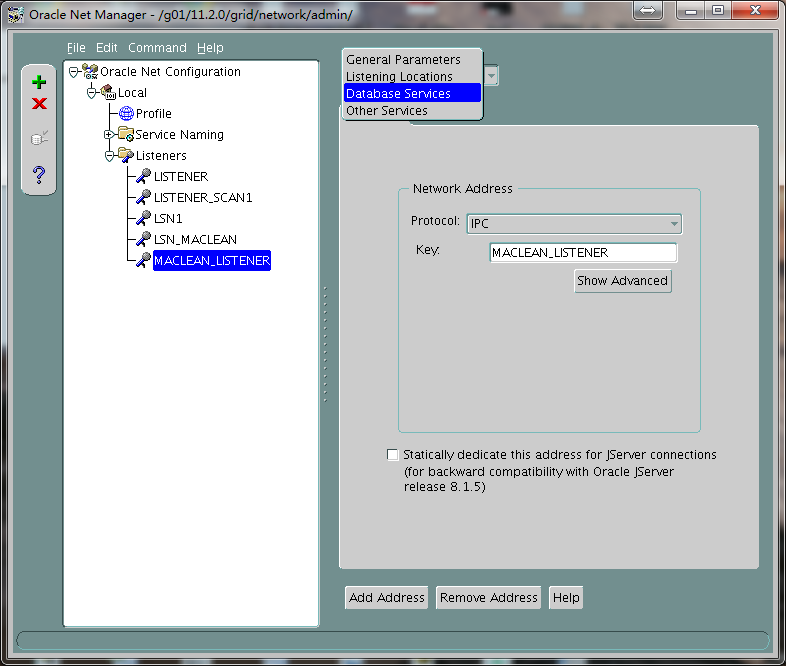

点选方才创建的监听器,选择Database Services菜单

填入Global Database Name和本地实例的SID信息,并确认ORACLE HOME Directory(应是Grid Infrastructure的Home目录)正确后点选Save Network Configuration。

之后使用srvctl 或 crsctl 重启该监听即可生效:

[grid@vrh1 admin]$ crsctl status res ora.MACLEAN_LISTENER.lsnr NAME=ora.MACLEAN_LISTENER.lsnr TYPE=ora.listener.type TARGET=ONLINE , ONLINE STATE=ONLINE on vrh1, ONLINE on vrh2 [grid@vrh1 admin]$ crsctl stop res ora.MACLEAN_LISTENER.lsnr CRS-2673: Attempting to stop 'ora.MACLEAN_LISTENER.lsnr' on 'vrh1' CRS-2673: Attempting to stop 'ora.MACLEAN_LISTENER.lsnr' on 'vrh2' CRS-2677: Stop of 'ora.MACLEAN_LISTENER.lsnr' on 'vrh1' succeeded CRS-2677: Stop of 'ora.MACLEAN_LISTENER.lsnr' on 'vrh2' succeeded [grid@vrh1 admin]$ crsctl start res ora.MACLEAN_LISTENER.lsnr CRS-2672: Attempting to start 'ora.MACLEAN_LISTENER.lsnr' on 'vrh2' CRS-2672: Attempting to start 'ora.MACLEAN_LISTENER.lsnr' on 'vrh1' CRS-2676: Start of 'ora.MACLEAN_LISTENER.lsnr' on 'vrh1' succeeded CRS-2676: Start of 'ora.MACLEAN_LISTENER.lsnr' on 'vrh2' succeeded [grid@vrh1 admin]$ lsnrctl status MACLEAN_LISTENER LSNRCTL for Linux: Version 11.2.0.3.0 - Production on 27-NOV-2011 11:00:42 Copyright (c) 1991, 2011, Oracle. All rights reserved. Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=MACLEAN_LISTENER))) STATUS of the LISTENER ------------------------ Alias MACLEAN_LISTENER Version TNSLSNR for Linux: Version 11.2.0.3.0 - Production Start Date 27-NOV-2011 11:00:11 Uptime 0 days 0 hr. 0 min. 31 sec Trace Level off Security ON: Local OS Authentication SNMP OFF Listener Parameter File /g01/11.2.0/grid/network/admin/listener.ora Listener Log File /g01/orabase/diag/tnslsnr/vrh1/maclean_listener/alert/log.xml Listening Endpoints Summary... (DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=MACLEAN_LISTENER))) (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.1.161)(PORT=1598))) (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.1.162)(PORT=1598))) Services Summary... Service "VPROD" has 1 instance(s). Instance "VPROD1", status UNKNOWN, has 1 handler(s) for this service... The command completed successfully [grid@vrh1 admin]$ srvctl stop listener -l MACLEAN_LISTENER [grid@vrh1 admin]$ srvctl start listener -l MACLEAN_LISTENER [grid@vrh1 admin]$ srvctl config listener -l MACLEAN_LISTENER Name: MACLEAN_LISTENER Network: 1, Owner: grid Home: End points: TCP:1598 [grid@vrh1 admin]$ lsnrctl status MACLEAN_LISTENER LSNRCTL for Linux: Version 11.2.0.3.0 - Production on 27-NOV-2011 11:01:42 Copyright (c) 1991, 2011, Oracle. All rights reserved. Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=MACLEAN_LISTENER))) STATUS of the LISTENER ------------------------ Alias MACLEAN_LISTENER Version TNSLSNR for Linux: Version 11.2.0.3.0 - Production Start Date 27-NOV-2011 11:01:10 Uptime 0 days 0 hr. 0 min. 31 sec Trace Level off Security ON: Local OS Authentication SNMP OFF Listener Parameter File /g01/11.2.0/grid/network/admin/listener.ora Listener Log File /g01/orabase/diag/tnslsnr/vrh1/maclean_listener/alert/log.xml Listening Endpoints Summary... (DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=MACLEAN_LISTENER))) (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.1.161)(PORT=1598))) (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.1.162)(PORT=1598))) Services Summary... Service "VPROD" has 1 instance(s). Instance "VPROD1", status UNKNOWN, has 1 handler(s) for this service... The command completed successfully

以上使用netca和netmgr图形界面工具完成了新监听的添加和静态注册工作。

2. 使用srvctl 工具添加监听并手动加入静态注册信息

检查默认network的network number,红色的数字

[grid@vrh1 admin]$ srvctl config network

Network exists: 1/192.168.1.0/255.255.255.0/eth0, type static

srvctl 添加监听的语法如下

[grid@vrh1 admin]$ srvctl add listener -h

Adds a listener configuration to the Oracle Clusterware.

Usage: srvctl add listener [-l <lsnr_name>] [-s] [-p "[TCP:]<port>[, ...][/IPC:<key>]

[/NMP:<pipe_name>][/TCPS:<s_port>] [/SDP:<port>]"] [-o <oracle_home>] [-k <net_num>]

-l <lsnr_name> Listener name (default name is LISTENER)

-o <oracle_home> ORACLE_HOME path (default value is CRS_HOME)

-k <net_num> network number (default number is 1)

-s Skip the checking of ports

-p "[TCP:]<port>[, ...][/IPC:<key>][/NMP:<pipe_name>][/TCPS:<s_port>] [/SDP:<port>]"

Comma separated tcp ports or listener endpoints

-h Print usage

[grid@vrh1 admin]$ srvctl add listener -l NEW_MACLEAN_LISTENER -o $CRS_HOME -p 1601 -k 1

-k 填入方才获得的network number,-p填入端口号,-l填入监听名,-o 填入GI HOME路径

[grid@vrh1 admin]$ srvctl start listener -l NEW_MACLEAN_LISTENER

srvctl start listener启动新添加的监听后listener.ora和endpoints_listener.ora会出现新的记录:

[grid@vrh1 admin]$ head -1 listener.ora NEW_MACLEAN_LISTENER=(DESCRIPTION=(ADDRESS_LIST=(ADDRESS=(PROTOCOL=IPC)(KEY=NEW_MACLEAN_LISTENER)))) # line added by Agent [grid@vrh1 admin]$ head -1 endpoints_listener.ora NEW_MACLEAN_LISTENER_VRH1=(DESCRIPTION=(ADDRESS_LIST=(ADDRESS=(PROTOCOL=TCP)(HOST=vrh1-vip)(PORT=1601)) (ADDRESS=(PROTOCOL=TCP)(HOST=192.168.1.161)(PORT=1601)(IP=FIRST)))) # line added by Agent

以上已经完成了监听的添加,足见使用srvctl管理更为简便。

之后仅需要加入静态注册信息即可,如:

SID_LIST_NEW_MACLEAN_LISTENER =

(SID_LIST =

(SID_DESC =

(GLOBAL_DBNAME = VPROD)

(ORACLE_HOME = /g01/11.2.0/grid)

(SID_NAME = VPROD1)

)

)

加入如上信息到listener.ora配置文件中(SID_LIST_($LISTENER_NAME),并重启监听即完成静态注册:

[grid@vrh1 admin]$ srvctl stop listener -l NEW_MACLEAN_LISTENER [grid@vrh1 admin]$ srvctl start listener -l NEW_MACLEAN_LISTENER [grid@vrh1 admin]$ lsnrctl status NEW_MACLEAN_LISTENER LSNRCTL for Linux: Version 11.2.0.3.0 - Production on 27-NOV-2011 11:21:37 Copyright (c) 1991, 2011, Oracle. All rights reserved. Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=NEW_MACLEAN_LISTENER))) STATUS of the LISTENER ------------------------ Alias NEW_MACLEAN_LISTENER Version TNSLSNR for Linux: Version 11.2.0.3.0 - Production Start Date 27-NOV-2011 11:21:25 Uptime 0 days 0 hr. 0 min. 11 sec Trace Level off Security ON: Local OS Authentication SNMP OFF Listener Parameter File /g01/11.2.0/grid/network/admin/listener.ora Listener Log File /g01/11.2.0/grid/log/diag/tnslsnr/vrh1/new_maclean_listener/alert/log.xml Listening Endpoints Summary... (DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=NEW_MACLEAN_LISTENER))) (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.1.161)(PORT=1601))) (DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.1.162)(PORT=1601))) Services Summary... Service "VPROD" has 1 instance(s). Instance "VPROD1", status UNKNOWN, has 1 handler(s) for this service... The command completed successfully

以上利用srvctl管理工具完成了添加新监听和静态注册的任务。