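This document describes how to change the time zone setting of 11.2 Grid Infrastructure (GI) after its installation has completed.

Once the OS default time zone has been changed, make sure the following two points hold:

1. For 11.2.0.1, make sure the shell environment variable TZ is set correctly for the root, grid, and oracle users.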

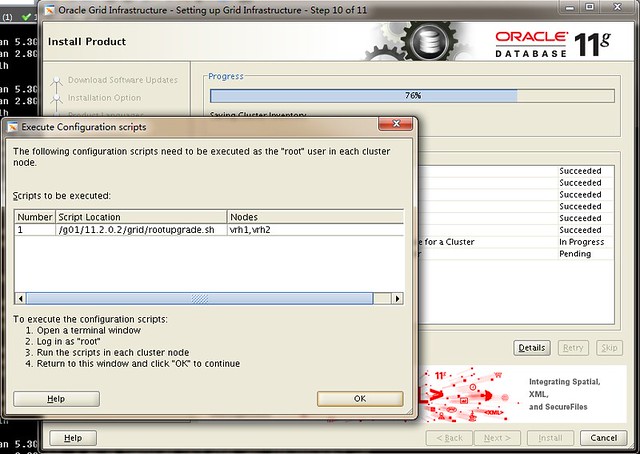

2. For 11.2.0.2 and later, confirm that the TZ parameter in $GRID_HOME/crs/install/s_crsconfig_<nodename>_env.txt is set to the correct time zone.

For example:

    echo $TZ
    TZ=US/Pacific
    grep TZ s_crsconfig_<nodename>_env.txt
    TZ=US/Pacific
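The manual comparison above can be scripted. The following is a minimal sketch, not an Oracle-supplied tool: the function names `tz_from_env_file` and `check_tz_matches` are hypothetical, and it assumes the file contains a line of the form `TZ=<zone>`. The comparison is exact, so stray trailing characters in the file show up as a mismatch.

```shell
#!/bin/sh
# Hypothetical helpers (not part of Grid Infrastructure).

# Print the TZ value stored in an s_crsconfig_<nodename>_env.txt style file.
# Assumes the file holds a line of the form TZ=<zone>; only the first is used.
tz_from_env_file() {
    sed -n 's/^TZ=//p' "$1" | head -n 1
}

# Succeed only if the TZ value in the file ($1) equals the expected zone ($2)
# exactly, so extra characters such as trailing blanks count as a mismatch.
check_tz_matches() {
    file_tz=$(tz_from_env_file "$1")
    if [ "$file_tz" = "$2" ]; then
        echo "OK: TZ=$file_tz"
    else
        echo "MISMATCH: file has '$file_tz', expected '$2'"
        return 1
    fi
}
```

A possible invocation, with the node name filled in by hand, is `check_tz_matches "$GRID_HOME/crs/install/s_crsconfig_<nodename>_env.txt" US/Pacific`.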

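If the time zone is set incorrectly, or the value contains extraneous characters, RAC Grid Infrastructure may fail to start.

Checking the two points above ensures that GI can start normally. Startup failures caused by an incorrect time zone typically occur when the time zone is changed last, after both the OS and GI have already been installed. If the OS time does not match the timestamps of the most recent entries in logs such as ohasd.log and ocssd.log, this time zone problem is usually the cause.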
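The comparison between OS time and the latest log entry can be approximated with a short script. This is a rough sketch under stated assumptions: log lines are assumed to begin with a `YYYY-MM-DD HH:MM:SS` timestamp, GNU `date -d` is assumed to be available (as on Linux), and the function names are hypothetical. A difference close to a whole number of hours is a hint, not proof, of a time zone mismatch.

```shell
#!/bin/sh
# Hypothetical helpers; assume GNU date and log lines that start with
# a "YYYY-MM-DD HH:MM:SS" timestamp.

# Print the epoch seconds of the last timestamped line in the log file ($1).
# The timestamp is interpreted in the time zone of the calling shell.
last_log_epoch() {
    ts=$(sed -n 's/^\([0-9]\{4\}-[0-9]\{2\}-[0-9]\{2\} [0-9]\{2\}:[0-9]\{2\}:[0-9]\{2\}\).*/\1/p' "$1" | tail -n 1)
    [ -n "$ts" ] || return 1
    date -d "$ts" +%s
}

# Print (now - last log entry) in seconds.
# $2 is an optional "now" in epoch seconds; it defaults to the current time.
log_skew_seconds() {
    log_epoch=$(last_log_epoch "$1") || return 1
    now_epoch=${2:-$(date +%s)}
    echo $(( now_epoch - log_epoch ))
}
```

For example, running `log_skew_seconds` against ohasd.log while GI is actively writing to it should print a small number; a value near a multiple of 3600 suggests the log is being written in a different time zone than the shell's.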

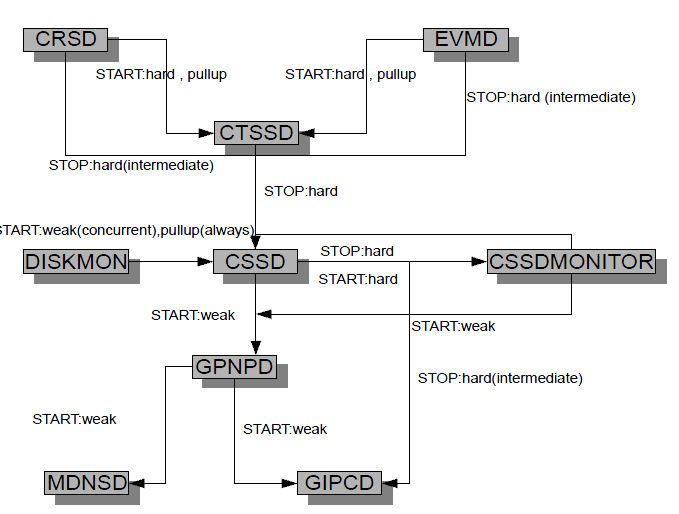

Before 11.2 CRS, the time zone setting could be confirmed with init.cssd diag.

The default time zone configuration on each OS is as follows:

Linux

- To change: /usr/sbin/timeconfig
- To display the current setting: cat /etc/sysconfig/clock

      ZONE="America/Los_Angeles"
      UTC=true
      ARC=false

- To find all valid settings: ls -l /usr/share/zoneinfo. Anything that appears in this directory is valid to use, for example CST6CDT and America/Chicago.
- Note: on OL6.3/RHEL6.3 the ZONE field in /etc/sysconfig/clock can differ from what is in /usr/share/zoneinfo. The value from /usr/share/zoneinfo is the one to use in $GRID_HOME/crs/install/s_crsconfig_<nodename>_env.txt.

HP-UX

- To display the current setting: cat /etc/default/tz

      PST8PDT

- To change: set_parms timezone
- To find all valid settings: ls -l /usr/lib/tztab

Solaris

- To display the current setting: grep TZ /etc/TIMEZONE

      TZ=US/Pacific

- To change: modify TIMEZONE, then also run "rtc -z US/Pacific; rtc -c"
- To find all valid settings: ls -l /usr/share/lib/zoneinfo

AIX

- To display the current setting: grep TZ /etc/environment

      TZ=GMT
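For the Linux case, the rule that anything under /usr/share/zoneinfo is a valid setting can be checked before a value is written into the GI configuration. The sketch below is illustrative only: `is_valid_zone` is a hypothetical name, the allowed character set is an assumption based on common zoneinfo names, and the optional second argument exists so that other directories (such as the Solaris path) can be passed in.

```shell
#!/bin/sh
# Hypothetical helper: succeed if $1 looks like a clean zone name and a
# matching regular file exists under the zoneinfo directory.
# $2 is optional and defaults to the Linux location named in this document.
is_valid_zone() {
    zone=$1
    dir=${2:-/usr/share/zoneinfo}
    case $zone in
        # Reject empty names, characters outside the assumed set (this also
        # catches blanks and other stray characters), absolute paths, and "..".
        ''|*[!A-Za-z0-9_/+-]*|/*|*..*) return 1 ;;
    esac
    # Directories such as "America" are not zones; require a regular file.
    [ -f "$dir/$zone" ]
}
```

Usage might look like `is_valid_zone "America/Chicago" && echo valid`, which relies on the exit status rather than on any printed output.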