这个问题发生在今年的1月,用户以操作系统认证形式登陆RAC中的主用实例时发现登陆挂起,但不出现错误。之后应用人员陆续手动杀死服务进程,杀死进程后发现实例可以登录了,应用人员在没有做任何信息转储的情况下重启了数据库,这就造成了我们后期诊断时缺乏必要的信息,也是这个case变成悬案的主要原因。

在实例hang住的一个半小时中告警日志没有任何信息;仅有的有用信息是该实例中diag,pmon,lmd后台进程的trace文件。以下为trace文件:

lmd0 trace:

*** 2010-01-16 11:02:58.106

DUMP LOCAL BLOCKER/HOLDER: block level 5 res [0x10b0005][0xeb5],[TX]

----------resource 0x70000044a76e1a0----------------------

resname : [0x10b0005][0xeb5],[TX]

Local node : 1

dir_node : 1

master_node : 1

hv idx : 25

hv last r.inc : 0

current inc : 20

hv status : 0

hv master : 0

open options : dd

grant_bits : KJUSERNL KJUSEREX

grant mode : KJUSERNL KJUSERCR KJUSERCW KJUSERPR KJUSERPW KJUSEREX

count : 1 0 0 0 0 1

val_state : KJUSERVS_NOVALUE

valblk : 0x00000000000000000000000000000000 .

access_node : 1

vbreq_state : 0

state : x0

resp : 70000044a76e1a0

On Scan_q? : N

Total accesses: 15061

Imm. accesses: 12545

Granted_locks : 1

Cvting_locks : 1

value_block: 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

GRANTED_Q :

lp 700000488546990 gl KJUSEREX rp 70000044a76e1a0 [0x10b0005][0xeb5],[TX]

master 1 gl owner 70000048dd08220 possible pid 2928930 xid 2033-0333-0026D22C bast 0 rseq 215 mseq 0 history 0x14951495

open opt KJUSERDEADLOCK

CONVERT_Q:

lp 700000488546ae0 gl KJUSERNL rl KJUSEREX rp 70000044a76e1a0 [0x10b0005][0xeb5],[TX]

master 1 gl owner 70000048ee0ed48 possible pid 2040272 xid 2006-0066-0006F888 bast 0 rseq 215 mseq 0 history 0x1495149a

convert opt KJUSERGETVALUE KJUSERTRCCANCEL

----------enqueue 0x700000488546990------------------------

lock version : 2242641

Owner node : 1

grant_level : KJUSEREX

req_level : KJUSEREX

bast_level : KJUSERNL

notify_func : 0

resp : 70000044a76e1a0

procp : 70000048928b8f0

pid : 2040272

proc version : 40135

oprocp : 0

opid : 0

group lock owner : 70000048dd08220

possible pid : 2928930

xid : 2033-0333-0026D22C

dd_time : 0.0 secs

dd_count : 0

timeout : 0.0 secs

On_timer_q? : N

On_dd_q? : N

lock_state : GRANTED

Open Options : KJUSERDEADLOCK

Convert options : KJUSERNOQUEUE

History : 0x14951495

Msg_Seq : 0x0

res_seq : 215

valblk : 0x00000000000000000000000000000000 .

DUMP LOCAL BLOCKER: initiate state dump for TIMEOUT

possible owner[819.2928930] on resource TX-010B0005-00000EB5

Submitting asynchronized dump request [28]

pmon trace:

*** SESSION ID:(3384.1) 2010-01-16 11:00:11.349

[claim lock for dead process][lp 0x7000004870f7078][p 0x7000004891a79e0.2928930][hist x49514951]

[claim lock for dead process][lp 0x700000488546990][p 0x70000048928b8f0.0][hist x49514951] <<<< note:this message@2010-01-16 12:35

pmon trace:

*** 2010-01-16 11:02:58.244

Dump requested by process [orapid=6]

REQUEST:custom dump [2] with parameters [6][819][2][2]

. process info of pid[819.2928930] requested by pid[6.3621092]

Dumping process 819.2928930 info:

*** 2010-01-16 11:02:58.244

Dumping diagnostic information for ospid 2928930:

OS pid = 2928930

loadavg : 10.83 10.96 10.91

swap info: free_mem = 21646.73M rsv = 128.00M

alloc = 235.52M avail = 32768.00M swap_free = 32532.48M

F S UID PID PPID C PRI NI ADDR SZ WCHAN STIME TTY TIME CMD

240001 A orauser 2928930 1 120 120 20 6da7bd510 174068 10:35:28 - 1:50 oracleCRMDB22 (LOCAL=NO)

open: The file access permissions do not allow the specified action.

procstack: write(/proc/2928930/ctl): The requested resource is busy.

2928930: oracleCRMDB22 (LOCAL=NO)

lmd0进程的trace文件显示该进程发现了本地存在阻塞超时的问题,[0x10b0005][0xeb5],[TX]资源的拥有者是2928930进程,而pmon进程认为2928930进程可能出于僵死状态,故希望RAC中的diag进程能对该进程做进一步的诊断,diag进程受到pmon的邀请”Dump requested by process [orapid=6]”,尝试”Dumping process 819.2928930 info:”,并利用AIX上的procstack调试工具分析此进程当时的调用栈,但出现了”procstack: write(/proc/2928930/ctl): The requested resource is busy”的错误,在网上搜索该记录的相关信息发现可能是AIX上procstack工具的一个Bug,而跟实例挂起问题关系不大:

Problem summary

****************************************************************

* USERS AFFECTED:

* Users of the procstac command on the 5300-09 Technology Level

* with the bos.perf.proctools fileset at the 5.3.9.0 and 5.3.9.1

* levels.

****************************************************************

* PROBLEM DESCRIPTION:

* The procstac command fails with an error similar to:

*

* open: Permission denied

* procstack: write(/proc/2068724/ctl): Device busy

****************************************************************

* RECOMMENDATION:

* Install APAR IZ47903.

****************************************************************

Problem conclusion

*Modified the code to fix this problelm.

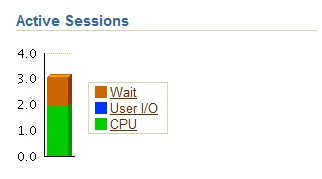

diag进程的跟踪文件还显示2928930进程持有redo copy latch;这个case发生后的一个礼拜恰好有Oracle原厂工程师到该客户单位做巡检,原厂工程师查看了实例挂起期间的ASH报告,发现该时段内实例中redo相关的等待频繁发生,基本可以认定是僵死进程持有”redo copy latch”造成了RAC中单实例hang住。

原厂工程师的结论令客户比较满意,这个case基本可以结束了。但我对这个结论并不完全认同,2928930进程持有的redo copy latch是一个子栓,该类子栓在实例上的总数取决于CPU总数,一般来说等于CPU * 2;该实例所在服务器为IBM的p595配有32个cpu,redo copy latch共有64个,单单一个子栓被hold住会造成整个实例都hang住,且登录挂起吗?

SQL> show parameter cpu_count

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

cpu_count integer 32

SQL> select count(*) from v$latch_children where name='redo copy';

COUNT(*)

----------

64

/*我们在非生产环境测试一下*/

select addr "十六进制地址",

to_number(addr, 'xxxxxxxxxxxx') "十进制地址",

gets,

misses,

immediate_gets,

immediate_misses

from v$latch_children

where name = 'redo copy';

十六进制 十进制地址 GETS MISSES IMMEDIATE_GETS IMMEDIATE_MISSES

-------- ---------- ---------- ---------- -------------- ----------------

2DAB5898 766204056 7 0 0 0

2DAB5818 766203928 5 0 0 0

2DAB5798 766203800 5 0 0 0

2DAB5718 766203672 5 0 0 0

2DAB5698 766203544 5 0 0 0

2DAB5618 766203416 5 0 0 0

2DAB5598 766203288 5 0 511 0

2DAB5518 766203160 5 0 297024 347

8 rows selected.

/*正式测试前的redo copy child latch统计信息,我们还要用到这里的十进制latch地址*/

/*我们尝试手动hold住一个redo copy child latch,并在其他会话中执行一些产生redo的操作*/

session A:

SQL> oradebug setmypid;

Statement processed.

SQL> oradebug call kslgetl 766203160 1;

Function returned 1

/* kslgetl是Oracle内部用get latch的函数,oradebug 可以调用该函数*/

session B:

SQL> select * From v$latchholder;

PID SID LADDR NAME GETS

---------- ---------- -------- ---------------------------------------------------------------- ----------

22 136 2DAB5518 redo copy 297443

另外开三个session,执行一下批量插入数据的匿名块,测试redo是否能够正常产生:

begin

for i in 1 .. 90000 loop

insert into tv values (i, i, i);

commit;

end loop;

end;

/

PL/SQL procedure successfully completed.

session A:

SQL> oradebug call kslfre 766203160 1;

Function returned 2FD548E0

/*手动释放766203160对应的redo copy 子闩*/

select addr "十六进制地址",

to_number(addr, 'xxxxxxxxxxxx') "十进制地址",

gets,

misses,

immediate_gets,

immediate_misses

from v$latch_children

where name = 'redo copy';

十六进制 十进制地址 GETS MISSES IMMEDIATE_GETS IMMEDIATE_MISSES

-------- ---------- ---------- ---------- -------------- ----------------

2DAB5898 766204056 7 0 0 0

2DAB5818 766203928 5 0 0 0

2DAB5798 766203800 5 0 0 0

2DAB5718 766203672 5 0 745 0

2DAB5698 766203544 5 0 122370 5

2DAB5618 766203416 5 0 176712 5

2DAB5598 766203288 5 0 144441 6

2DAB5518 766203160 6 0 297443 373

/*可以看到虽然8个子闩中一个被手动hold住,但对实例的影响远不足以到达hang所需要的条件*/

虽然能证明单个redo copy latch被僵死进程长期持有和实例hang住没有必然的因果联系,但因为缺少相关的systemstate和hanganalyze转储信息,我们也无法进一步做出推断。

这个case发生后的几个月,我无意中在MOS上发现了以下Bug note:

Diagnostic collection may hang or crash the instance

DescriptionSometimes while the DIAG process is collecting diagnostics

the database may hang or crash.

This is more likely to occur in a RAC or ASM environment .You may be seeing this problem if the database crashes or hangs just

after you see the following text in a trace file:

“Dumping diagnostic information for X”

where X is the process that hung or terminated.This fix replaces invokations of a port specific OS debugger

(like gdb) with Oracle ORADEBUG calls to gather the required

diagnostics.Note:

For HP Itanium fixes for this issue in 10.2.0.3/10.2.0.4 use bug 8767023

instead of this bug#.For AIX fixes for this issue in 10.2.0.3/10.2.0.4 use bug 8929701

instead of this bug#.Workaround

>=10.2.0.3 only: Set init.ora parameter “_ksb_disable_diagpid” = TRUE

but this will disable automatic diagnostics collection.

当diag诊断进程调用系统debugger工具调试问题进程时可能出现实例hang或crash的,且该问题多发于RAC或ASM环境中,workaroud的方法是动态设置_ksb_disable_diagpid为TRUE,可以避免diag诊断进程对问题进程的调试,但这些调试信息往往对我们又是有用的,这倒有些《种树郭橐驼说》中”既然已,勿动勿虑,去不复顾”的味道。

好了这个问题,仍旧pending着,是个悬案。