今天早上应用人员反映一个原本在9i上可以顺利完成的CTAS脚本,迁移到10g后运行总是报“ORA-1652: unable to extend temp segment by 128 in tablespace TS_HQY1_TEMP “无法扩展临时表空间的错误。应用人员表示该脚本涉及的数据量在迁移前后变化不大,而且令人匪夷所思的是在新的10g库上临时表空间大小已达40多个G,要远大于原9i库。很显然这不是由于临时表空间过小导致的该问题,更多的原因肯定是出在迁移后Oracle不同的行为方式上。

该脚本每月执行一次用以汇总数据,其中一个单表接近4亿行记录,GROUP BY操作涉及到的数据量十分庞大。我们来具体看一下这个SQL:

create table gprs_bill.zou_201007_cell_id as

select /* g_all_cdr01,60 */

calling_num mobile_number,

lac,

lpad(cell_id, 5, '0') cell_id,

count(*) c,

sum(call_duration) call_duration,

sum(decode(record_type, '00', 1, 0) * call_duration) moc_call_duration,

sum(decode(record_type, '01', 1, 0) * call_duration) mtc_call_duarion

from gprs_bill.g_all_cdr01

where substr(calling_num, 1, 7) in

(select mobile_prefix from gprs_bill.zou_mobile_prefix)

group by calling_num, lac, lpad(cell_id, 5, '0');

SQL> set autotrace traceonly exp

SQL> select /* g_all_cdr01,60 */

2 calling_num mobile_number,

3 lac,

4 lpad(cell_id,5,'0') cell_id,

5 count(*) c,

6 sum(call_duration) call_duration,

7 sum(decode(record_type,'00',1,0)*call_duration) moc_call_duration,

8 sum(decode(record_type,'01',1,0)*call_duration) mtc_call_duarion

9 from gprs_bill.g_all_cdr01

10 where substr(calling_num,1,7) in (select mobile_prefix from gprs_bill.zou_mobile_prefix)

11 group by

12 calling_num ,

13 lac,

14 lpad(cell_id,5,'0');

Execution Plan

----------------------------------------------------------

Plan hash value: 212866585

--------------------------------------------------------------------------------

-------------------

| Id | Operation | Name | Rows | Bytes |TempSpc| Cost

(%CPU)| Time |

--------------------------------------------------------------------------------

-------------------

| 0 | SELECT STATEMENT | | 229K| 9880K| | 103

3K (3)| 03:26:41 |

| 1 | HASH GROUP BY | | 229K| 9880K| 22M| 103

3K (3)| 03:26:41 |

|* 2 | HASH JOIN RIGHT SEMI| | 229K| 9880K| | 103

0K (3)| 03:26:10 |

| 3 | TABLE ACCESS FULL | ZOU_MOBILE_PREFIX | 1692 | 13536 | | 1

1 (0)| 00:00:01 |

| 4 | TABLE ACCESS FULL | G_ALL_CDR01 | 388M| 13G| | 102

6K (2)| 03:25:21 |

--------------------------------------------------------------------------------

-------------------

Predicate Information (identified by operation id):

---------------------------------------------------

2 - access("MOBILE_PREFIX"=SUBSTR("CALLING_NUM",1,7))

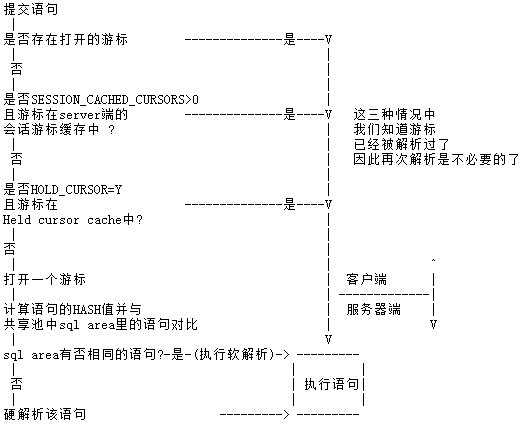

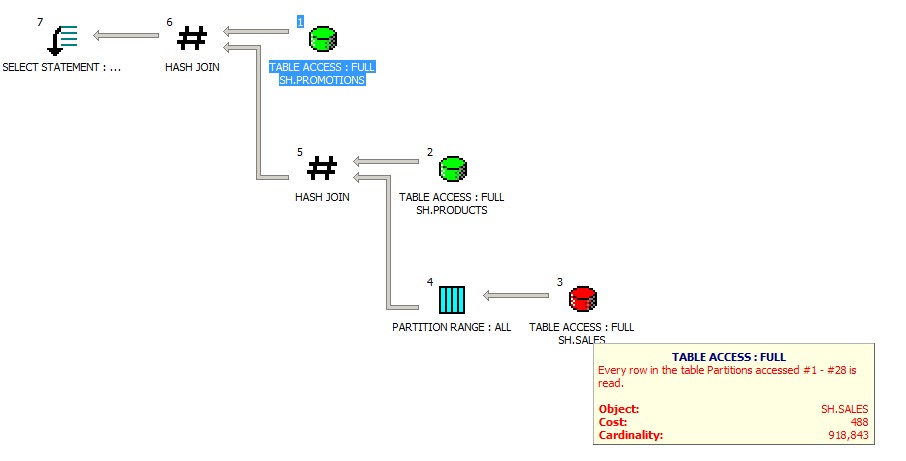

可以看到Oracle使用了HASH GROUP BY 算法以实现数据分组;HASH算法是10g中新引入的分组算法。

下面我们来详细介绍下10g中数据分组的改动:

在10g中GROUP BY操作仍将引发排序操作,但10g中引入了新的算法,这些算法都不保证返回的数据行有序排列;在10g中如果想保证”GROUP BY”后返回的数据有序排列则需要强制使用”ORDER BY”子句,这点和9i是截然不同的。若你没有指定”ORDER BY”子句,则不能保证返回的结果正确排序。

在10g中”GROUP BY”子句更倾向于使用一种HASH算法而非原先的SORT算法来分组数据,HASH算法的CPU COST要低于原先的SORT算法。但这2种算法在10g中都不保证返回数据正常排序,当采用SORT算法时可能”碰巧”出现返回正常排序数据的状况。

MOS建议,如果迁移中出现大量不利的变化,则可以通过修改参数来确保沿用原先的算法。但需要注意的是,即便采用了以下参数仍不能保证10g后”GROUP BY”后返回的数据如9i中那样排序,你需要做的是加入显式的”ORDER BY”子句以保证Oracle为你做到这一点。

alter session set "_gby_hash_aggregation_enabled" = false; alter session set optimizer_features_enable="9.2.0"; 或者 alter session set optimizer_features_enable="8.1.7";

其中_gby_hash_aggregation_enabled隐式参数决定了Oracle是否可以启用新的HASH算法来进行数据分组(也适用于distinct等操作)。

对于以上说法我们通过实验进一步验证:

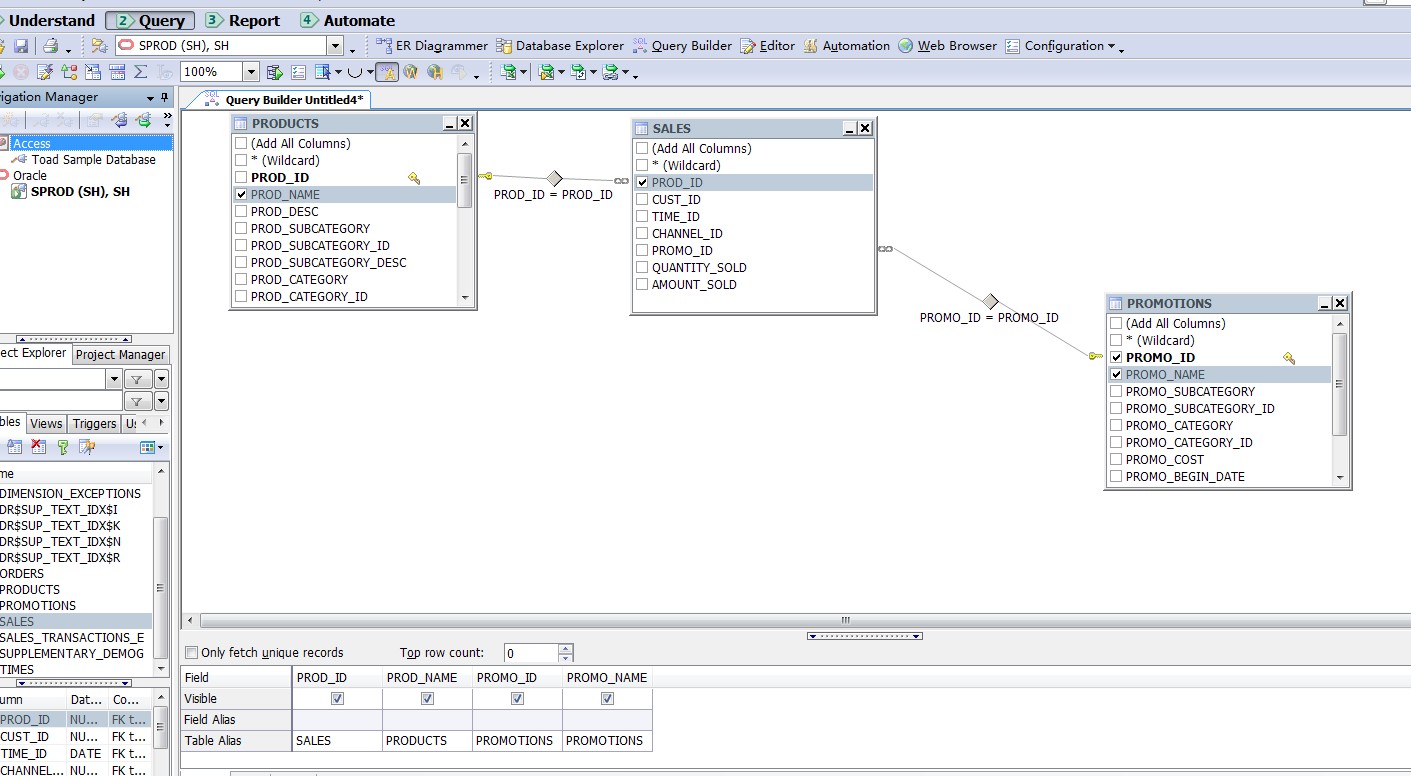

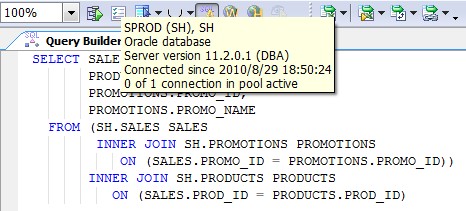

在11g中的测试如下:

SQL> select * from v$version;

BANNER

----------------------------------------------------------------------

Oracle Database 11g Enterprise Edition Release 11.2.0.1.0 - Production

PL/SQL Release 11.2.0.1.0 - Production

CORE 11.2.0.1.0 Production

TNS for 32-bit Windows: Version 11.2.0.1.0 - Production

NLSRTL Version 11.2.0.1.0 - Production

SQL> select * from youyus;

T1 T2

---------- ----------

A 10

B 10

F 30

G 30

H 40

I 40

J 40

L 20

M 20

已选择9行。

SQL> analyze table youyus compute statistics for all columns;

表已分析。

SQL> set autotrace on;

SQL> select t2,count(*) from youyus group by t2;

T2 COUNT(*)

---------- ----------

30 2

20 2

40 3

10 2

执行计划

----------------------------------------------------------

Plan hash value: 2940504347

-----------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

-----------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 4 | 8 | 3 (34)| 00:00:01 |

| 1 | HASH GROUP BY | | 4 | 8 | 3 (34)| 00:00:01 |

| 2 | TABLE ACCESS FULL| YOUYUS | 9 | 18 | 2 (0)| 00:00:01 |

-----------------------------------------------------------------------------

/*可以看到使用了hash算法,且返回结果未按t2列大小顺序排列*/

SQL> select t2,count(*) from youyus group by t2 order by t2;

T2 COUNT(*)

---------- ----------

10 2

20 2

30 2

40 3

执行计划

----------------------------------------------------------

Plan hash value: 1349668650

-----------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

-----------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 4 | 8 | 3 (34)| 00:00:01 |

| 1 | SORT GROUP BY | | 4 | 8 | 3 (34)| 00:00:01 |

| 2 | TABLE ACCESS FULL| YOUYUS | 9 | 18 | 2 (0)| 00:00:01 |

-----------------------------------------------------------------------------

/*加入order by子句后,又变回了SORT算法,而且正常排序*/

SQL> alter session set "_gby_hash_aggregation_enabled" = false;

会话已更改。

SQL> alter session set optimizer_features_enable="9.2.0";

会话已更改。

SQL> select t2,count(*) from youyus group by t2;

T2 COUNT(*)

---------- ----------

10 2

20 2

30 2

40 3

执行计划

----------------------------------------------------------

Plan hash value: 1349668650

-------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost |

-------------------------------------------------------------

| 0 | SELECT STATEMENT | | 4 | 8 | 11 |

| 1 | SORT GROUP BY | | 4 | 8 | 11 |

| 2 | TABLE ACCESS FULL| YOUYUS | 9 | 18 | 2 |

-------------------------------------------------------------

Note

-----

- cpu costing is off (consider enabling it)

/*optimizer_features_enable设置为9.2.0后cpu cost被off了;返回数据正确排序,但我们要记住这是"侥幸"*/

SQL> alter session set optimizer_features_enable="10.2.0.5";

会话已更改。

SQL> select t2,count(*) from youyus group by t2;

T2 COUNT(*)

---------- ----------

10 2

20 2

30 2

40 3

执行计划

----------------------------------------------------------

Plan hash value: 1349668650

-----------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

-----------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 4 | 8 | 3 (34)| 00:00:01 |

| 1 | SORT GROUP BY | | 4 | 8 | 3 (34)| 00:00:01 |

| 2 | TABLE ACCESS FULL| YOUYUS | 9 | 18 | 2 (0)| 00:00:01 |

-----------------------------------------------------------------------------

/*optimizer_features_enable设为10.2.0.5 一切正常*/

SQL> alter session set optimizer_features_enable="11.2.0.1";

会话已更改。

SQL> select t2,count(*) from youyus group by t2;

T2 COUNT(*)

---------- ----------

10 2

20 2

30 2

40 3

执行计划

----------------------------------------------------------

Plan hash value: 1349668650

-----------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

-----------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 4 | 8 | 3 (34)| 00:00:01 |

| 1 | SORT GROUP BY | | 4 | 8 | 3 (34)| 00:00:01 |

| 2 | TABLE ACCESS FULL| YOUYUS | 9 | 18 | 2 (0)| 00:00:01 |

-----------------------------------------------------------------------------

/*11.2.0.1中没有变化*/

SQL> alter session set optimizer_features_enable="8.1.7";

会话已更改。

SQL> alter session set "_gby_hash_aggregation_enabled" =true;

会话已更改。

/*看看optimizer_features_enable设为8.1.7,而_gby_hash_aggregation_enabled为true,这种"矛盾"情况下的表现*/

SQL> select t2,count(*) from youyus group by t2;

T2 COUNT(*)

---------- ----------

30 2

20 2

40 3

10 2

执行计划

----------------------------------------------------------

Plan hash value: 2940504347

-------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost |

-------------------------------------------------------------

| 0 | SELECT STATEMENT | | 4 | 8 | 10 |

| 1 | HASH GROUP BY | | 4 | 8 | 10 |

| 2 | TABLE ACCESS FULL| YOUYUS | 9 | 18 | 1 |

-------------------------------------------------------------

Note

-----

- cpu costing is off (consider enabling it)

/*居然仍采用了HASH GROUP BY,看起来类似_gby_hash_aggregation_enabled这类参数优先级要高于optimizer_features_enable*/

9i上的表现如下:

SQL> select * from v$version;

BANNER

----------------------------------------------------------------

Oracle9i Enterprise Edition Release 9.2.0.4.0 - Production

PL/SQL Release 9.2.0.4.0 - Production

CORE 9.2.0.3.0 Production

TNS for Linux: Version 9.2.0.4.0 - Production

NLSRTL Version 9.2.0.4.0 - Production

SQL> analyze table youyus_9i compute statistics for all columns;

Table analyzed.

SQL> select * from youyus_9i;

T1 T2

-- ----------

A 10

B 10

F 30

G 30

H 40

I 40

J 40

L 20

M 20

9 rows selected.

SQL> alter session set optimizer_mode=ALL_ROWS;

Session altered.

SQL> select t2,count(*) from youyus_9i group by t2;

T2 COUNT(*)

---------- ----------

10 2

20 2

30 2

40 3

Execution Plan

----------------------------------------------------------

0 SELECT STATEMENT Optimizer=ALL_ROWS (Cost=4 Card=4 Bytes=8)

1 0 SORT (GROUP BY) (Cost=4 Card=4 Bytes=8)

2 1 TABLE ACCESS (FULL) OF 'YOUYUS_9I' (Cost=2 Card=21 Bytes

=42)

/*9i下虽然没有指定order by,但我们可以放心返回的数据总是排序的;*/

SQL> alter session set "_gby_hash_aggregation_enabled" =true;

alter session set "_gby_hash_aggregation_enabled" =true

*

ERROR at line 1:

ORA-02248: invalid option for ALTER SESSION

/*9i下不存在_gby_hash_aggregation_enabled隐式参数*/

That's great!

应用脚本没有数据一定要正确排序的强制要求,但使用HASH GROUP BY算法后临时表空间的使用量大幅上升,远大于之前在9i上的使用量,最后导致语句无法顺利完成。首先想到的当然是通过修改_gby_hash_aggregation_enabled参数恢复到原先的SORT算法,并观察其临时表空间使用量:

SQL> alter session set "_gby_hash_aggregation_enabled"=false;

Session altered.

SQL> select /* g_all_cdr01,60 */

2 calling_num mobile_number,

3 lac,

4 lpad(cell_id,5,'0') cell_id,

5 count(*) c,

6 sum(call_duration) call_duration,

7 sum(decode(record_type,'00',1,0)*call_duration) moc_call_duration,

8 sum(decode(record_type,'01',1,0)*call_duration) mtc_call_duarion

9 from gprs_bill.g_all_cdr01

10 where substr(calling_num,1,7) in (select mobile_prefix from gprs_bill.zou_mobile_prefix)

11 group by

12 calling_num ,

13 lac,

14 lpad(cell_id,5,'0');

Execution Plan

----------------------------------------------------------

Plan hash value: 4013005149

--------------------------------------------------------------------------------

-------------------

| Id | Operation | Name | Rows | Bytes |TempSpc| Cost

(%CPU)| Time |

--------------------------------------------------------------------------------

-------------------

| 0 | SELECT STATEMENT | | 229K| 9880K| | 103

3K (3)| 03:26:41 |

| 1 | SORT GROUP BY | | 229K| 9880K| 22M| 103

3K (3)| 03:26:41 |

|* 2 | HASH JOIN RIGHT SEMI| | 229K| 9880K| | 103

0K (3)| 03:26:10 |

| 3 | TABLE ACCESS FULL | ZOU_MOBILE_PREFIX | 1692 | 13536 | | 1

1 (0)| 00:00:01 |

| 4 | TABLE ACCESS FULL | G_ALL_CDR01 | 388M| 13G| | 102

6K (2)| 03:25:21 |

--------------------------------------------------------------------------------

-------------------

Predicate Information (identified by operation id):

---------------------------------------------------

2 - access("MOBILE_PREFIX"=SUBSTR("CALLING_NUM",1,7))

/*重新执行出现问题的脚本*/

create table gprs_bill.zou_201007_cell_id as

select /* g_all_cdr01,60 */

calling_num mobile_number,

lac,

lpad(cell_id,5,'0') cell_id,

count(*) c,

sum(call_duration) call_duration,

sum(decode(record_type,'00',1,0)*call_duration) moc_call_duration,

sum(decode(record_type,'01',1,0)*call_duration) mtc_call_duarion

from gprs_bill.g_all_cdr01

where substr(calling_num,1,7) in (select mobile_prefix from gprs_bill.zou_mobile_prefix)

group by

calling_num ,

lac,

lpad(cell_id,5,'0');

可以看到在会话级别设置_gby_hash_aggregation_enabled为false后,Oracle不再采用10g中的HASH分组算法;因为该CTAS SQL脚本运行时间较长,我们通过动态视图V$SORT_USAGE来观察其运行期间的排序段使用量:

SQL> set time on; 14:30:59 SQL> select tablespace,contents,segtype,blocks*8/1024 from v$sort_usage where username='GPRS_BILL'; TABLESPACE CONTENTS SEGTYPE BLOCKS*8/1024 ------------------------------- --------- --------- ------------- TS_HQY1_TEMP TEMPORARY SORT 9349 14:35:59 SQL> / TABLESPACE CONTENTS SEGTYPE BLOCKS*8/1024 ------------------------------- --------- --------- ------------- TS_HQY1_TEMP TEMPORARY SORT 10011 /*5分钟内共用10011-9349=662MB 临时空间*/ 15:02:46 SQL> select target ,totalwork,sofar,time_remaining,elapsed_seconds from v$session_longops where sofar!=totalwork; TARGET TOTALWORK SOFAR TIME_REMAINING ELAPSED_SECONDS ---------------------------------------------------------------- ---------- ---------- -------------- --------------- GPRS_BILL.G_ALL_CDR01 5575890 5435796 143 5557 15:05:10 SQL> select target ,totalwork,sofar,time_remaining,elapsed_seconds from v$session_longops where sofar!=totalwork; TARGET TOTALWORK SOFAR TIME_REMAINING ELAPSED_SECONDS ---------------------------------------------------------------- ---------- ---------- -------------- --------------- GPRS_BILL.G_ALL_CDR01 5575890 5562082 14 5692 15:05:13 SQL> select tablespace,contents,segtype,blocks*8/1024 from v$sort_usage where username='GPRS_BILL'; TABLESPACE CONTENTS SEGTYPE BLOCKS*8/1024 ------------------------------- --------- --------- ------------- TS_HQY1_TEMP TEMPORARY SORT 13835 15:12:22 SQL> select tablespace,contents,segtype,blocks*8/1024 from v$sort_usage where username='GPRS_BILL'; TABLESPACE CONTENTS SEGTYPE BLOCKS*8/1024 ------------------------------- --------- --------- ------------- TS_HQY1_TEMP TEMPORARY SORT 13922 /* 排序已经完成,排序段不再增长*/

该分组操作最后排序段使用量为13922MB,在客户可以接受的范围内。看起来新引入的HASH算法虽然有CPU成本低于SORT算法的优势,但可能消耗大量临时空间,可谓有得有失。